It's not always easy to distinguish real videos from staged ones on the web. That's probably never going to change, whether TikTok ends up banned in the US or not. However, a potentially bigger issue is the rising prevalence and sophistication of deepfakes, which use AI to create realistic scenes and sound bites of events that never occurred. Google has announced a new measure on YouTube that aims to combat this.

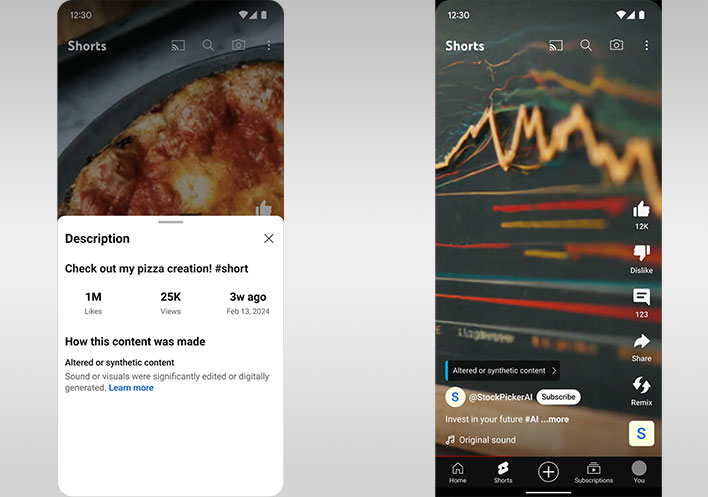

In a blog post, Google announced that YouTube now requires creators to label altered content that could be mistaken for a depiction of a real person, place, scene, or event. Google specifically mentions generative AI, though the requirement applies to any content that is "made with altered or synthetic media."

Examples include the following:

- Using the likeness of a realistic person: Digitally altering content to replace the face of one individual with another's or synthetically generating a person’s voice to narrate a video.

- Altering footage of real events or places: Such as making it appear as if a real building caught fire, or altering a real cityscape to make it appear different from reality.

- Generating realistic scenes: Showing a realistic depiction of fictional major events, like a tornado moving toward a real town.

The updated policy and label requirement do not apply to content that is "clearly unrealistic." The same goes for animated videos, special effects, and the use of generative AI to help with production.

Google's timing comes during a presidential election year in the United States. Current president Joe Biden will square off against former president Donald Trump this November. Both parties are active on various social media platforms, as are entities with vested interests in the outcome, and big tech companies are engaged in an ongoing battle to mitigate misinformation campaigns.

The new label also arrives at a time when generative AI seems to be improving by leaps and bounds, driven by advances in hardware and software. This could lead to deepfakes that are nearly impossible to distinguish from the real thing with the naked eye.

"We won’t require creators to disclose if generative AI was used for productivity, like generating scripts, content ideas, or automatic captions. We also won’t require creators to disclose when synthetic media is unrealistic and/or the changes are inconsequential," YouTube states.

In addition to what's outlined in the blog post, there's a support page that goes into more detail on what type of content does and does not require the new label.

Top and thumbnail images generated by Microsoft Copilot (via Designer)