SK hynix, which notably supplies memory chips to NVIDIA (among other customers), said it has begun volume production of the world's first 12-layer HBM3E (high bandwidth memory 3E) chips with a 36GB capacity, the largest of any HBM product to date. According to SK hynix, customers will get their hands on the new parts by the end of this year.

That represents a quick turnaround from its 8-layer HBM3E, which began shipping to customers for the first time just six months ago. Along with the bump in capacity, SK hynix says its 12-layer, 36GB HBM3E delivers faster speeds of up to 9.6Gbps. It also comes just two months after SK hynix flexed GDDR7 memory at up to 40Gbps for future GPUs.

The big upshot is a boost for memory-hungry AI workloads. According to SK hynix, a single GPU equipped with four of these HBM3E stacks and running Llama 3 70B, a large language model (LLM), could read all 70 billion parameters 35 times in a single second.
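SK hynix didn't show its math, but the "35 times per second" figure can be roughly reproduced from the 9.6Gbps pin speed. The sketch below is a back-of-the-envelope check under our own assumptions, not SK hynix's method: each HBM3E stack uses a 1024-bit interface, every pin runs at the full 9.6Gbps, the model's weights are stored in a 16-bit format (2 bytes per parameter), and real-world overheads are ignored. The constant and function names are illustrative only.

```python
# Rough check of the "read 70B parameters 35 times per second" claim.
# Assumptions (ours, not SK hynix's): 1024-bit interface per stack,
# full 9.6 Gbps on every pin, 16-bit weights, no protocol overhead.

PIN_SPEED_GBPS = 9.6      # per-pin data rate, gigabits per second
BUS_WIDTH_BITS = 1024     # assumed interface width of one HBM3E stack
STACKS_PER_GPU = 4        # four stacks, as in SK hynix's example
PARAMS = 70e9             # Llama 3 70B parameter count
BYTES_PER_PARAM = 2       # assumed FP16/BF16 weights


def stack_bandwidth_gb_s(pin_speed_gbps: float, bus_width_bits: int) -> float:
    """Peak bandwidth of one stack in gigabytes per second."""
    return pin_speed_gbps * bus_width_bits / 8


def full_reads_per_second() -> float:
    """How many times per second all model weights could be streamed once."""
    total_bw_gb_s = stack_bandwidth_gb_s(PIN_SPEED_GBPS, BUS_WIDTH_BITS) * STACKS_PER_GPU
    model_size_gb = PARAMS * BYTES_PER_PARAM / 1e9  # 140 GB of weights
    return total_bw_gb_s / model_size_gb


if __name__ == "__main__":
    per_stack = stack_bandwidth_gb_s(PIN_SPEED_GBPS, BUS_WIDTH_BITS)
    print(f"Per stack: {per_stack:.1f} GB/s")                        # 1228.8 GB/s
    print(f"Full passes per second: {full_reads_per_second():.1f}")  # 35.1
```

Under these assumptions, four stacks deliver roughly 4.9TB/s, which works out to about 35 full passes over 140GB of weights per second. Four 36GB stacks (144GB total) are also just enough to hold those weights.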

"SK hynix has once again broken through technological limits demonstrating our industry leadership in AI memory," said Justin Kim, President (Head of AI Infra) at SK hynix. "We will continue our position as the No.1 global AI memory provider as we steadily prepare next-generation memory products to overcome the challenges of the AI era."

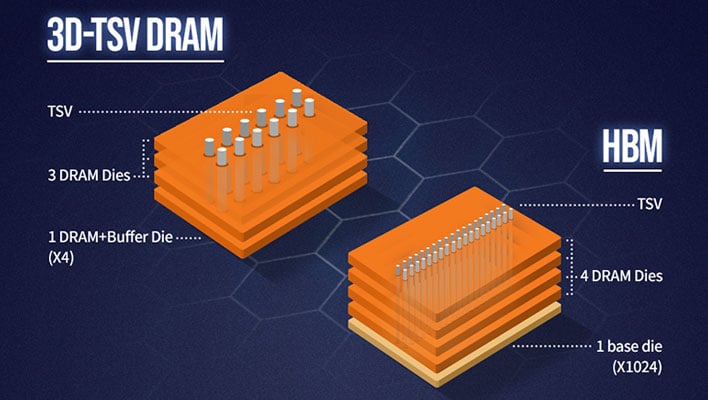

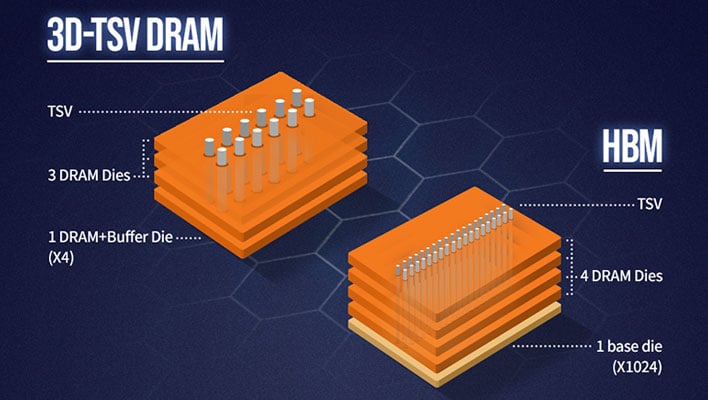

The 50% bump in capacity over SK hynix's previous 8-layer solution comes from stacking a dozen 3GB DRAM chips at the same overall thickness as before. To make this possible, SK hynix made each DRAM chip 40% thinner and used TSV (Through-Silicon Via) technology. While not new (or exclusive to SK hynix), TSV involves making perforations through the entire silicon wafer to form thousands of vertical interconnections between its front and back.
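The capacity math is simple multiplication, and the thickness claim can be sanity-checked the same way. The sketch below assumes the 40% reduction refers to die thickness (as SK hynix describes it) and, as a simplification of our own, treats stack height as the sum of the DRAM dies alone, ignoring the base die, bonding layers, and molding compound. Names are illustrative only.

```python
# Capacity and rough height comparison: 8-layer vs 12-layer HBM3E.
# Simplifying assumption (ours): height = sum of DRAM die thicknesses only.

DIE_CAPACITY_GB = 3       # 3GB (24Gb) DRAM die
OLD_LAYERS, NEW_LAYERS = 8, 12
THINNING = 0.40           # assumed: each die is 40% thinner than before


def stack_capacity_gb(layers: int, die_capacity_gb: float = DIE_CAPACITY_GB) -> float:
    """Total stack capacity in gigabytes."""
    return layers * die_capacity_gb


def relative_die_height(layers: int, die_thickness: float) -> float:
    """Total DRAM silicon height, in units of one previous-generation die."""
    return layers * die_thickness


old_cap = stack_capacity_gb(OLD_LAYERS)          # 24 GB
new_cap = stack_capacity_gb(NEW_LAYERS)          # 36 GB
capacity_gain = (new_cap - old_cap) / old_cap    # 0.5, i.e. the 50% bump

old_height = relative_die_height(OLD_LAYERS, 1.0)
new_height = relative_die_height(NEW_LAYERS, 1.0 - THINNING)

if __name__ == "__main__":
    print(f"Capacity: {old_cap:.0f}GB -> {new_cap:.0f}GB (+{capacity_gain:.0%})")
    print(f"DRAM silicon height: {old_height:.1f} -> {new_height:.1f} die units")
```

By this rough measure, twelve thinner dies occupy about 90% of the silicon height of eight older ones, which suggests why the extra layers can fit within the same overall stack thickness once the additional bonding gaps are accounted for.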

Additionally, SK hynix says it applied its Advanced MR-MUF (mass reflow molded underfill) process to get around structural issues that can arise when vertically stacking thinner chips. According to SK hynix, this delivers 10% better heat dissipation than the previous generation, along with improved control over warping.

The timing of SK hynix's 12-layer HBM3E announcement comes as demand for AI hardware reaches a fever pitch. There's also a bit of an arms race between SK hynix and rival Micron, the latter of which announced 12-Hi HBM3E memory stacks earlier this month, also at a 36GB capacity. Samsung is in the race as well with its Shinebolt HBM3E memory.