OpenAI's Groundbreaking GPT-4o Teaches ChatGPT Intimate Real-Time Conversation

The "o" in "GPT-4o" stands for "Omni," and it refers to GPT-4o's ability to handle nearly any kind of input "natively." You see, other multimodal AI models, including GPT-4 Turbo, require a helper model to transcribe what they see into text form so that they can then work with that data. GPT-4o doesn't work that way. For the first time, the same model does all of the text, image, video, and audio processing, and it generates text, images, and audio itself rather than handing those jobs off to other models.

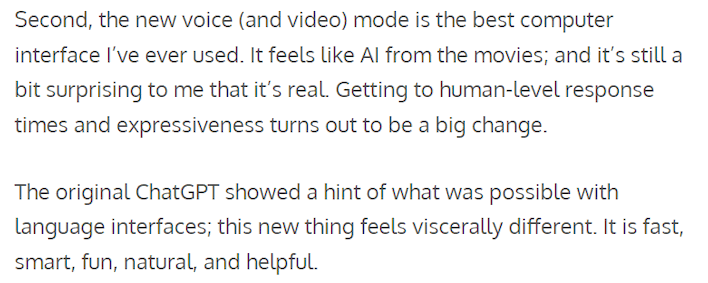

This means that when you share an image or speak to GPT-4o, it has access to all of the same information that a human does. It can see specific details in images that are hard to capture in text, like themes or moods. Likewise, when you speak to GPT-4o, it can make inferences and judgments based on the way you say things and the way your voice sounds as well as the content of what you say.

The model is so quick to respond and so natural with its responses that, in OpenAI's livestreamed stage demo, GPT-4o actually talks over the presenters a couple of times. We've embedded the demo below, where you can watch OpenAI employees chat with GPT-4o running on a smartphone. The demo really has to be seen to be believed, so we won't waste your time describing it. Just click the video, skip to around the ten-minute mark, and watch.

It's largely for this reason that you can't actually play with GPT-4o like they do in the video just yet. OpenAI says that GPT-4o's text and image capabilities are "rolling out" in ChatGPT for both free-tier and Plus users, but that the extremely impressive voice mode will require a Plus subscription when it becomes available "in the coming weeks."