There's been a lot of fuss this year over frame buffer memory. Is 8GB of GDDR6 RAM on a 128-bit bus really good enough? You'd better hope so, because that's what you get on the new GeForce RTX 4060 and one model of the RTX 4060 Ti. NVIDIA says it's just fine, though, and offered more detail in a recent blog post explaining why, along with a lot of other insight into graphics memory in general.

Frankly speaking, your veteran hardware enthusiasts and technical types won't get a lot out of the article. A huge portion of it is spent explaining what video RAM is, how it's used, and why caching can help reduce the need for massive video memory bandwidth.

You see, NVIDIA's concerned about some negative buzz around their new GeForce cards. If you haven't heard, the GeForce RTX 4060 and its "Ti" sibling only come with 128-bit memory interfaces. As a result, NVIDIA was left with the option of shipping cards with 8GB or 16GB of memory. To be clear, we feel that 8GB of video RAM on what is quite honestly an entry-level gaming graphics card is perfectly serviceable these days.
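Why only 8GB or 16GB? Here's a rough sketch of the arithmetic. It rests on our own assumptions, not anything spelled out in NVIDIA's post: each GDDR6 chip talks to the GPU over a 32-bit interface and holds 2GB, and a "clamshell" layout doubles the chip count on the same bus. The function name and its defaults are ours, purely for illustration.

```python
def capacity_options_gb(bus_width_bits: int,
                        chip_interface_bits: int = 32,  # assumed GDDR6 chip interface width
                        chip_capacity_gb: int = 2):     # assumed 16Gb (2GB) chips
    """Return (normal, clamshell) memory capacities in GB for a given bus width."""
    # One chip per 32-bit slice of the memory bus.
    chips = bus_width_bits // chip_interface_bits
    normal = chips * chip_capacity_gb
    # Clamshell: two chips share each slice, doubling capacity but not bandwidth.
    clamshell = 2 * normal
    return normal, clamshell


if __name__ == "__main__":
    print(capacity_options_gb(128))  # (8, 16)
```

Under those assumptions, a 128-bit bus lands on exactly the two options NVIDIA had: four chips for 8GB, or eight for 16GB.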

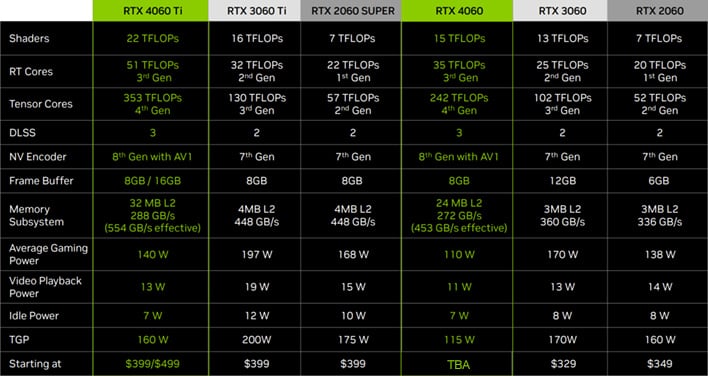

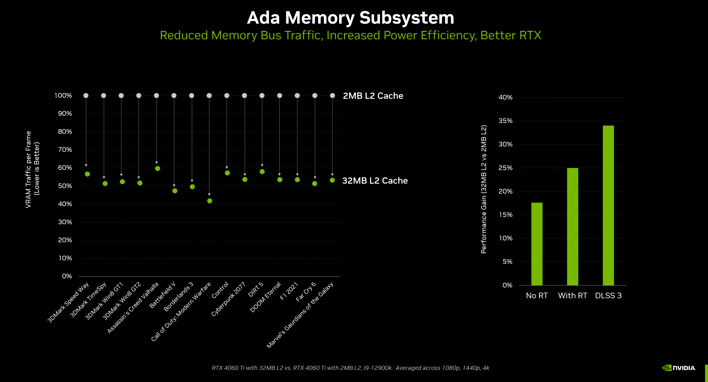

That 128-bit memory interface is still a matter of concern, though. NVIDIA goes in hard on the idea that, since the new Ada cards have eight times as much L2 cache as the RTX 2060 and RTX 3060—that's 16 times as much L2 cache per memory controller channel—it's "like" having even more memory bandwidth than the Ampere card had. To quote:

"This 50% traffic reduction allows the GPU to use its memory bandwidth 2X more efficiently. As a result, in this scenario, isolating for memory performance, an Ada GPU with 288 GB/sec of peak memory bandwidth would perform similarly to an Ampere GPU with 554 GB/sec of peak memory bandwidth."

It's absolutely true that, particularly for games, big caches can help mask the effects of a memory bandwidth disadvantage; just compare the Radeon RX 6900 XT (512 GB/sec) against the GeForce RTX 3080 (760 GB/sec). We still take issue with that statement though, because there are plenty of situations where a big-but-still-relatively-small cache of 32 megabytes is not going to help you win a race against a card with more than double the bandwidth.
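For the curious, the reasoning behind NVIDIA's "2X more efficiently" line can be sketched with a very simple model: if the cache removes some fraction of the traffic that would otherwise go to DRAM, the remaining bandwidth stretches proportionally further. This is our simplification, not NVIDIA's methodology, and the function name is hypothetical.

```python
def effective_bandwidth(peak_gb_per_sec: float, traffic_reduction: float) -> float:
    """Bandwidth a cache-less GPU would need to match, under a simple traffic model.

    traffic_reduction is the fraction of DRAM traffic the cache absorbs (0.5 = 50%).
    """
    if not 0.0 <= traffic_reduction < 1.0:
        raise ValueError("traffic_reduction must be in [0, 1)")
    # Only (1 - reduction) of the requests ever reach DRAM.
    return peak_gb_per_sec / (1.0 - traffic_reduction)


if __name__ == "__main__":
    # Figures from NVIDIA's quote: 288 GB/sec peak, 50% traffic reduction.
    print(effective_bandwidth(288.0, 0.5))  # 576.0
```

The simple model gives 576 GB/sec, in the same ballpark as the 554 GB/sec NVIDIA quotes. It only holds for as long as the cache keeps absorbing that traffic, which is exactly what breaks down in the situations described above.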

One of those situations is when gaming in high resolutions. Frame buffers grow in proportion to the pixel count, so stepping up from 1080p to 4K quadruples their size. Once your frame buffer can no longer fit in cache, you have to work on it in memory, and that's when memory bandwidth becomes a big deal. This is part of why AMD's Radeon RX 6000 GPUs fall off a bit against NVIDIA's competition when testing in 4K resolution.
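To put some rough numbers on that, here's a back-of-the-envelope sketch. It assumes a single render target at 8 bytes per pixel (think a 16-bit-per-channel HDR format) and a 32MB cache that holds nothing else; both are our illustrative assumptions. Real games juggle many render targets and the cache is shared with everything else, so actual pressure is higher.

```python
CACHE_BYTES = 32 * 1024 * 1024  # assumed 32MB L2, treated as fully available

RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
}


def framebuffer_bytes(width: int, height: int, bytes_per_pixel: int = 8) -> int:
    """Size of one render target; scales linearly with pixel count."""
    return width * height * bytes_per_pixel


def fits_in_cache(width: int, height: int, bytes_per_pixel: int = 8) -> bool:
    """True if a single render target fits entirely in the assumed cache."""
    return framebuffer_bytes(width, height, bytes_per_pixel) <= CACHE_BYTES


if __name__ == "__main__":
    for name, (w, h) in RESOLUTIONS.items():
        size_mib = framebuffer_bytes(w, h) / (1024 * 1024)
        print(f"{name}: {size_mib:.1f} MiB, fits in cache: {fits_in_cache(w, h)}")
```

With these assumptions, the single target squeezes into cache at 1080p and 1440p but is roughly twice the cache size at 4K.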

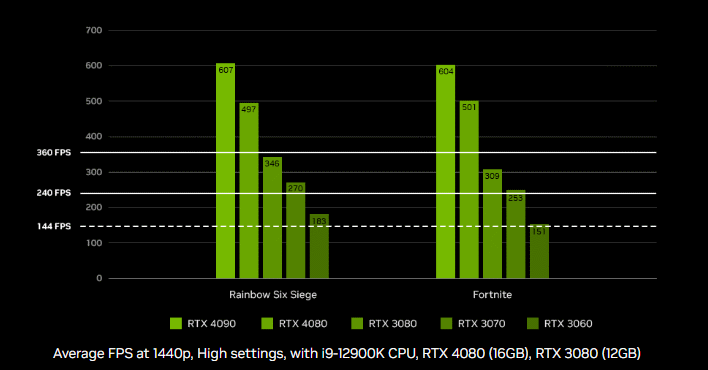

Understanding that, NVIDIA's emphasis on the idea that these cards are meant for 1080p gamers becomes a little suspect. Remember that the company has advertised both the GeForce RTX 3060 and the RTX 2060 SUPER as being suited for gaming in 1440p resolution. Both of those GPUs are drastically less capable than a GeForce RTX 4060 in compute, so why are we falling back to 1080p?

To be fair to NVIDIA, game requirements have also increased drastically in the two years since the GeForce RTX 3060 came out. Developers have finally started to target the current-generation consoles with their games and game engines, and the demands that these new titles place on gamers' PCs are devastating compared to "cross-gen" titles that run playably on a measly Xbox One S.

Of course, those same elevated game requirements are exactly why gamers are grumbling about the 8GB of video RAM on the GeForce RTX 4060 and RTX 4060 Ti. NVIDIA explains that you can generally crank your game down to the "High" preset instead of "Ultra" and enjoy a fundamentally unchanged experience with good performance. If that's not acceptable to you, well, wait until July and shell out the extra hundred bucks for the RTX 4060 Ti 16GB.

We're busily benchmarking the GeForce RTX 4060 and RTX 4060 Ti right now, and will have a review for you sooner rather than later. However, if you're not well-versed in the technical specifics of graphics memory, NVIDIA's post is a pretty decent place to start learning.