Computer graphics in the modern age are already a delicate dance between CPU and GPU. Throw in the power management concerns that come from operating in a constrained environment, such as a laptop, and you add a whole further level of complexity. Historically, we allowed each component in the system to manage its own power usage, but as it happens, that's not necessarily the best idea.

While gaming on a machine that has a fixed power budget, like a laptop with limited cooling, you want the lion's share of the power to go to the component that is doing the most work. Usually, that means the GPU, because most games are GPU-limited. In that case, you'd ideally shut down the parts of the CPU that aren't being used and divert that power to the GPU.

That's exactly the purpose of AMD's SmartShift technology, and both Intel and NVIDIA announced their latest takes on the idea back at CES two weeks ago. Intel's form is built into its Deep Link tech, while NVIDIA's 4th-generation Max-Q tech includes something called the "CPU Optimizer" that serves essentially the same function.
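To make the budget-shifting idea concrete, here is a minimal sketch in Python. It is not how SmartShift, Deep Link, or CPU Optimizer actually work, since none of the vendors publish their algorithms; the function name `split_power_budget`, the load figures, and the wattage floors are all hypothetical and chosen purely for illustration.

```python
def split_power_budget(total_watts, cpu_load, gpu_load,
                       cpu_floor=10.0, gpu_floor=10.0):
    """Split a fixed power budget between CPU and GPU in proportion to load.

    Illustrative assumptions, not vendor behavior:
    - cpu_load and gpu_load are utilization figures in the range 0.0 to 1.0
    - each component keeps a minimum floor so it never loses all power
    """
    if total_watts < cpu_floor + gpu_floor:
        raise ValueError("budget is smaller than the combined floors")

    # Only the power above the two floors can be shifted around.
    flexible = total_watts - cpu_floor - gpu_floor
    total_load = cpu_load + gpu_load

    # With no load at all, fall back to an even split.
    gpu_share = 0.5 if total_load == 0 else gpu_load / total_load

    gpu_watts = gpu_floor + flexible * gpu_share
    cpu_watts = total_watts - gpu_watts
    return cpu_watts, gpu_watts


# A GPU-limited game: the GPU is busy, the CPU is mostly idle.
cpu_watts, gpu_watts = split_power_budget(100.0, cpu_load=0.2, gpu_load=0.8)
print(f"CPU: {cpu_watts:.1f} W, GPU: {gpu_watts:.1f} W")
```

In a GPU-limited workload like the one in the usage line, most of the shared budget ends up on the GPU side, which is the behavior described above.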

All this is old news, so what's the point of this article? As a part of that CPU Optimizer feature, NVIDIA briefly mentions a specific hardware block built into the GPU that it doesn't name, but instead calls "a dedicated command processor." NVIDIA says that the command processor can be used to offload work from the CPU to the GPU when it makes sense to do so.

When does it make sense to do so? Well, when the work could benefit from being run on a processor that sits much closer to the graphics cores themselves. One example that NVIDIA offers is command validation, a process of pointer verification and bounds-checking for GPU commands. Doing this on the CPU requires the CPU to issue commands to the GPU, adding the PCIe bus latency to every request. Moving it to a dedicated processor on the GPU could accelerate processes like this significantly.
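For readers unfamiliar with the term, command validation can be sketched roughly as follows. This is a simplified Python illustration of pointer verification and bounds-checking in general, not NVIDIA's implementation; the `Command` type, its fields, and the `allocations` table are hypothetical names invented for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Command:
    """A hypothetical GPU command that touches a region of a buffer."""
    buffer_id: int  # handle standing in for a pointer to a GPU allocation
    offset: int     # byte offset into that allocation
    length: int     # number of bytes the command will access


def validate_command(cmd, allocations):
    """Return True if cmd refers to a known buffer and stays inside it.

    allocations maps each valid buffer_id to its size in bytes.
    """
    size = allocations.get(cmd.buffer_id)
    if size is None:
        return False  # pointer verification: the handle is unknown
    if cmd.offset < 0 or cmd.length < 0:
        return False  # negative values can never be valid
    # Bounds check: the accessed range must end within the allocation.
    return cmd.offset + cmd.length <= size


allocations = {1: 4096}  # one 4 KiB buffer with handle 1
print(validate_command(Command(1, 0, 4096), allocations))    # fits exactly
print(validate_command(Command(1, 4000, 200), allocations))  # runs past the end
print(validate_command(Command(2, 0, 16), allocations))      # unknown handle
```

Each check is cheap on its own, so the cost that matters is where the check runs and how far the result has to travel, which is the point NVIDIA is making about bus latency.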

The GSP firmware README document.

All of this is pretty interesting, but it becomes even more interesting when we peer at a document buried deep in NVIDIA's Linux drivers. The README files that go with GeForce driver version 510.39 for Linux include some discussion of a device called the GPU System Processor, or GSP. The README states that the GSP can be used to "offload GPU initialization and management tasks." That sounds familiar, doesn't it?

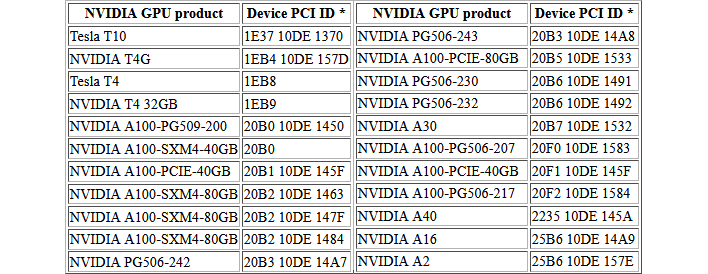

According to the README, the GSP is already enabled by default on "a few select products." Those products are listed at the bottom of the article, and consist exclusively of Turing- and Ampere-based datacenter products, such as the NVIDIA A100 and Tesla GRID RTX T10. Before you throw a fit that your desktop GeForce part isn't included, note that NVIDIA says that while using the GSP, the driver does not support "display-related or power-management features."

The list of processors that currently support GSP operation.

Given that the supported cards are generally meant for headless compute-acceleration operation, that's not too surprising. Fortunately, NVIDIA says that these features will be added to the GSP firmware in future driver releases. That matters, because the README also states that more products will take advantage of the GSP in the future.

So how does this tie back to all the discussion of CPU Optimizer and laptops? Well, it's a reasonable guess that the core of the "CPU Optimizer," the so-called "dedicated command processor," may in fact be one and the same as the GPU System Processor. That's conjecture, but it makes sense. We've reached out to NVIDIA for confirmation, but we haven't heard back yet. We'll update this post if we do.