A little over five weeks ago, NVIDIA officially introduced its long-anticipated Ampere GPU architecture to the server and high performance computing (HPC) markets. We are still waiting for Ampere to manifest in consumer graphics cards, like the GeForce RTX 3090 and GeForce RTX 3080. Meanwhile, NVIDIA anticipates Ampere will find its way into more than 50 high performance servers by the end of the year.

According to NVIDIA, its hardware partners are placing orders for Ampere at a higher clip than they have for any previous GPU.

"Adoption of NVIDIA A100 GPUs into leading server manufacturers’ offerings is outpacing anything we’ve previously seen," said Ian Buck, vice president and general manager of Accelerated Computing at NVIDIA. "The sheer breadth of NVIDIA A100 servers coming from our partners ensures that customers can choose the very best options to accelerate their data centers for high utilization and low total cost of ownership."

NVIDIA is serving several big names, including ASUS, Cisco, Dell, Fujitsu, Gigabyte, Hewlett Packard, Lenovo, Super Micro, and others. The company anticipates its A100 GPU will power 30 systems this summer, followed by more than 20 additional servers before the calendar year switches over.

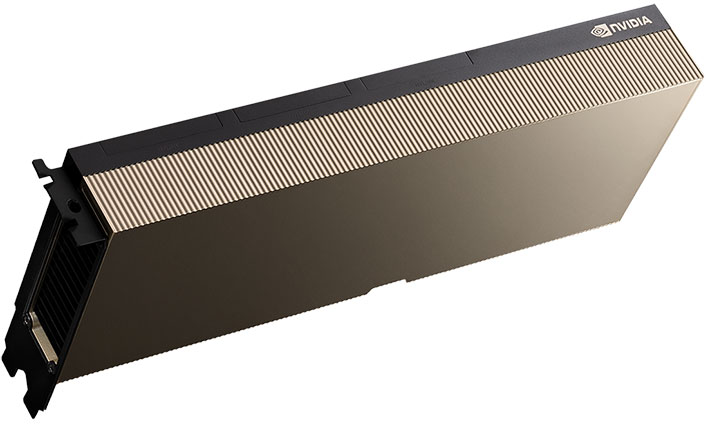

Ampere is a beastly GPU architecture built on a 7-nanometer node. The A100 specifically sports 54 billion transistors crammed into a slice of silicon with a die size of 826 mm². Other specifications include:

- FP64 CUDA Cores: 3,456

- FP32 CUDA Cores: 6,912

- Tensor Cores: 432

- Streaming Multiprocessors: 108

- FP64: 9.7 teraFLOPS

- FP64 Tensor Core: 19.5 teraFLOPS

- FP32: 19.5 teraFLOPS

It also sports 40GB of GPU memory, with 1.6TB/s of memory bandwidth.
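As a quick sanity check on those numbers, here is a minimal Python sketch that divides the listed totals by the SM count and back-calculates the clock speed the quoted throughput would imply. The constant and function names are made up for illustration, and the calculation assumes each CUDA core completes one fused multiply-add (two floating point operations) per clock, which is an assumption on our part rather than something stated in the spec list.

```python
# Figures taken from the spec list above.
FP32_CORES = 6912
FP64_CORES = 3456
TENSOR_CORES = 432
SM_COUNT = 108
FP32_TFLOPS = 19.5
FP64_TFLOPS = 9.7


def per_sm(total_units, sm_count=SM_COUNT):
    """Return how many units of a given type sit in each streaming multiprocessor."""
    return total_units / sm_count


def implied_clock_ghz(tflops, cores, flops_per_core_per_clock=2):
    """Back out the clock (GHz) that a quoted teraFLOPS figure implies.

    Assumption: each core retires one fused multiply-add (2 FLOPs) per clock.
    """
    flops_per_second = tflops * 1e12
    return flops_per_second / (cores * flops_per_core_per_clock) / 1e9


if __name__ == "__main__":
    print(f"FP32 cores per SM:   {per_sm(FP32_CORES):.0f}")
    print(f"FP64 cores per SM:   {per_sm(FP64_CORES):.0f}")
    print(f"Tensor Cores per SM: {per_sm(TENSOR_CORES):.0f}")
    print(f"Implied clock (FP32): {implied_clock_ghz(FP32_TFLOPS, FP32_CORES):.2f} GHz")
    print(f"Implied clock (FP64): {implied_clock_ghz(FP64_TFLOPS, FP64_CORES):.2f} GHz")
```

Under that assumption, both the FP32 and FP64 figures point to roughly the same clock speed, which suggests the listed core counts and throughput numbers are at least internally consistent.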

"NVIDIA A100 GPU is a 20x AI performance leap and an end-to-end machine learning accelerator, from data analytics to training to inference," said NVIDIA founder and CEO Jensen Huang. "For the first time, scale-up and scale-out workloads can be accelerated on one platform. NVIDIA A100 will simultaneously boost throughput and drive down the cost of data centers."

In related news, NVIDIA also unveiled the A100 in a PCI Express form factor, to complement the four-way and eight-way HGX A100 configurations launched last month. This gives customers a bit more flexibility in how they ultimately adopt and utilize Ampere for their AI and scientific workloads, from single A100 GPU systems to those touting 10 or more GPUs.

Good stuff. Now let's hope the next round of GeForce RTX cards arrives soon.