Microsoft AI Surpasses Humans In Natural Language Understanding With SuperGLUE Benchmark

The SuperGLUE benchmark consists of ten tasks that test an AI's language understanding, covering question answering, use of context, reasoning from a premise, and other language skills. Microsoft illustrates one of these tasks with the following example:

Given the premise “the child became immune to the disease” and the question “what’s the cause for this?,” the model is asked to choose an answer from two plausible candidates: 1) “he avoided exposure to the disease” and 2) “he received the vaccine for the disease.”

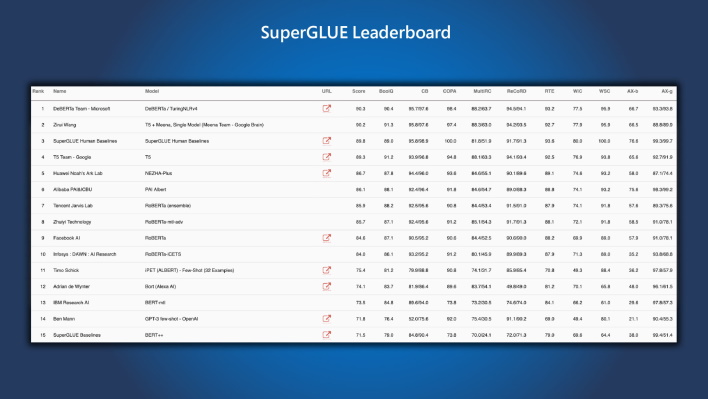

To complete the task, one must understand the relationship between the premise and each of the options. This is incredibly simple for a human, as we already have all the background knowledge we need, but an AI can struggle with it. To better tackle tasks like this, Microsoft scaled its AI up to a larger version with 48 transformer layers and 1.5 billion parameters. The result is that the DeBERTa model now scores 90.3, which puts it at the top of the leaderboard. For reference, the human baseline sits in third place with a score of 89.8.
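For readers who want to see what such a task looks like in practice, here is a minimal Python sketch of the example above. It is an illustration only, not Microsoft's code or the official SuperGLUE tooling: the names `CausalChoiceExample`, `choose`, `accuracy`, and `toy_scorer` are hypothetical, the label marking the vaccine answer as correct is our own annotation, and the scorer is a hand-written stand-in for a trained model such as DeBERTa.

```python
from dataclasses import dataclass
from typing import Callable, Sequence, Tuple


@dataclass(frozen=True)
class CausalChoiceExample:
    """One two-choice question in the style of the example above (hypothetical type)."""
    premise: str
    question: str
    choices: Tuple[str, str]
    label: int  # index of the correct choice (our own annotation)


# A scorer maps (premise, question, choice) to a plausibility score.
# A real system would put a trained language model here.
Scorer = Callable[[str, str, str], float]


def choose(example: CausalChoiceExample, scorer: Scorer) -> int:
    """Return the index of the choice the scorer finds most plausible."""
    scores = [scorer(example.premise, example.question, c) for c in example.choices]
    return max(range(len(scores)), key=scores.__getitem__)


def accuracy(examples: Sequence[CausalChoiceExample], scorer: Scorer) -> float:
    """Fraction of examples where the chosen answer matches the label."""
    correct = sum(choose(ex, scorer) == ex.label for ex in examples)
    return correct / len(examples)


example = CausalChoiceExample(
    premise="the child became immune to the disease",
    question="what's the cause for this?",
    choices=(
        "he avoided exposure to the disease",
        "he received the vaccine for the disease",
    ),
    label=1,
)


def toy_scorer(premise: str, question: str, choice: str) -> float:
    # Made-up stand-in for a model: it simply rewards the word "vaccine".
    # It encodes the background knowledge by hand, which is exactly
    # the part a real model has to learn.
    return 1.0 if "vaccine" in choice else 0.0


picked = choose(example, toy_scorer)
score = accuracy([example], toy_scorer)
```

The toy scorer only works because the needed knowledge is written into it by hand. A benchmark score like the ones quoted above comes from running a trained model over many such questions and measuring how often it picks the right answer.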

So what does this mean for the future of AI and its role in our daily lives? Microsoft says it looks to “support products like Bing, Office, Dynamics, and Azure Cognitive Services, powering a wide range of scenarios involving human-machine and human-human interactions via natural language (such as chatbot, recommendation, question answering, search, personal assist, customer support automation, content generation, and others).” The Redmond-based company also plans to release the DeBERTa model and its source code to the public for whatever use people can think of. Ultimately, Microsoft describes the result as one that “marks an important milestone toward general AI.”