Meta Backtracks On “Made With AI” Labels For Real Photos Following Angry Backlash

While AI continues to be the biggest buzzword in the tech sector, more people are finding new ways of editing images with AI-infused software. Tools that upscale an image, change the background, or remove unwanted objects help people make more appealing photos of real-life scenes, and that editing increasingly happens with the aid of AI. So when Meta began applying “Made with AI” tags on its platforms, it often attached the ominous tag to real photos that had merely been edited with AI tools. The result was a harsh backlash against Meta’s new policy from creators who use AI-infused editing software.

In a blog post, Meta remarked, “We want people to know when they see posts that have been made with AI. Earlier this year, we announced a new approach for labeling AI-generated content. An important part of this approach relies on industry standard indicators that other companies include in content created using their tools, which help us assess whether something is created using AI.”

Meta rolled out its “Made with AI” labels earlier this year, saying it would rely on “industry standard” indicators to decide which images receive the label. Before long, creators began criticizing the label after finding it attached to photos they had taken in real life. Following the backlash, PetaPixel tested Meta’s system and found that editing even a “tiny speck” of a photo with an AI tool could trigger the “Made with AI” label.

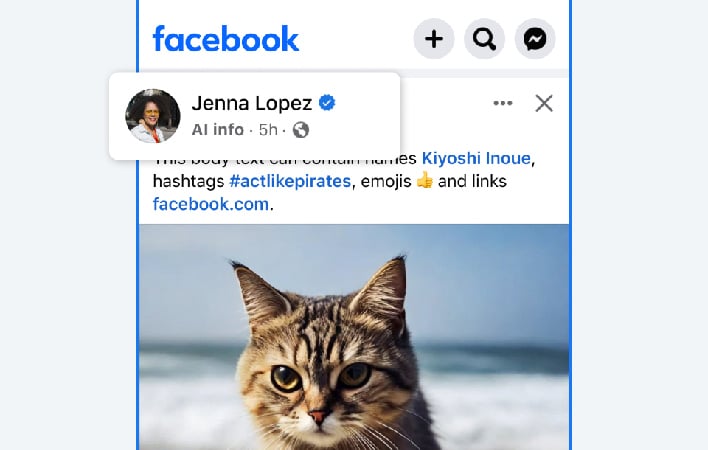

This led Meta and its Oversight Board to rethink how the company labels AI-generated content. The tech giant said it found that its labels based on “industry standards” were not “always aligned with people’s expectations and didn’t always provide enough context.” For example, content that had only been modified with AI-powered retouching tools still had the label attached. In response, Meta created a new label, “AI info,” to replace “Made with AI,” which people can click for more information.

Meta’s own Oversight Board prompted the change with feedback urging the company to update its approach to reflect the broader range of content that exists in today’s AI-infused world. The Oversight Board also argued that Meta had unnecessarily restricted freedom of expression by removing manipulated images that do not otherwise violate its Community Standards. According to a Meta blog post, the company’s decision to change the label and how it tags content was also informed by “extensive public opinion surveys and consultations with academics, civil society organizations, and others.”

The new label will cover a broader range of content than just the manipulated content the Oversight Board recommended labeling. If digitally created or altered images, video, or audio create a high risk of deceiving the public “on a matter of importance,” the company may add a more prominent label so users have access to more information about the content.

Meta remarked, “Based on feedback from the Oversight Board, experts and the public, we’re taking steps we think are appropriate for platforms like ours. We want to help people know when photorealistic images have been created or edited using AI, so we’ll continue to collaborate with industry peers through forums like the Partnership on AI and remain in a dialogue with governments and civil society – and we’ll continue to review our approach as technology progresses.”

Time will tell how well the “AI info” system works, and whether it meets the expectations of users across Facebook, Instagram, and Threads.