Like most people in tech, we have oh-so-many questions about Intel's discrete GPU efforts, and if things stay on track, we will get some official answers sometime this year. Until then, we're left to nibble on little nuggets Intel shares here and there, and to scour the rumor mill for scraps of information. On the rumor front, a supposedly leaked presentation slide outlining Intel's Xe GPU is making the rounds.

Just a quick refresher before we get into the meat of the rumor. For anyone who has been out of the loop for a bit, Intel is once again making a play in the discrete GPU space, something it has not done since Larrabee, which it scrapped a decade ago. Intel has assembled a sort of super team for its GPU division, spearheaded by Raja Koduri, the former boss of AMD's Radeon Technologies Group who oversaw the development of Vega.

Back in December, Koduri and several Intel engineers smiled for the camera in a photo op teasing the company's Xe graphics architecture. They wore shirts with an HP designation, presumably denoting "high performance." Whatever that part ends up being, it will be different from Intel's discrete Xe DG1 graphics card (pictured up top).

Okay, let's get back to the rumor.

Luke Larsen at Digital Trends claims to have obtained parts of an internal presentation from Intel's data center division, with details pertaining to the Xe architecture (codenamed Arctic Sound). Arguably the most interesting aspect of the supposed presentation is the reference to a 500-watt TDP.

We're a bit skeptical of the entire situation, as we are with any leak. In addition, the slide deck highlights features outlined in early 2019. Even if we assume the slide is real, it is entirely possible that details have changed since it was put together.

Leaked Slide Deck Supposedly Highlights Intel's Discrete Xe Graphics

Source: Digital Trends


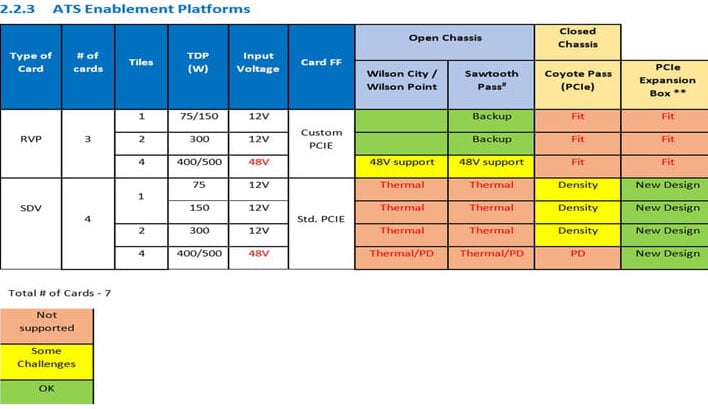

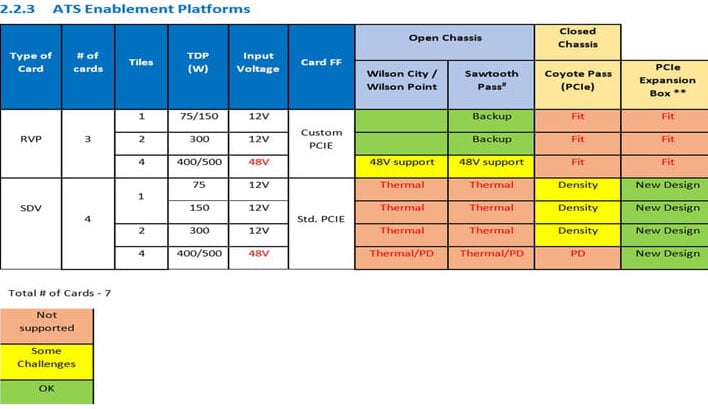

The unnamed source who shared the above slides was unable to identify some of the terminology. However, the presentation as a whole lists a single-tile GPU, a two-tile graphics card, and a four-tile card at the top of the stack.

Certain details are left out, such as the number of execution units (EUs). Incidentally, the three configurations conveniently align with a past driver leak that pointed to Intel's discrete GPUs having 128, 256, and 512 EUs. If we run wild with these leaks, we can guess that each tile has 128 EUs.

These "tiles," by the way, are chiplets. Intel's Xe cards are said to leverage Intel's Foveros 3D stacked packaging, as the company outlined for Ponte Vecchio, its first Xe-based GPU, aimed squarely at the high performance computing (HPC) and artificial intelligence (AI) markets.

According to the leaked presentation, the maxed-out four-chiplet solution has a 400W or 500W TDP. This seemingly indicates Intel is going hard at the high performance market in an attempt to compete with AMD and NVIDIA. However, if the slide is real, we don't think it will be a consumer card. To put things into perspective, a reference GeForce RTX 2080 Ti has a 250W TDP, while the overclocked Founders Edition model pushes that up slightly to 260W. Likewise, the Radeon RX 5700 XT has a 225W TDP, while the Radeon RX 5700 XT 50th Anniversary Edition nudges that up to 235W.

The higher TDP rating outlined in the slide is not unheard of; you just have to look outside the consumer space. NVIDIA's DGX-2 supercomputer, which NVIDIA bills as the largest GPU ever created, packs 450W Tesla V100 GPUs. Whatever the supposed slides are pointing to, it will likely find a similar home (in other words, it doesn't matter if it can run Crysis, because the 500W part is not destined for a gaming solution).

Further down the stack, however, the slide deck points to 75W and 150W single-chiplet solutions, and those could find their way into the consumer space. Either of those could end up as the DG1, at least in theory. So could the two-chiplet card with a 300W TDP, though it would have to outpace the GeForce RTX 2080 Ti to be truly interesting at that power rating.

The last two things the source noted are the use of high bandwidth memory (HBM) rather than GDDR6 or GDDR5, and PCI Express 4.0 support. According to the presentation, Xe will feature HBM2e memory. It's worth noting that Samsung and SK Hynix have both announced HBM2e products.

Is any of this real, or is the entire slide presentation fake? It's too early to tell. However, Intel is slated to launch its first modern discrete GPU product this year, so we will find out soon enough.