Google Responds To AI Search Telling Users To Eat Rocks And Put Glue On Pizza

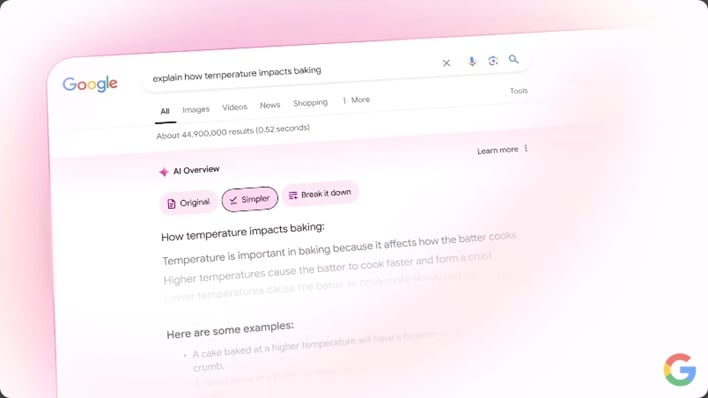

The tech giant unleashed a plethora of AI news during its recent Google I/O event. One of those announcements was that it would bring AI Overview out of Google Labs and make it available to everyone in the US, with more countries coming soon. Google touted AI Overview as capable of giving users both a quick overview of a topic and links to learn more. However, some users say the generative AI helper has been giving eyebrow-raising advice.

One example that has made its way across the internet involved a user searching for how to make cheese stick to pizza better. Google’s AI answered that they could use “non-toxic glue.” In another example, the AI told a user that President James Madison graduated from the University of Wisconsin 21 times, and that a dog has played in the NBA, NFL, and NHL. Google's AI Overview also claimed that geologists suggest eating a rock every day (which you should definitely NOT do).

In response to the failings of AI Overview, Google told the BBC that the errors were “isolated examples.” The statement added, “The examples we’ve seen are generally very uncommon queries, and aren’t representative of most people’s experiences.”

Google added that it had taken action in the cases that were “policy violations” and was using them to refine its AI Overview feature.

While Google may currently be in the hot seat over its AI, other companies have come under fire recently as well. Microsoft has faced backlash over Recall, an upcoming Copilot+ feature that will take thousands of screenshots of a user’s PC screen while they are using it. The feature is meant to help users quickly find things on their PC, and Microsoft says it runs locally on the device, so only the user will have access to the screenshots. But some are still leery of the feature, arguing that it will be a privacy and security nightmare.

There are a couple of takeaways from incidents like those involving AI Overview. One is that AI still needs more fine-tuning. Another is that people are not yet ready to fully trust AI. Regardless, companies like Google and Microsoft are riding the AI wave, and will continue to push the boundaries of what is acceptable as long as it remains profitable.