Pro Esports Player Built An AI System To Help AAA Game Devs Track Toxic Gamers

GGWP is an AI system developed by esports pro Dennis Fong, Crunchyroll founder Kun Gao, and Dr. George Ng of Goldsmiths, University of London and Singapore Polytechnic. It is backed by Sony Innovation Fund, Riot Games, YouTube co-founder Steve Chen, Twitch streamer Pokimane, and Twitch co-founders Emmett Shear and Kevin Lin, among other investors. The company has so far received $12 million in seed funding.

GGWP was created to address the toxicity that nearly every gamer has faced at some point or another. Fong was personally inspired to develop the AI after years of frustration with toxicity in the gaming community. He told Engadget, "You feel despondent because you’re like, I’ve reported the same guy 15 times and nothing’s happened."

Fong and his associates spoke with an unnamed AAA studio during the early stages of development to determine why toxicity has remained such a prevalent issue. The studio contended that toxicity was not its fault and that it was therefore not responsible for stopping it. It also insisted that there were simply too many reports and too much abuse for it to manage.

The team purportedly spoke with several other AAA studios, all of which claimed that they receive millions of reports from players each year and cannot deal with all of them. One game supposedly collected more than 200 million player-submitted reports in a single year. It is estimated that AAA studios address roughly 0.1% of reports each year and that these studios tend to employ fewer than ten moderators to tackle the problem.

Fong noted that the team currently has 35 engineers and data scientists, all of whom are devoted to "trying to help solve this problem." The team is hopeful that it can make an impact in gaming. Fong remarked, "The vast majority of this stuff is actually almost perfectly primed for AI to go tackle this problem. And it's just people just haven't gotten around to it yet."

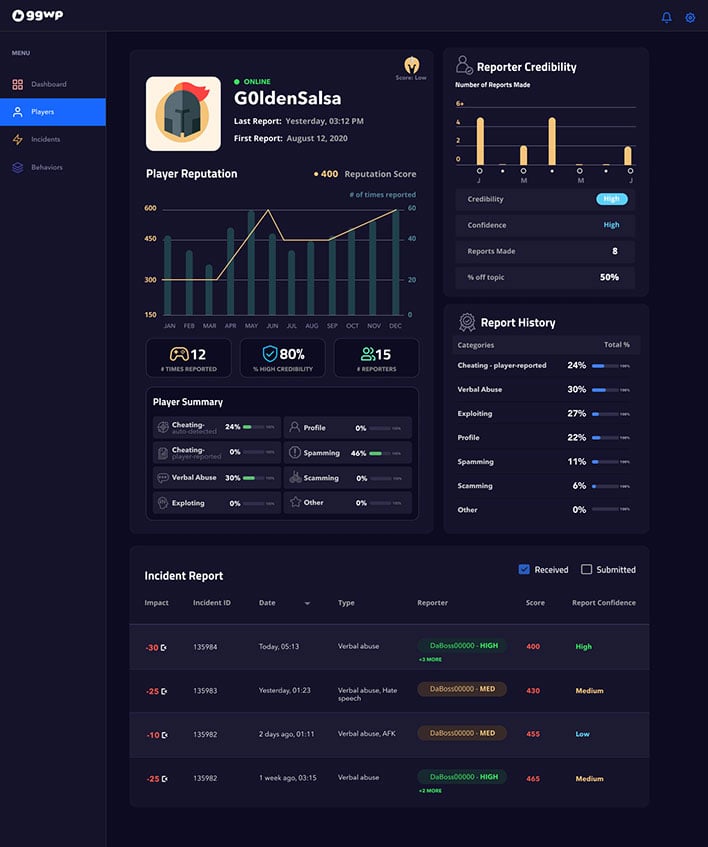

Image of Player Reputation courtesy of GGWP.