Samsung Develops AI Chip With Naver, Claims It's 8x Faster Than NVIDIA's Best Silicon

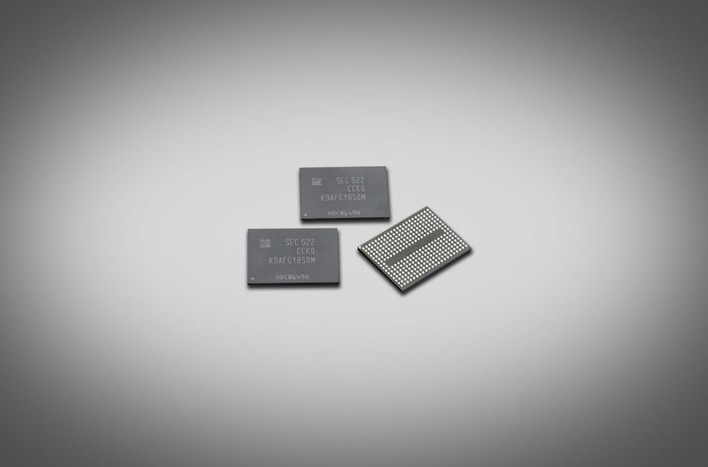

According to the two companies, this type of performance is thanks in part to the way Low-Power Double Data Rate (LPDDR) DRAM is integrated into the chip. However, they offered little detail on how exactly the LPDDR DRAM works within the new chip to produce such a drastic performance improvement.

HyperCLOVA, a hyperscale language model developed by Naver, also plays a key role in these results. Naver states that it is continuing to work on the model, hoping to deliver ever-increasing efficiency by improving its compression algorithms and simplifying the model. HyperCLOVA currently sits at over 200 billion parameters.

Suk Geun Chung, Head of Naver CLOVA CIC, says, “Combining our acquired knowledge and know-how from HyperCLOVA with Samsung’s semiconductor manufacturing prowess, we believe we can create an entirely new class of solutions that can better tackle the challenges of today’s AI technologies.”

It’s not surprising to see another entrant in the AI chip business as NVIDIA continues to rake in money with its AI offerings. Having more options will be a good thing for developers looking to work with AI.