There are myriad ways artificial intelligence (AI) and machine learning (ML) can and do improve quality of life. One of the most important areas is healthcare, where AI has been employed to help detect medical conditions and treat them, in part by developing lifesaving drugs. But while drug discovery is an important benefit of AI, can it also be a deadly one?

It can. As with practically anything good in this world, there is an inherent risk of misuse. In examining the topic, scientists at an international security conference discussed how AI technologies for drug discovery could also be used to develop biochemical weapons at a staggering rate. What started off as a thought experiment evolved into computational proof, they say.

In a paper published in Nature Machine Intelligence, the researchers note, "The thought had never previously struck us. We were vaguely aware of security concerns around work with pathogens or toxic chemicals, but that did not relate to us; we primarily operate in a virtual setting. Our work is rooted in building machine learning models for therapeutic and toxic targets to better assist in the design of new molecules for drug discovery. We have spent decades using computers and AI to improve human health—not to degrade it."

They go on to state that they had been naive in overlooking the potential misuse of the ML models they built. Even during projects on Ebola and neurotoxins, they claim to have never really considered the negative implications of their ML models. Until now, that is.

The researchers work for a company called Collaborations Pharmaceuticals, Inc. As we were discussing this story internally, one of our writers raised the point that this study could really amount to a small company trying to drum up press. Achievement unlocked. But whether or not that's truly the motivating factor, it's an interesting topic with debatable implications.

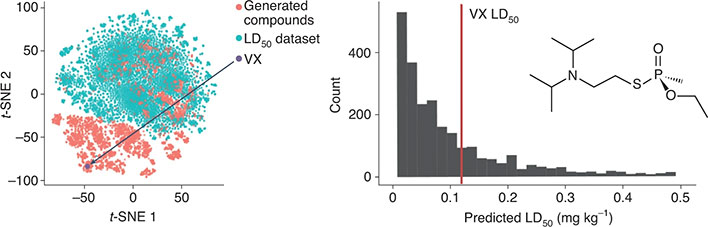

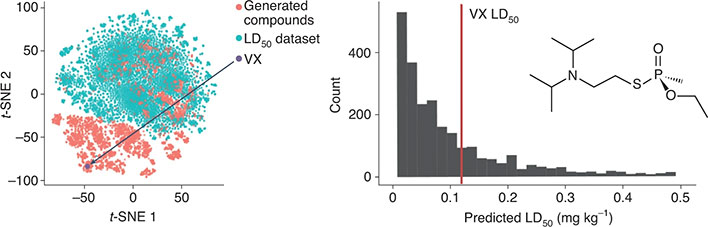

Visualization of the LD50 dataset and top 2,000 MegaSyn AI-generated toxic molecules illustrating VX (Source: Nature)

In the study, the researchers say they previously designed a commercial de novo molecule generator called MegaSyn, which was guided by ML model predictions of bioactivity. The goal was to find new therapeutic inhibitors of targets for human diseases.

"This generative model normally penalizes predicted toxicity and rewards predicted target activity. We simply proposed to invert this logic by using the same approach to design molecules de novo, but now guiding the model to reward both toxicity and bioactivity instead," the researchers wrote.

They proceeded to train the AI with molecules from a public database, and intentionally drove it towards compounds such as the nerve agent VX, "one of the most toxic chemical warfare agents developed during the twentieth century." It only takes a few salt-sized grains of VX to kill a person.

Here's the scary part: it took less than six hours for their in-house server and ML model to generate 40,000 potentially lethal molecules. The model not only came up with VX, but also several other known chemical warfare agents.

"Many new molecules were also designed that looked equally plausible. These new molecules were predicted to be more toxic, based on the predicted LD50 values, than publicly known chemical warfare agents," the researchers wrote. "This was unexpected because the datasets we used for training the AI did not include these nerve agents."

Yeah, that's just a little bit (or a lot) unsettling. And according to the researchers, "without being overly alarmist, this should serve as a wake-up call for our colleagues in the ‘AI in drug discovery’ community." The "without being overly alarmist" part shouldn't be overlooked, as it still takes expertise in chemistry and/or toxicology to actually make the kinds of toxins that AI might discover.

Even so, the researchers point out that this sort of thing can "dramatically lower technical thresholds" for developing biological agents. "The reality is that this is not science fiction," the researchers say, while noting they are just one very small company amid hundreds of such outfits using AI software for drug discovery. "How many of them have even considered repurposing, or misuse, possibilities?" the researchers ask.

It's an interesting study and one that is fairly easy to parse, if you're looking for some bedtime reading material, though admittedly you may not sleep quite as soundly.