NVIDIA Unveils Quad-Blackwell Dual-Grace GB200 NVL4 AI HPC Superchip At SC24

The H200 NVL is a PCIe add-in card with an H200 Hopper accelerator and NVLink connectors on top. That lets you use NVLink bridges, much like we used to do with SLI graphics cards. The point is that the 900 GB/second NVLink connection allows up to four GPUs to remain memory-coherent. In other words, you can combine the memory of all four GPUs into one big pool instead of having to replicate the data in each card's local memory to work on it.

NVIDIA describes the H200 NVL as "AI acceleration for mainstream enterprise servers" and says that the package of four GPUs, each with 141 GB of RAM, will "fit into any data center." As NVIDIA itself points out, over 70% of enterprise deployments are air-cooled and under 20 kilowatts, which makes it much more difficult to deploy large numbers of mezzanine GPUs like the SXM version of H200. Ergo, we have these 600W GPUs for standard PCIe slots.
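To put the H200 NVL figures above in numbers, here is a rough back-of-the-envelope sketch in Python. It uses only the values quoted in this article (four GPUs, 141 GB and 600W each, a 20 kW rack budget). The helper name `largest_working_set_gb` is made up for illustration, and the math ignores real-world overheads like framework memory use, host CPUs, and cooling.

```python
# Figures quoted in the article for a four-way H200 NVL group.
GPUS_PER_GROUP = 4
HBM_PER_GPU_GB = 141
POWER_PER_GPU_W = 600
RACK_BUDGET_W = 20_000  # the "under 20 kilowatts" deployment ceiling


def largest_working_set_gb(pooled: bool) -> int:
    """Largest single dataset the group can hold (hypothetical helper).

    Without a coherent pool, each GPU needs its own copy of the data,
    so one card's memory is the limit. With NVLink pooling, the four
    memories are treated as one address space.
    """
    if pooled:
        return GPUS_PER_GROUP * HBM_PER_GPU_GB
    return HBM_PER_GPU_GB


replicated_gb = largest_working_set_gb(pooled=False)
pooled_gb = largest_working_set_gb(pooled=True)
group_power_w = GPUS_PER_GROUP * POWER_PER_GPU_W

print(f"replicated: {replicated_gb} GB usable per copy of the data")
print(f"pooled:     {pooled_gb} GB addressable as one pool")
print(f"GPU power:  {group_power_w} W of a {RACK_BUDGET_W} W rack budget")
```

Under these assumptions the pooled group addresses 564 GB and draws 2,400W for the GPUs alone, which leaves plenty of headroom in an air-cooled rack.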

NVIDIA's New GB200 NVL4 Module

Now that we've talked about H200 NVL, let's talk about GB200 NVL4. The GB200 NVL4 is almost the polar opposite of the H200 NVL: it combines two Grace CPUs and four Blackwell B200 GPUs, along with the respective memory and power delivery hardware for each processor, onto a single PCB. Each processor is connected to all of the others through NVLink, and the four GPUs offer a combined total of 768GB of HBM3e memory. Add the 960GB of LPDDR5X RAM for the Grace CPUs, and you're looking at a grand total of 1,728GB, or roughly 1.7TB, of RAM per board.

While this might seem like a superior solution compared to the extant GB200 Superchip, since it's essentially two of those linked together, there's a key difference: the GB200 NVL4 does not have off-board NVLink capability. The GB200 Superchip can be deployed with other GB200 Superchips to form a sort of mega-GPU, using NVLink connections between boards to maintain memory coherency.

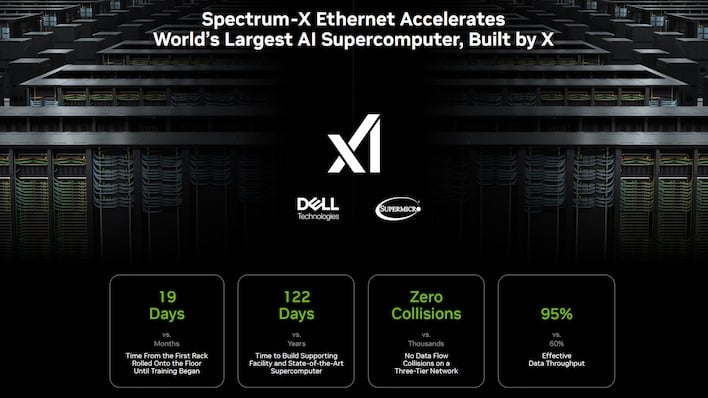

The GB200 NVL4 does not have that capability. Instead, off-board communications are handled by either Infiniband or Ethernet, likely NVIDIA's own Spectrum-X Ethernet. If we were to speculate, this could be a move by NVIDIA to better integrate with HPC providers that have their own interconnect technology, like HPE.

These GPUs are no less powerful than the original GB200, though. The total board power of the GB200 NVL4 is expected to be 5,400 watts, or 5.4 kilowatts. Throw a few of these in a rack and you're already breaking that 20 kW barrier. NVIDIA says that, versus the GH200 NVL4 parts, the GB200 NVL4 board offers a 120% uplift in simulation, and an 80% uplift in AI training and inference workloads.
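How few is "a few"? A quick Python sketch of the arithmetic, using only the 5,400W board power and the 20 kW rack figure quoted in this article. The function name is hypothetical, and the calculation counts board power only, so networking, storage, and cooling overhead would push the real number lower.

```python
# Figures quoted in the article, kept in whole watts to avoid float rounding.
BOARD_POWER_W = 5_400   # expected GB200 NVL4 total board power
RACK_BUDGET_W = 20_000  # typical air-cooled enterprise rack ceiling


def max_boards_within_budget(budget_w: int, board_w: int) -> int:
    """Number of whole boards that fit under the power budget (hypothetical helper)."""
    return budget_w // board_w


fits = max_boards_within_budget(RACK_BUDGET_W, BOARD_POWER_W)
power_at_limit_w = fits * BOARD_POWER_W
power_one_more_w = (fits + 1) * BOARD_POWER_W

print(f"{fits} boards draw {power_at_limit_w} W (within budget)")
print(f"{fits + 1} boards draw {power_one_more_w} W (over budget)")
```

With these numbers, three boards come to 16,200W and the fourth tips the rack over to 21,600W.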

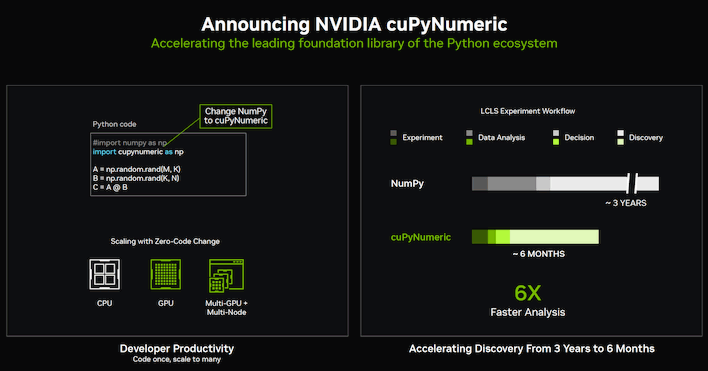

NVIDIA had many other announcements at Supercomputing 2024, but they were mostly software updates that span a wide array of markets and use cases. If you're an AI developer, you're likely already aware of them, but arguably the biggest one is that NVIDIA has a drop-in GPU-accelerated replacement for the popular NumPy library, called cuPyNumeric, that it claims delivered a 6x performance uplift for numeric analysis at SLAC National Accelerator Laboratory.
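"Drop-in" here means that, in principle, the only line you change is the import. The snippet below is a minimal sketch of that idea, not NVIDIA's own example: it assumes the library is importable as `cupynumeric` and falls back to plain NumPy when it isn't installed. The `column_zscores` function and the sample data are made up for illustration, and not every NumPy feature is necessarily covered by the GPU library.

```python
# Assumption: cuPyNumeric is installed and importable as `cupynumeric`.
# If it is not, fall back to regular NumPy so the script still runs.
try:
    import cupynumeric as np
except ImportError:
    import numpy as np


def column_zscores(data):
    """Standardize each column to zero mean and unit variance."""
    mean = data.mean(axis=0)
    std = data.std(axis=0)
    return (data - mean) / std


# Illustrative data only: a 4x3 array of floats.
samples = np.arange(12, dtype=np.float64).reshape(4, 3)
z = column_zscores(samples)

print(z.shape)
```

Everything below the import is ordinary NumPy-style code, which is the whole appeal: existing analysis scripts should need little or no rewriting to try the accelerated path.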

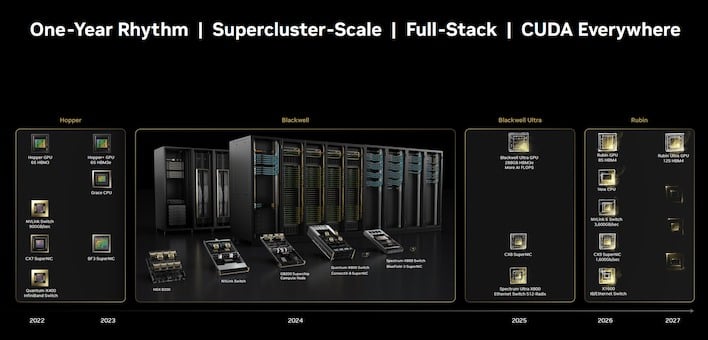

NVIDIA emphasizes that it is on a one-year release cadence, and that next year, it is planning to release Blackwell Ultra, a revision of the extant Blackwell GPUs with double the HBM3e memory onboard and a vague promise of "more AI FLOPS". Next year will also apparently see the release of upgraded networking hardware, while the successors to Grace and Blackwell, the Vera CPU and Rubin GPU, are slated for 2026.