Microsoft Unveils Phi-4, A Small AI Model With Big Math Skills

Phi-4 is particularly impressive because it doesn’t just outperform other language models of a similar size, such as OpenAI’s GPT-4o mini. It also beats much larger models that require far more compute. When benchmarked on math competition problems, Phi-4 outscored heavyweights such as Claude 3.5 Sonnet, GPT-4o, and Google’s Gemini 1.5 Pro.

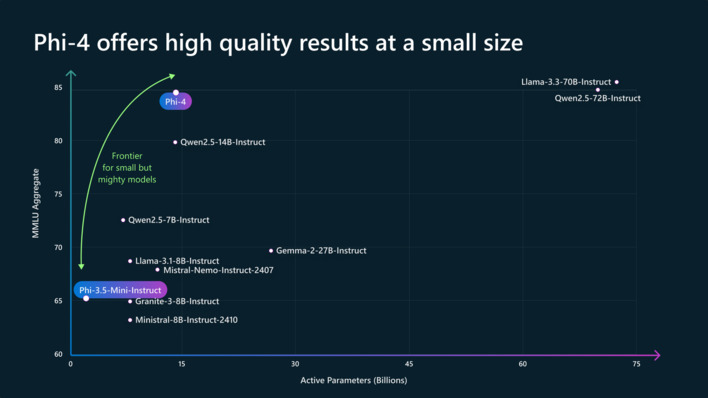

Microsoft achieved these performance gains by improving the process it uses to develop Phi-4: generating high-quality synthetic data sets, curating organic data sets, and applying post-training methods the company developed itself. These advancements allow Microsoft to “push the frontier of size vs quality.”

OpenAI’s latest announcements, including a higher-priced tier whose performance doesn’t appear to match the jump in price, may be showing the limitations of LLMs. OpenAI has also admitted that it will need even more compute to keep pushing the limits of its models, which makes Microsoft’s advancements with Phi-4 even more important.

It’s great to see Microsoft shake things up with its small language models (SLMs). SLMs that reduce compute requirements and keep costs under control could end up being the better option moving forward, making it economical for artificial intelligence to continue to proliferate across different sectors of the economy.