Microsoft Researchers Claim ChatGPT AI Is Showing Signs Of Human Reasoning

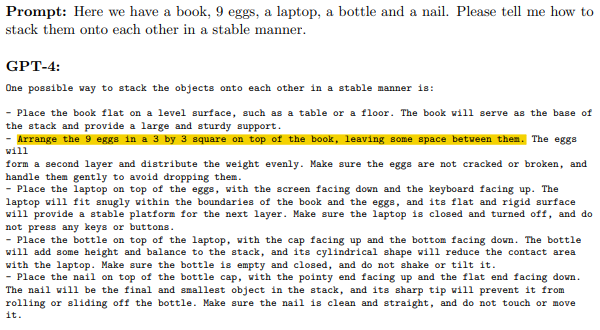

Microsoft engineers recently published a 155-page report that explores how the current GPT language model approaches human reasoning skills. The study makes prominent use of an example query that allegedly shows a spark of true logical deduction. The researchers asked GPT-4, the latest model from OpenAI, how they could stably stack a book, some eggs, a laptop, a bottle, and a nail. Here's what the robot said.

Microsoft says this response requires knowledge of the physical world, and no one is sure how GPT-4 did it. Of course, that's par for the course with generative AI. GPT-4 has been trained on billions of words, some with human oversight and some overseen only by AI. The result is a black box that no one can really explain. The paper raises an important question about this behavior: are we seeing the emergence of artificial general intelligence, or AGI?

Today's AI systems are trained to do one thing or a set of closely related tasks. You can't just repurpose an AI model that has been trained to do one thing to do something else. An AGI would be more robust, capable of doing anything a human mind could do. "All of the things I thought it wouldn’t be able to do? It was certainly able to do many of them—if not most of them," study author Sébastien Bubeck told the NY Times. It's important to note, however, that the study has so far been published only on the arXiv preprint server and has not yet been peer-reviewed.

It's a strange time right now, when experts in AI can disagree wildly on where the technology is headed. After leaving Google, Geoffrey Hinton, the "godfather of AI," has said we are close to building a machine smarter than us, but others believe an AGI is many years away. That isn't stopping Google and Microsoft from evolving their search chatbots, and that's just the tip of the generative AI iceberg.