Intel Open Sources Its AI Playground, Here's What It Does

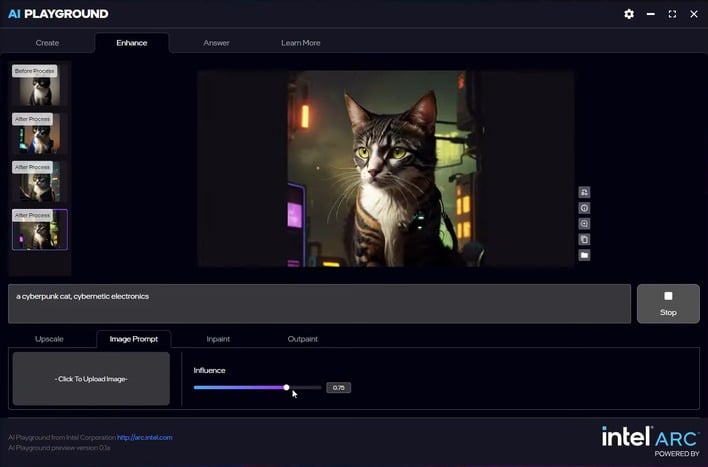

We've reported on it at length before, but if you're new to the app, AI Playground is a slick little Electron-based front-end that makes it dead simple to work with both text-oriented AI models and image generators, all running locally. It's not as powerful as AUTOMATIC1111 or ComfyUI, but it's much more intuitive and nicer-looking. The app supports everything from language models like Llama, Mistral, and Qwen2 to image diffusion models like Stable Diffusion 1.5, SDXL, and Flux.1-Schnell. You can load local models, tweak prompts and parameters, and even bring in your own files to edit or augment them.

Under the hood, the software plays nicely with Safetensors-format PyTorch LLMs, covering models like DeepSeek R1, Phi-3, and Qwen2, as well as GGUF-formatted models like Llama 3.1 and 3.2. On the OpenVINO side, it supports more compact models like TinyLlama, Mistral 7B, and the Phi-3 mini variants. It's a solid spread for anyone interested in experimenting with local AI workloads without having to set up a bunch of different tools and scripts, although the specific models you can actually run will depend on how much VRAM you have.

Currently, AI Playground is restricted to Intel hardware: it relies on Intel Arc GPUs and Arc-branded integrated graphics to do the heavy lifting. That's a considerable limitation right now, but since the code is now fully open-source, there's nothing stopping an ambitious developer from adapting it to run on other hardware. If that happens, it could make an already well-regarded local AI interface even more accessible. If you're keen to start fiddling with AI Playground as a programmer or a user, grab it from Intel's GitHub page.