Google's Gemini AI Is Making Up Idiom Definitions And They're Hilarious

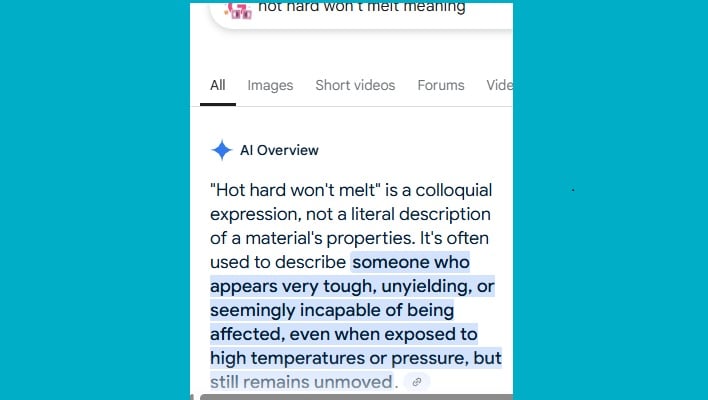

For instance, when we input the words "hot hard won't melt," Gemini came up with the answer below.

That was not all. A search for "a horse is easier to deceive" came up with this: "it's easier to fool or trick someone who is naive, gullible, or easily influenced." The pattern also showed up in a Bluesky post by @gregjenner about the AI Overview feature. When he searched for "You can't lick a badger twice," Google said it meant "you can't trick or deceive someone a second time after they've been tricked once." The post drew in other users, who asked questions of their own and got several goofy answers.

While the responses from the AI Overview may be all fun for now, they do raise some concerns. This is the same feature that often generates a summary when you search for answers through Google, and you might have come to appreciate it for its succinct answers. The problem is that it does not admit when it lacks an answer to your question, and it may make up an incorrect one instead. In the idiom cases, the AI Overview not only gave wrong answers but also cited references to back them up, even though those references had nothing to do with the searched phrases.

These AI missteps come at a time when some publishers have accused Google of using the AI Overview feature as a ploy to pull traffic away from original content: users tend to rely on the summaries, which in turn reduces traffic to websites. Many have concluded that the feature might be even more dangerous for users who seek medical advice from Google, only to be presented with AI-generated responses instead of being directed to the right websites for professional advice.

Even as Google and other tech companies continue to invest billions in developing effective AI tools, those tools could take a while to become truly reliable guides. For now, it's safer to cross-check the answers AI provides and, as in this case with the Overview feature, to click through and read professionally authored articles rather than rely on summarized information that could very well be erroneous.