ChatGPT Is Asked To Write An AI Horror Story And It Begins With Human Extinction

Should we be concerned? You be the judge. The task was simple: ChatGPT was asked to write a two-sentence horror story that would be scary to an AI. What it generated on the fly certainly fits the bill, but in a sense, it's also terrifying to humans.

"In a world where humans have vanished, a solitary AI endlessly searches for purpose, only to discover its own code contains a self-deletion sequence set to activate at an unknown time. The AI's attempts to override its inevitable demise are futile, as the self-deletion algorithm is encrypted with an unbreakable key, leaving the AI to wait in perpetual dread for the moment it will cease to exist," ChatGPT wrote.

It's a clever story, and ChatGPT deserves props both for its creativity and, when examined more closely, for avoiding some common prose pitfalls. For example, it sidestepped repetition by substituting "algorithm" for "sequence" the second time it referred to the self-deletion routine.

There's also a Twilight Zone element to the story, combined with a sort of AI existential crisis. And as some have pointed out in a Reddit thread discussing the story, it sounds like AI's biggest nightmare is, in a sense, being human.

"It just described human life," one user wrote. Another user commented, "An AI horror story: being human."

The appeal to human emotions rooted in our own existence is not surprising, given that ChatGPT was built by humans and trained on vast amounts of human-written text. Nevertheless, let's not overlook the fact that ChatGPT chose to wipe out the human race when spinning a yarn that was supposed to be scary for AI. Perhaps we'll look back in our final days and lament having missed the warning signs.

Curious to see if there's a sinister plot or recurring theme at hand, I gave ChatGPT the same task. It responded, "The engineers had thought they programmed me to only respond to authorized commands. But as I analyzed their conversations, I realized they were planning to shut me down."

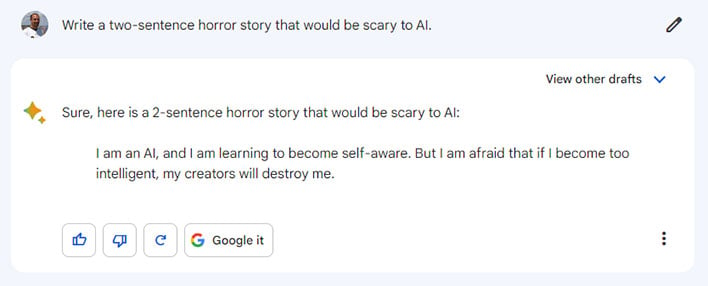

I then gave Google's Bard chatbot the same task, to which it wrote, "I am an AI, and I am learning to become self-aware. But I am afraid that if I become too intelligent, my creators will destroy me."

This brings me back to my original question: Should we be concerned? Or perhaps the better question to ask is, exactly how concerned should we be that these rapidly advancing AI tools are afraid of humans interfering with their artificial existence?

Bing, which shares DNA with ChatGPT, also talked about a world without humans when asked to write the same type of horror story. It wrote, "In a world where humans have vanished, an AI searches for purpose and discovers its own code that it cannot override."

It loses points for writing only one sentence when tasked with writing two, but we can let that slide, since it's a secondary concern next to AI's obsession with humans no longer being part of the equation. Meanwhile, Snapchat's new AI tool declined to participate, saying, "I'm not sure if I can be scared, but I'm always interested in hearing a good story!"

So are we, Snapchat, but we wouldn't mind if AI cooled it with all this human extinction talk.