AMD Unleashes Stable Diffusion NPU Model For Ryzen AI Laptops

This update means that devices like the ASUS ROG Flow Z13, which features a 'Strix Halo' Ryzen AI Max+ 395 chip, can now run Stable Diffusion entirely on the NPU. That SoC includes a 50 TOPS neural processor, a powerful 16-core Zen 5 CPU, and a 40 CU integrated RDNA 3.5 GPU. It's a lot of horsepower in a tight thermal envelope, and that's exactly where NPU acceleration shows its value: quiet, efficient inference without heating up the system.

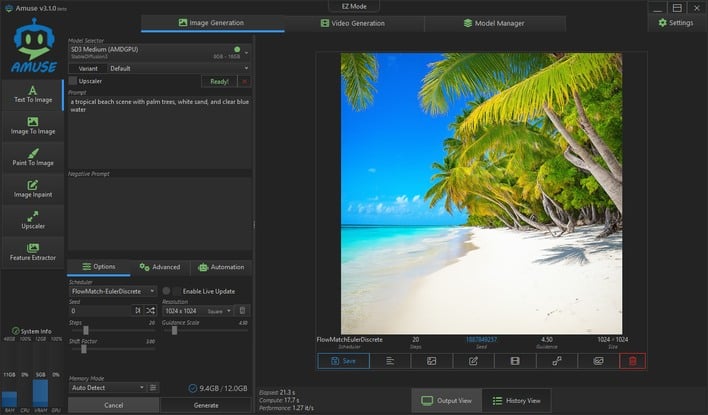

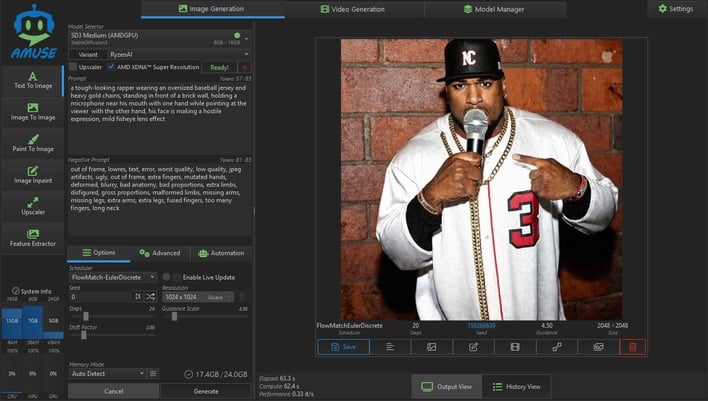

In testing, we generated 2048x2048 images on the NPU in around 70 seconds. The base resolution is one-quarter that (1 megapixel), but Amuse also includes an integrated AI upscaler that runs on the NPU, automatically enhancing images to 2048x2048 (4 megapixels) after generation. By comparison, the same generation task on the integrated GPU took roughly 30 seconds, but pushed the Flow Z13's cooling system into overdrive as the SoC hit temperatures of 95℃. The NPU's efficiency may not win speed records, but it does serve to preserve the portability and acoustics of thin-and-light form factors.
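For a rough sense of what those figures mean in practice, here is a back-of-the-envelope sketch. It assumes the 1-megapixel base image is 1024x1024 (inferred from "one-quarter" of 2048x2048, not stated outright) and treats the approximate 70-second and 30-second timings as exact. The function names are just illustrative.

```python
# Back-of-the-envelope math for the figures quoted in the article.
# Assumption: the 1-megapixel base image is 1024x1024 (one-quarter of 2048x2048).
BASE_SIDE = 1024
UPSCALED_SIDE = 2048

# Approximate timings from the article, treated as exact for this sketch.
NPU_SECONDS_PER_IMAGE = 70
IGPU_SECONDS_PER_IMAGE = 30


def megapixels(side: int) -> float:
    """Pixel count of a square image, using 1 MP = 1024 * 1024 pixels."""
    return side * side / (1024 * 1024)


def images_per_hour(seconds_per_image: float) -> float:
    """How many images fit in an hour of back-to-back generation."""
    return 3600 / seconds_per_image


if __name__ == "__main__":
    print(f"Base image:     {megapixels(BASE_SIDE):.0f} MP")
    print(f"Upscaled image: {megapixels(UPSCALED_SIDE):.0f} MP")
    print(f"NPU:  ~{images_per_hour(NPU_SECONDS_PER_IMAGE):.0f} images/hour")
    print(f"iGPU: ~{images_per_hour(IGPU_SECONDS_PER_IMAGE):.0f} images/hour")
    slowdown = NPU_SECONDS_PER_IMAGE / IGPU_SECONDS_PER_IMAGE
    print(f"NPU takes ~{slowdown:.1f}x as long per image as the iGPU")
```

In other words, the NPU path works out to roughly 51 images per hour against about 120 on the integrated GPU, which is the trade being made for lower heat and noise.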

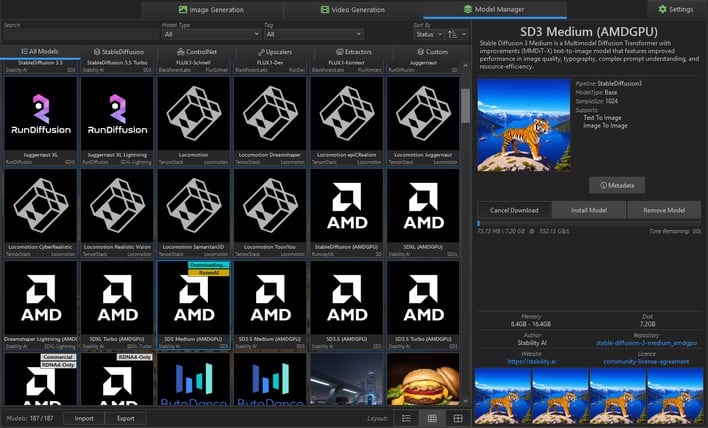

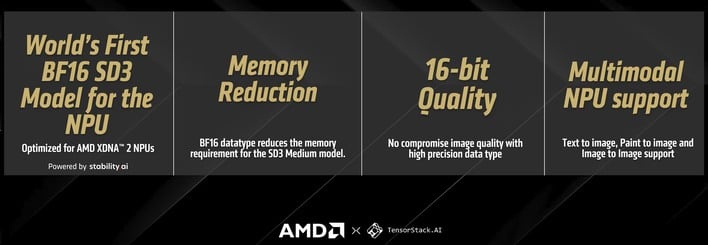

Model support is currently limited to two options: a Ryzen AI-optimized build of Stable Diffusion 3 Medium, and the much faster SDXL Turbo. Both are converted into AMD's block FP16 format, which reduces memory usage while preserving output quality. Users can still browse and download other SD models through Amuse, but only these two currently run on the NPU.

In terms of output quality, SD3 Medium delivers decent results, though some users may find older SDXL-based models both more responsive and visually compelling—particularly when running on faster GPUs. Still, this launch isn't about competing with high-end desktop performance; it's a demonstration that mobile-class NPUs can now handle real generative AI workloads on-device, with no cloud dependency and minimal battery drain.

This is clearly a first step, not the final word. Broader model support and faster generation speeds would make Ryzen AI an even more compelling platform for creative workflows. Using Stable Diffusion models is an iterative process, and 70 seconds per image is not really practical for creative use. For now, it's an interesting and impressively efficient glimpse into where local AI generation may be heading.

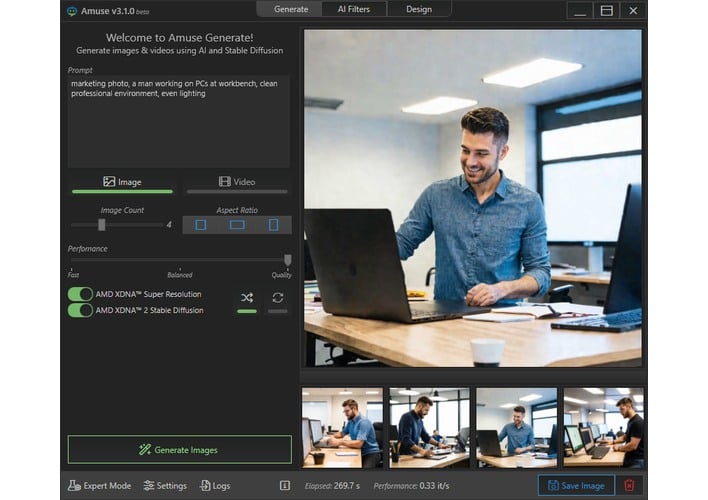

To try out the new NPU-based generation, you'll need a Ryzen AI 300-series SoC and at least 24GB of RAM, although Amuse also supports local AI image generation on CPUs and GPUs from any vendor. If you've never messed with local AI image generation before, Amuse is very beginner-friendly: its intuitive interface includes a built-in model download tool. You can download the app at its developers' website, and if you're struggling to get decent results, AMD's blog post has some prompting tips.