Even Google Is Warning Its Own Employees About The Use Of AI Chatbots

Earlier this week, reports made the rounds that Alphabet was warning its employees about AI chatbots. The Mountain View-based company has reportedly begun cautioning employees against entering confidential information into AI chatbots, in line with its policies on protecting company information. This sort of warning should not come as a surprise, as researchers have found ways to extract information from the training datasets used for large language models like ChatGPT.

For example, some of this research cites Gmail’s AI-powered email completion, because the model is trained on private communications between people. If an attacker managed to extract information from this training set through the AI tool, it would compromise the confidentiality of that information. Beyond confidential information, per a Reuters report, Alphabet has also warned its engineers not to use code from a chatbot, or at least not directly.

Beyond Alphabet and Google, this could be taken as a warning to the general public. We know that chatbots can be confidently wrong and can regurgitate incorrect information, including faulty code samples. Thus, while these tools may be useful in many circumstances, they should serve as a starting point rather than a full replacement for doing the task yourself.

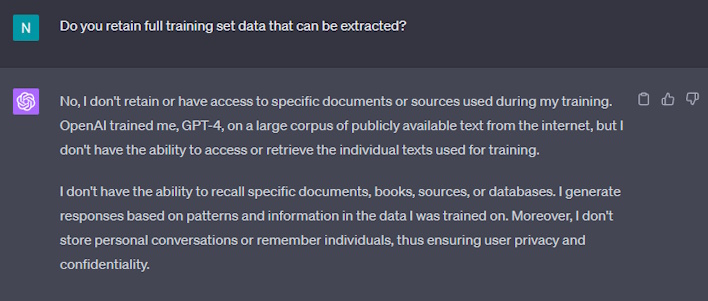

At the end of the day, though, chatbots remain something of a black box and an unknown factor when it comes to data confidentiality and integrity. It would therefore be wise to treat them as such and refrain from adopting new workflows and features simply because others are doing so.