Users Freak Out As Slack Is Caught Snooping Messages To Train Its AI

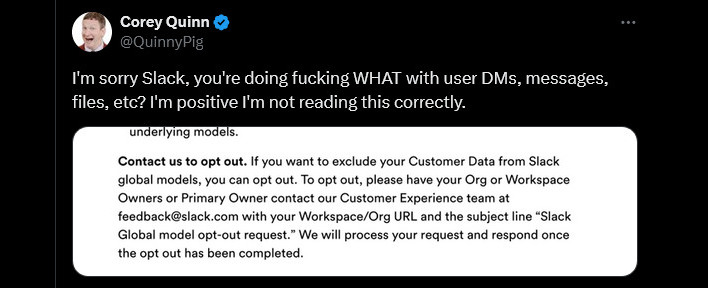

Slack, bought by Salesforce in 2021, advertises itself as an AI-powered platform for work that brings all of a user's conversations, apps, and customers together in one place. However, it turns out those AI-powered features also need access to a wide range of user data, as Corey Quinn pointed out on X/Twitter recently.

Quinn shared a screenshot of part of Slack's data management policy, which, as another user noted, comes specifically from the section titled "Privacy principles: search, learning and artificial intelligence."

After Quinn posted his tweet, others began chiming in. X user @kepano remarked, "it is an inevitability if you don't have sovereignty over your data." Steven Tey added, "This is wild, thanks for sharing! Still baffled that they would require you to send an email to opt-out of selling your data."

Slack also weighed in on the X thread, writing in part, "Hello from Slack! To clarify, Slack has platform-level machine-learning models for things like channel and emoji recommendations and search results. And yes, customers can exclude their data from helping train those (non-generative) ML models. Customer data belongs to the customer." The reply adds that Slack "does not build or train models in such a way that could learn, memorize, or be able to reproduce some part of customer data."

Much of the ire centers on Slack opting users in automatically and then requiring them to email Slack HQ to opt out. X user @xkarasy highlighted this when she posted, "Instead of asking customers to email to opt-out, the default should have been opt-out. Then, ask the customer to email to opt-in."

As others have pointed out in recent days, Slack's policies are misleading at best, since they state that users' data is safe from AI training. Anyone who would like to read more about Slack AI can do so here.

**Update 5/20/2024 4:45pm EST:** A Salesforce spokesperson reached out to HotHardware with the following statement:

Some Slack community members have asked for more clarity regarding our privacy principles. We’ve updated those principles to better explain the relationship between customer data and generative AI in Slack, and published a blog post to provide more context.

To clarify:

- Slack has industry-standard platform-level machine learning models to make the product experience better for customers, like channel and emoji recommendations and search results. These models do not access original message content in DMs, private channels, or public channels to make these suggestions. And we do not build or train these models in such a way that they could learn, memorize, or be able to reproduce customer data.

- We do not develop LLMs or other generative models using customer data.

- Slack uses generative AI in its Slack AI product offering, leveraging third-party LLMs. No customer data is used to train third-party LLMs.

- Slack AI uses off-the-shelf LLMs where the models don't retain customer data. Additionally, because Slack AI hosts these models on its own AWS infrastructure, customer data never leaves Slack's trust boundary, and the providers of the LLM never have any access to the customer data.

Note that we have not changed our policies or practices – this is simply an update to the language to make it more clear. For full details on our updated AI privacy principles, see:

https://slack.com/trust/data-