NVIDIA Unveils Tesla P4 And P40 Deep Learning Accelerators, Mini DRIVE PX 2 For Autonomous Vehicles

These GPUs feature specialized inference instructions based on 8-bit (INT8) operations, delivering 45x faster response than CPUs and a 4x improvement over GPU solutions launched less than a year ago, NVIDIA says. The servers these GPUs end up in will power modern artificial intelligence services, such as voice-activated assistance, email spam filters, and movie and product recommendation engines. According to NVIDIA, these types of applications require 10x more compute power than neural networks from last year.

"Current CPU-based technology isn't capable of delivering real-time responsiveness required for modern AI services, leading to poor user experiences," NVIDIA says.

The Tesla P4 is a low-profile PCI Express graphics card that can fit in virtually any server. Power consumption starts at just 50W, making it 40x more energy efficient than CPUs for inferencing in production workloads. It sports 5.5 teraFLOPS of single-precision performance and 22 TOPS (trillion operations per second) of INT8 throughput, and has 8GB of onboard GDDR5 memory with 192GB/s of memory bandwidth.
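For a rough sense of what those P4 numbers imply, the quoted figures can be turned into a couple of ratios. The sketch below is a back-of-the-envelope calculation that uses only the specs cited above. The function names are made up for illustration, and it assumes the quoted 50W is the card's total power draw.

```python
# Tesla P4 figures as quoted in the article (NVIDIA's claims).
P4_FP32_TFLOPS = 5.5   # single-precision throughput
P4_INT8_TOPS = 22.0    # INT8 throughput
P4_POWER_WATTS = 50.0  # assumption: treat the quoted 50W as total board power


def int8_speedup(int8_tops: float, fp32_tflops: float) -> float:
    """Ratio of INT8 throughput to single-precision throughput."""
    return int8_tops / fp32_tflops


def tops_per_watt(int8_tops: float, watts: float) -> float:
    """INT8 throughput delivered per watt of power."""
    return int8_tops / watts


if __name__ == "__main__":
    print(f"INT8 vs FP32: {int8_speedup(P4_INT8_TOPS, P4_FP32_TFLOPS):.1f}x")
    print(f"Efficiency:   {tops_per_watt(P4_INT8_TOPS, P4_POWER_WATTS):.2f} TOPS/W")
```

On these numbers, INT8 runs at four times the single-precision rate, and the card works out to 0.44 INT8 TOPS per watt.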

"A single server with a single Tesla P4 replaces 13 CPU-only servers for video inferencing workloads, delivering over 8x savings in total cost of ownership, including server and power costs," NVIDIA boasts.

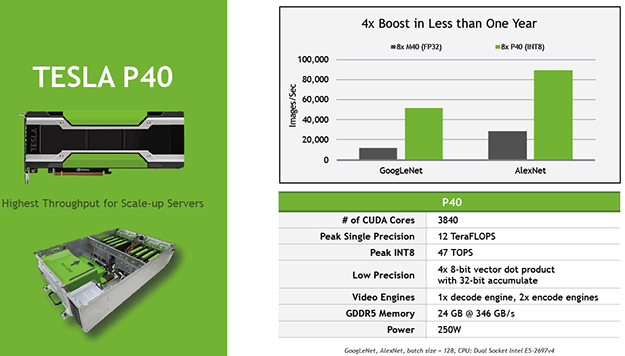

The Tesla P40 is a bigger, meatier solution. This full-height, dual-slot graphics accelerator delivers 12 teraFLOPS of single-precision performance and 47 TOPS of INT8 operations. It also triples the onboard memory to 24GB, with 346GB/s of memory bandwidth. Part of NVIDIA's sales pitch is that if you slap eight of these things in a server, you can replace the performance of more than 140 CPU servers. If you figure a CPU server costs $5,000, you're looking at significant savings of $640,000 in server acquisition cost.
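The consolidation math is easy to check. The sketch below simply multiplies out the article's figures; the function names are hypothetical, and the "implied" GPU server cost is an inference from the quoted savings, not a price NVIDIA has published.

```python
# Figures quoted in the article (NVIDIA's claims, not independent measurements).
CPU_SERVERS_REPLACED = 140    # "more than 140 CPU servers"
CPU_SERVER_COST_USD = 5_000   # the article's assumed price per CPU server
QUOTED_SAVINGS_USD = 640_000  # savings figure quoted in the article


def gross_cpu_cost(servers: int, unit_cost: int) -> int:
    """Total acquisition cost of the CPU-only servers being replaced."""
    return servers * unit_cost


def implied_gpu_server_cost(servers: int, unit_cost: int, savings: int) -> int:
    """Cost the GPU server would need to carry for the quoted savings to hold.

    Assumption: savings = CPU server cost - GPU server cost, nothing else.
    """
    return gross_cpu_cost(servers, unit_cost) - savings


if __name__ == "__main__":
    total = gross_cpu_cost(CPU_SERVERS_REPLACED, CPU_SERVER_COST_USD)
    implied = implied_gpu_server_cost(
        CPU_SERVERS_REPLACED, CPU_SERVER_COST_USD, QUOTED_SAVINGS_USD
    )
    print(f"CPU servers replaced cost: ${total:,}")
    print(f"Implied GPU server cost:   ${implied:,}")
```

With the article's figures, the 140 CPU servers come to $700,000, so the quoted $640,000 in savings leaves $60,000 as the implied budget for the eight-GPU server. That last number falls out of the arithmetic alone and should not be read as actual pricing.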

"With the Tesla P100 and now Tesla P4 and P40, NVIDIA offers the only end-to-end deep learning platform for the data center, unlocking the enormous power of AI for a broad range of industries," said Ian Buck, general manager of accelerated computing at NVIDIA. "They slash training time from days to hours. They enable insight to be extracted instantly. And they produce real-time responses for consumers from AI-powered services."

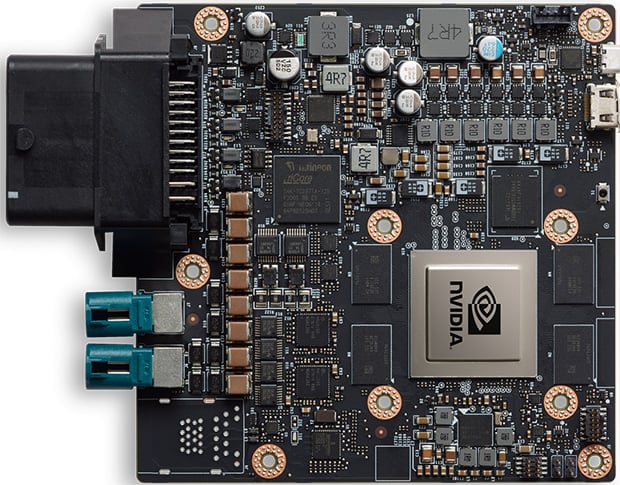

In addition to a pair of new Tesla accelerators, NVIDIA unveiled a smaller version of the DRIVE PX 2, a palm-sized, energy-efficient AI platform that automakers can use to power automated and self-driving vehicles, handling both driving and HD mapping.

The DRIVE PX 2 features NVIDIA's newest system-on-chip (SoC) with Pascal-based graphics. It can process inputs from multiple cameras, plus lidar, radar, and ultrasonic sensors. Power consumption is rated at just 10W.

"Bringing an AI computer to the car in a small, efficient form factor is the goal of many automakers," said Rob Csongor, vice president and general manager of Automotive at NVIDIA. "NVIDIA DRIVE PX 2 in the car solves this challenge for our OEM and tier 1 partners, and complements our data center solution for mapping and training."

The DRIVE PX 2 will be available to production partners in the fourth quarter of 2016. As for NVIDIA's new Tesla parts, the Tesla P40 will be available in October and the Tesla P4 in November. No word yet on pricing.