Meta's Ray-Ban Smart Glasses Gain AI To See And Identify Everything You See

Meta chief technology officer Andrew "Boz" Bosworth dropped a video post on Instagram lauding a new multimodal AI upgrade to the company's second-generation Ray-Ban smart glasses. The new Meta AI is slated for public launch in 2024, but that hasn't stopped Boz from raving about the AI assistant's ability to help you find and use information about what you see through the glasses.

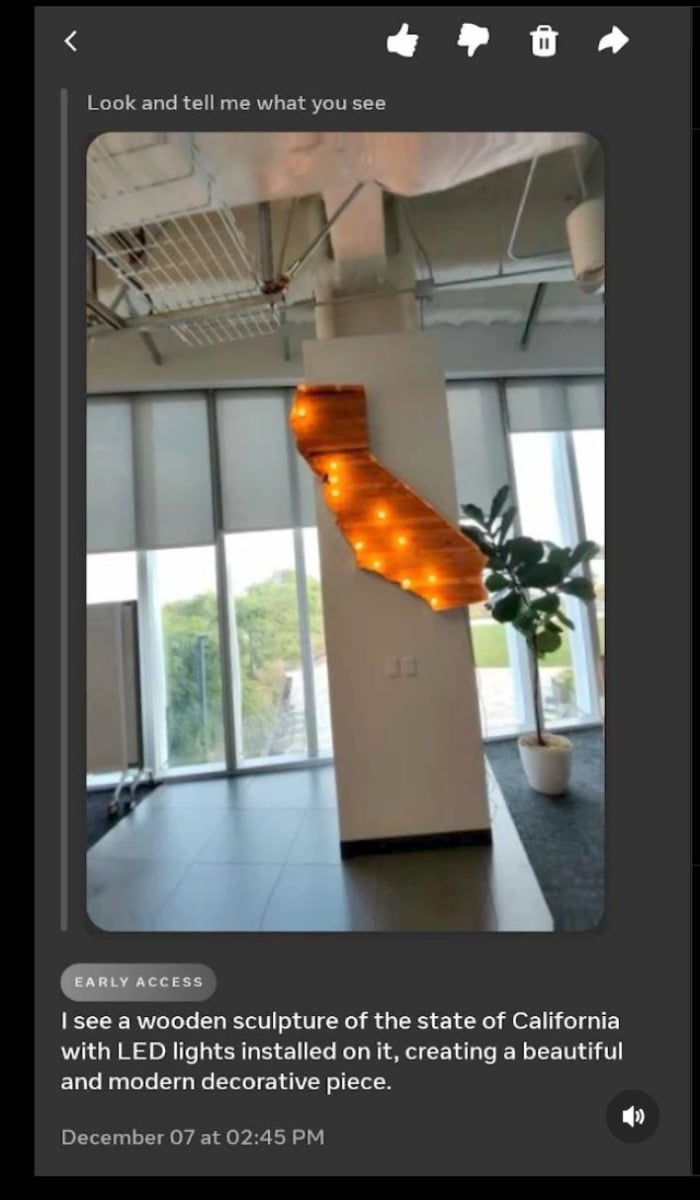

In the post, Boz shows off a video of himself looking at a piece of wall art with what could be the AI UI (say that five times really fast). The Meta AI responded to Boz's prompt, "Look and tell me what you see," by describing the wall art as "a wooden sculpture of the state of California with LED lights installed on it." Going one step further, the AI describes the piece as "beautiful." It probably wouldn't say the same if it were a picture of yours truly.

At the moment, these glasses are operated entirely by voice, starting with the hotword "Hey Meta" followed by an open-ended question or command. This is similar to using a standard Amazon Alexa or Google Assistant device, but with a more open and fluid conversation pattern. The glasses then combine what they see through the onboard cameras with the spoken command to produce an AI-generated response or action.