Intel's Loihi 2 Processor Gets Even Faster At Computing AI Workloads Like A Human Brain

The idea of neuromorphic computing isn't new. The notion of a computer that "works like a brain" appeared in science fiction early in the last century, and the field of neuromorphic engineering as we know it dates back to the 1980s. Still, it wasn't until 2006 that someone tried to actually create a neuromorphic processor, and it wasn't until IBM's TrueNorth in 2014 that anyone succeeded in scaling such a chip out to anything approaching usable densities.

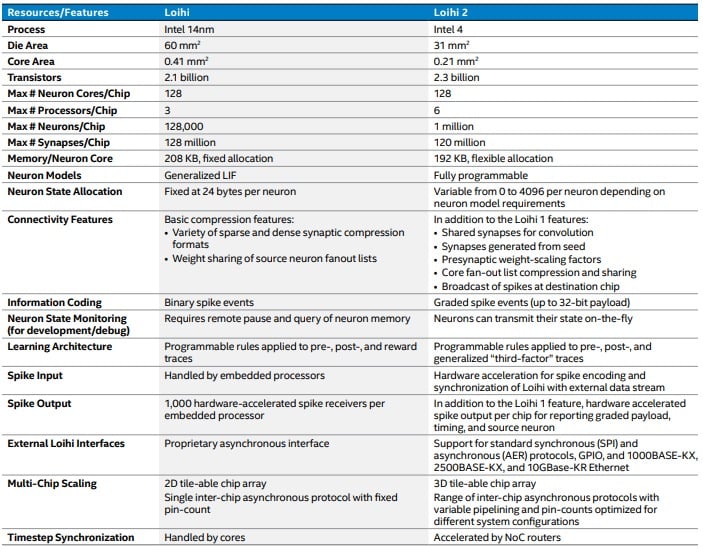

The first-generation Loihi processor was actually the fifth-generation neuromorphic chip designed by Intel Labs, Intel's research arm. It was initially produced in small quantities on Intel's 14nm process and offered to researchers in packages as small as a two-chip USB stick. Eventually, Intel scaled Loihi 1 out to 768-chip systems. Each chip contains a trio of low-power Lakemont x86 cores and 128 "neuron cores". Together those cores represent 131,072 artificial neurons (1,024 per core), with 208 KB of memory in each core, all connected by some 128 million artificial synapses.
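As a quick sanity check on these numbers, the snippet below reads the 131,072-neuron figure as a per-chip total (1,024 neurons in each of the 128 neuron cores) and multiplies it out to a 768-chip system. The constant and function names are our own; this is plain arithmetic on the figures quoted above, not anything drawn from Intel's tools.

```python
# Hypothetical back-of-the-envelope calculation using the figures quoted above.
NEURON_CORES_PER_CHIP = 128
NEURONS_PER_CORE = 1_024   # reading 131,072 as a per-chip total: 131,072 / 128
CHIPS_PER_SYSTEM = 768


def neurons_per_chip(cores: int = NEURON_CORES_PER_CHIP,
                     per_core: int = NEURONS_PER_CORE) -> int:
    """Total artificial neurons on one Loihi 1 chip."""
    return cores * per_core


def neurons_per_system(chips: int = CHIPS_PER_SYSTEM) -> int:
    """Total artificial neurons across a multi-chip system."""
    return chips * neurons_per_chip()


print(f"{neurons_per_chip():,} neurons per chip")
print(f"{neurons_per_system():,} neurons per 768-chip system")
```

Running it prints 131,072 neurons per chip and 100,663,296 neurons for the full 768-chip configuration, or roughly 100 million neurons.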

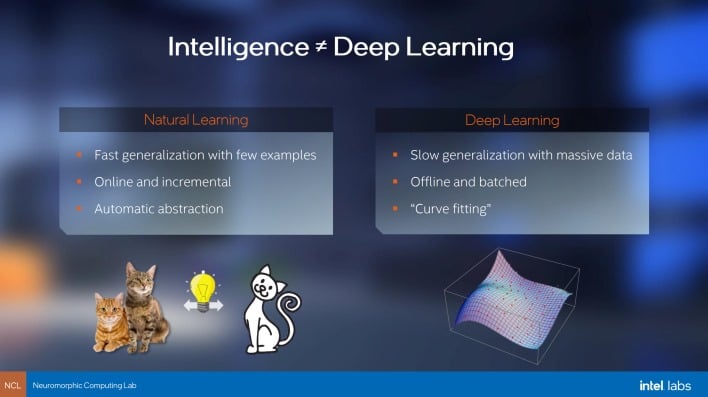

It's a fundamentally different approach to computing than the one we're used to, and one that much more closely resembles how the brain works. That similarity can give neuromorphic computers a tremendous advantage in certain types of workloads, much as (though in a wholly unrelated way) quantum computing can make quick work of otherwise-impossible computations.

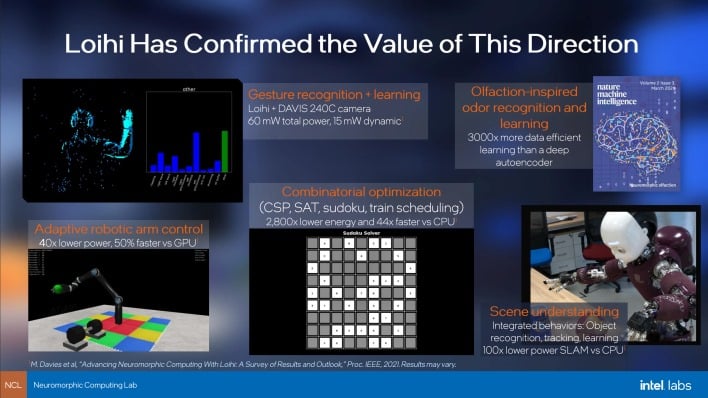

If we take Intel and its partners at their word, the first-generation Loihi chips have already proven the potential of this technology. They say that Loihi is 3000x as data-efficient at odor recognition and learning compared to a traditional deep autoencoder, 44x faster than a CPU at combinatorial optimization, and that it offers 150% the performance of GPU inferencing when performing adaptive robotic arm control. Oh, and it does all this while drawing "far less than 1 watt" of power.

It's not just about density, though. Intel's new neuromorphic chip has considerable architectural improvements over the last-generation part, too. The company says that its new baby supports spikes that carry integer magnitudes, whereas Loihi only supported binary spikes; that allows for greater workload precision. The new chip's neurons also support a handful of microcode instructions that make them more directly programmable than the original part's. Plus, it's simply faster at doing the same things: depending on which specific part of the circuit we're talking about, Loihi 2 operates between two and ten times as fast as the original part. That speeds up everything the chip does, much as a clock-speed bump would on a conventional CPU.
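To make the binary-versus-integer spike distinction concrete, here is a minimal sketch of a generic leaky integrate-and-fire (LIF) neuron, a textbook spiking-neuron model. It is not Loihi 2's actual neuron model or microcode. The threshold, the leak, and the choice of spike payload (the membrane potential at the moment of firing) are hypothetical values picked purely for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class LIFNeuron:
    """Generic leaky integrate-and-fire neuron (illustrative; not Loihi's real model)."""
    threshold: int = 10   # hypothetical firing threshold
    leak: int = 1         # hypothetical constant leak subtracted every time step
    graded: bool = False  # False: binary spikes; True: integer-magnitude spikes
    potential: int = 0    # membrane potential (internal state)

    def step(self, input_current: int) -> int:
        """Advance one time step and return the spike emitted (0 means no spike)."""
        # Integrate the incoming current, apply the leak, and never go below zero.
        self.potential = max(0, self.potential + input_current - self.leak)
        if self.potential < self.threshold:
            return 0
        # Fire: a binary spike only says "I fired"; a graded spike also carries
        # an integer payload (here, hypothetically, the potential at firing time).
        payload = self.potential if self.graded else 1
        self.potential = 0  # reset after firing
        return payload


def run(neuron: LIFNeuron, inputs: List[int]) -> List[int]:
    """Feed a sequence of input currents to one neuron and collect its spikes."""
    return [neuron.step(current) for current in inputs]


inputs = [4, 4, 4, 12, 0, 20]
binary_spikes = run(LIFNeuron(graded=False), inputs)
graded_spikes = run(LIFNeuron(graded=True), inputs)
print("binary:", binary_spikes)
print("graded:", graded_spikes)
```

With these inputs, both neurons fire on the same time steps, but the binary neuron emits `[0, 0, 0, 1, 0, 1]` while the graded one emits `[0, 0, 0, 20, 0, 19]`. The graded spike itself carries information that a downstream neuron would otherwise have to infer from spike timing or spike counts.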

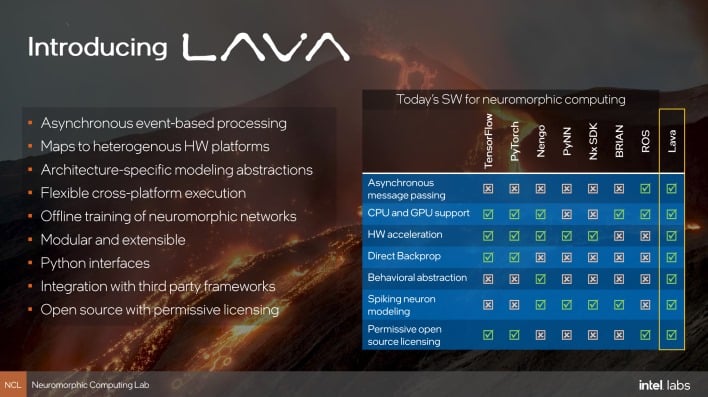

Intel's other big reveal for the day is the all-new Lava open-source neuromorphic computing framework. One of the big challenges that Intel and its research partners kept running into with the original Loihi chips was a lack of software that could cope with such highly proprietary, experimental hardware. Hoping to advance the field of neuromorphic computing as a whole, Intel has specifically designed its Lava framework to be platform-agnostic; the company says it has intentionally structured the code so that it is not tied to its own neuromorphic chips.

Additionally, Lava is fully open-source. Intel says it wants to encourage convergence in the field and avoid repeating the situation with traditional artificial neural networks, where developers face an arbitrary mess of frameworks and tools with varying feature sets and capabilities. Lava is also extensible: it can interface with existing frameworks like YARP, TensorFlow, PyTorch, and more. On the user side, Lava is driven entirely from Python, but Intel notes that its libraries use optimized C, CUDA, and OpenCL code where appropriate.
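One way to picture what "platform-agnostic" can mean in practice is to keep the description of a network separate from the code that executes it, so the same model runs on whichever backend is available. The sketch below does that for a single layer of spiking neurons with two interchangeable backends: a floating-point reference simulator and an integer-only, fixed-point emulation of the kind many low-power chips use. Every class and function name here (`NeuronLayerSpec`, `Backend`, `simulate`, and so on) is hypothetical. This is not Lava's API; Lava's real interfaces should be taken from its own documentation.

```python
# Hypothetical sketch of backend-independent model execution; NOT Lava's API.
from abc import ABC, abstractmethod
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class NeuronLayerSpec:
    """Hardware-independent description of a layer of spiking neurons (hypothetical)."""
    size: int          # number of neurons
    threshold: float   # potential at which a neuron fires
    decay: float       # fraction of the potential kept from one step to the next


class Backend(ABC):
    """Anything that can execute a NeuronLayerSpec; one subclass per target platform."""

    @abstractmethod
    def run(self, spec: NeuronLayerSpec, inputs: List[List[float]]) -> List[List[int]]:
        """Simulate one step per input frame and return binary spikes per step."""


class FloatBackend(Backend):
    """Reference simulation in ordinary floating point."""

    def run(self, spec, inputs):
        potentials = [0.0] * spec.size
        history = []
        for frame in inputs:
            spikes = []
            for i, current in enumerate(frame):
                potentials[i] = potentials[i] * spec.decay + current
                fired = potentials[i] >= spec.threshold
                if fired:
                    potentials[i] = 0.0  # reset after a spike
                spikes.append(int(fired))
            history.append(spikes)
        return history


class FixedPointBackend(Backend):
    """Integer-only emulation with 12 fractional bits, as low-power hardware might use."""

    FRACTION_BITS = 12

    def run(self, spec, inputs):
        scale = 1 << self.FRACTION_BITS
        threshold = round(spec.threshold * scale)
        decay = round(spec.decay * scale)
        potentials = [0] * spec.size
        history = []
        for frame in inputs:
            spikes = []
            for i, current in enumerate(frame):
                # Multiply, then shift right to drop the extra fractional bits.
                decayed = (potentials[i] * decay) >> self.FRACTION_BITS
                potentials[i] = decayed + round(current * scale)
                fired = potentials[i] >= threshold
                if fired:
                    potentials[i] = 0  # reset after a spike
                spikes.append(int(fired))
            history.append(spikes)
        return history


BACKENDS: Dict[str, Backend] = {"float": FloatBackend(), "fixed": FixedPointBackend()}


def simulate(spec: NeuronLayerSpec, inputs: List[List[float]],
             backend: str = "float") -> List[List[int]]:
    """Run the same model description on whichever registered backend is requested."""
    return BACKENDS[backend].run(spec, inputs)


spec = NeuronLayerSpec(size=2, threshold=1.0, decay=0.5)
inputs = [[0.75, 0.25], [0.75, 0.25], [0.0, 1.5]]
for name in BACKENDS:
    print(name, simulate(spec, inputs, backend=name))
```

With these inputs, both backends produce the same spike trains, `[[0, 0], [1, 0], [0, 1]]`. Supporting a new chip would mean adding another `Backend` subclass while leaving model descriptions untouched. In general, fixed-point rounding can make a hardware-style backend drift slightly from the floating-point reference when a potential lands very close to the threshold.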

For now, Intel only offers Loihi 2 hardware to researchers, and only in two forms: the Oheo Gulch single-chip add-in card, which comes with an Arria 10 FPGA for interfacing with Loihi 2, and soon, the stackable Kapoho Point board, which mounts eight Loihi 2 chips in a 4x4-inch form factor. Kapoho Point will have GPIO pins as well as "standard synchronous and asynchronous interfaces" that will allow it to be used with things like sensors and actuators for embedded robotics applications, as one example. The company says larger-scale systems remain in development.

The way Intel expects most partners to use Loihi 2, at least for now, is through the Neuromorphic Research Cloud, or NRC (not to be confused with the Neuromorphic Research Community, also called "NRC"). To be clear, you have to join the NRC before you can use the NRC. Fortunately, membership is free and "open to all qualified academic, corporate, and government research groups around the world." Given the substantial research investment Intel has poured into these parts, it's no surprise that the company says its goal is to "nurture a commercial neuromorphic ecosystem." To that end, members are encouraged to share their work with the NRC, though it's not a hard requirement.

If you're an artificial intelligence researcher and you want to sign up for the NRC, head to this page and click the "please email us" link at the bottom. And if you have any thoughts on Loihi 2, we'd love for you to comment below.