Google Translate Has Reached Human-Like Accuracy Thanks To Neural Machine Translation Engine

It's been a decade since Google introduced Google Translate, powered by its Phrase-Based Machine Translation (PBMT) algorithm. A lot has happened since then, particularly with regard to speech and image recognition, but machine translation has presented unique challenges and hasn't advanced quite as fast. Google hopes to change that with a new neural machine translation (NMT) system that uses bleeding-edge techniques to bring about the biggest improvements yet in the quality of machine translation.

There's a big difference between PBMT and NMT. The former breaks input sentences into words and phrases that are independently translated, whereas the latter looks at the entire input sentence as a single unit for translation.

"The advantage of this approach is that it requires fewer engineering design choices than previous Phrase-Based translation systems. When it first came out, NMT showed equivalent accuracy with existing Phrase-Based translation systems on modest-sized public benchmark data sets," Google explains. "Since then, researchers have proposed many techniques to improve NMT, including work on handling rare words by mimicking an external alignment model, using attention to align input words and output words, and breaking words into smaller units to cope with rare words."

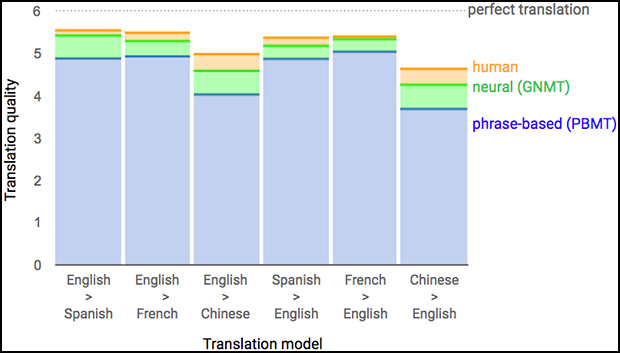

Google says that, at the outset, NMT didn't have the speed or accuracy needed to power a service like Google Translate. Fast forward to today and Google is confident in what it's built, saying the system is now fast and accurate enough to produce better translations. More specifically, Google says its new method reduces translation errors by 55 to 85 percent.

"Our model consists of a deep LSTM [long short-term memory] network with eight encoder and eight decoder layers using residual connections as well as attention connections from the decoder network to the encoder. To improve parallelism and therefore decrease training time, our attention mechanism connects the bottom layer of the decoder to the top layer of the encoder," Google stated in a white paper (PDF) explaining the technology.
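To give a rough sense of what "attention connections from the decoder network to the encoder" means, here is a minimal Python sketch of a single attention step. It is not Google's implementation: it swaps in simple dot-product scoring for whatever scoring function the production model uses, and the function names (`softmax`, `attend`) and the toy two-dimensional vectors are made up for illustration.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(decoder_state, encoder_states):
    """One attention step (illustrative, dot-product scoring assumed).

    Scores every encoder state against the current decoder state,
    then returns the weights and the weighted average ("context").
    """
    scores = [
        sum(d * e for d, e in zip(decoder_state, enc))
        for enc in encoder_states
    ]
    weights = softmax(scores)
    dim = len(encoder_states[0])
    context = [
        sum(w * enc[i] for w, enc in zip(weights, encoder_states))
        for i in range(dim)
    ]
    return weights, context


# Toy data: three input positions, each encoded as a 2-d vector.
encoder_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
decoder_state = [0.0, 2.0]

weights, context = attend(decoder_state, encoder_states)
print(weights)
print(context)
```

The weights indicate which input positions the decoder "looks at" when producing the next output word, which is the alignment of input and output words mentioned earlier.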

"To accelerate the final translation speed, we employ low-precision arithmetic during inference computations. To improve handling of rare words, we divide words into a limited set of common sub-word units ('wordpieces') for both input and output. This method provides a good balance between the flexibility of 'character'-delimited models and the efficiency of 'word'-delimited models, naturally handles translation of rare words, and ultimately improves the overall accuracy of the system," Google added.
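The wordpiece idea is easier to see with a small example. The Python sketch below splits words into sub-word units using a greedy longest-match rule against a tiny hand-written vocabulary. Both the rule and the vocabulary are assumptions for illustration: Google's system learns its wordpiece vocabulary from data, and the `segment` function and `<unk>` token here are hypothetical names, not part of Google's code.

```python
def segment(word, vocab, unk="<unk>"):
    """Split a word into sub-word pieces by greedy longest match.

    Illustrative only: at each position, take the longest piece
    found in `vocab`. If no piece matches, give up and return
    a single unknown token.
    """
    pieces = []
    start = 0
    while start < len(word):
        end = len(word)
        # Shrink the candidate until it is in the vocabulary.
        while end > start and word[start:end] not in vocab:
            end -= 1
        if end == start:  # nothing matched at this position
            return [unk]
        pieces.append(word[start:end])
        start = end
    return pieces


# Hypothetical toy vocabulary of common sub-word units.
vocab = {"trans", "lat", "ion", "able", "un", "s"}

print(segment("translations", vocab))
print(segment("untranslatable", vocab))
print(segment("xyz", vocab))
```

Neither "translations" nor "untranslatable" is in the toy vocabulary as a whole word, yet both can be built from a handful of shared pieces. That is how a fixed, limited vocabulary can still cover rare words.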

There's still a long way to go, and for now the technology is limited to translating Chinese into English. As Google readily admits, "machine translation is by no means solved." However, NMT is a significant milestone towards that ultimate goal.