Google AI Learns Subjective Task Of Editing Professional Level Photography

We talked yesterday about an example of how deep learning and artificial intelligence can be used to put words in people's mouths, creating video "proof" of something someone said, even if they never actually said it. Prospects like that are downright scary, but so too is the reality of the jobs AI will be able to take away from humans.

Case in point: professional photography editing. This is a bit of an odd one, as most photographers will edit their own photos, so maybe we should consider this an example of how AI could help someone get through their workflow more efficiently. And perhaps even deliver a better result in the end.

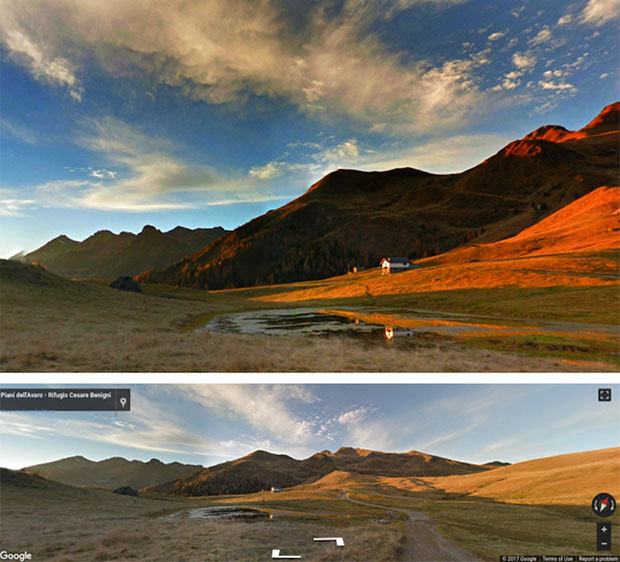

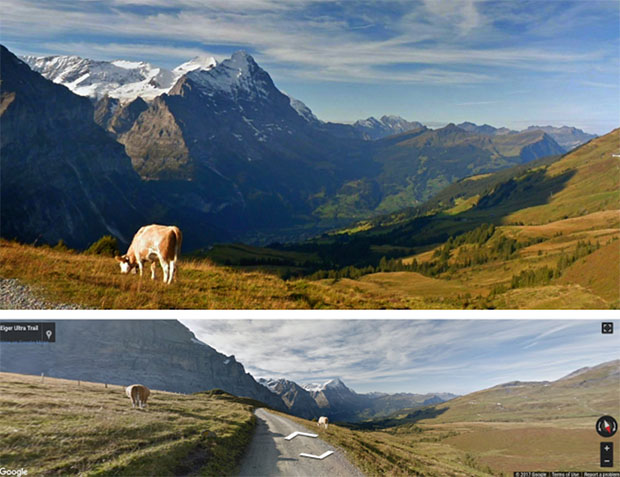

Using AI to apply a variety of filters to a photo and calling it a day isn't good enough; you also need AI that will analyze the resulting images and gauge their worth. That was taken care of in Google's research, although whether or not the AI did a good job will be subjective. Here are some examples:
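For a feel of how that edit-then-judge idea works in principle, here is a toy Python sketch. It is not Google's method: the tiny grayscale `photo`, the `candidates` table, and especially `toy_score` are all made up for illustration, with `toy_score` standing in for a learned aesthetic critic by simply rewarding contrast and penalizing blown-out pixels.

```python
from statistics import pstdev

# A tiny grayscale "photo": rows of pixel values in [0, 255] (illustrative data).
photo = [
    [60, 70, 80],
    [70, 80, 90],
    [80, 90, 100],
]

def adjust(image, gain, offset):
    """Apply a global brightness/contrast edit, clamping results to [0, 255]."""
    return [[max(0, min(255, round(p * gain + offset))) for p in row]
            for row in image]

def toy_score(image):
    """Hypothetical stand-in for a learned critic.

    Rewards contrast (spread of pixel values) and penalizes every pixel
    that has been clipped to pure black or pure white.
    """
    pixels = [p for row in image for p in row]
    clipped = sum(1 for p in pixels if p in (0, 255))
    return pstdev(pixels) - 25 * clipped

# Candidate edits as (gain, offset) pairs; names and values are assumptions.
candidates = {
    "original": (1.0, 0),
    "brighter": (1.0, 40),
    "more_contrast": (2.0, -80),
    "extreme": (10.0, -720),
}

def best_edit(image, candidates):
    """Score every candidate edit and return the winner plus all scores."""
    scored = {name: toy_score(adjust(image, gain, offset))
              for name, (gain, offset) in candidates.items()}
    return max(scored, key=scored.get), scored

best_name, scores = best_edit(photo, candidates)
print(best_name, scores)
```

The point of the sketch is the division of labor: one piece proposes edits, another judges them, and the "extreme" edit loses despite its high contrast because the judge dislikes clipped highlights and shadows. A real system would swap the hand-written score for a trained model.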

The results are as good as they are because the software is smart enough to analyze images by sections, rather than apply some blanket filter over the entire frame. Some parts of a photo might need more attention than others, for example with regard to saturation and HDR. On top of that, a "Dramatic Mask" effect is applied, enhancing shadows and lighting, as well as the color.
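To illustrate the difference between a blanket filter and section-by-section editing, here is a minimal Python sketch. It assumes a grayscale image stored as a list of rows, and the tile size, target brightness, and gain cap are arbitrary values picked for the example; none of it is taken from Google's implementation.

```python
def split_tiles(image, tile):
    """Yield the (row, col) top-left corner of each square tile."""
    for r in range(0, len(image), tile):
        for c in range(0, len(image[0]), tile):
            yield r, c

def local_brighten(image, tile=2, target=128, max_gain=2.0):
    """Brighten each tile toward a target mean brightness.

    Tiles whose mean is already at or above the target are left untouched,
    so only the sections that need attention get adjusted.
    """
    out = [row[:] for row in image]
    height, width = len(image), len(image[0])
    for r, c in split_tiles(image, tile):
        coords = [(y, x)
                  for y in range(r, min(r + tile, height))
                  for x in range(c, min(c + tile, width))]
        mean = sum(image[y][x] for y, x in coords) / len(coords)
        # Cap the gain so very dark tiles are not blown out.
        gain = min(max_gain, target / mean) if 0 < mean < target else 1.0
        for y, x in coords:
            out[y][x] = min(255, round(image[y][x] * gain))
    return out

# Left half is in shadow, right half is already well lit (illustrative data).
photo = [
    [30, 40, 200, 210],
    [40, 50, 210, 220],
]
edited = local_brighten(photo)
print(edited)
```

Here the dark left tile is lifted while the bright right tile is left alone, which a single global brightness boost could not do without washing out the highlights. Hard tile edges like these would show visible seams on a real photo, so a practical version would presumably use smoothly blended masks instead.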

When professional photographers were brought in to gauge the level of talent of the person (in this case, AI) editing the photos, 40% of the images were ranked as semi-pro or pro. That's impressive in itself, but as with all things deep learning, the smarts behind the tech will only get better as time goes on.