NVIDIA Unveils Powerful Blackwell GPU Architecture For Next-Gen AI Workloads At GTC

Some people have claimed that AI is a fad, or a flash in the pan. It's easy to understand the perspective; the hype around the explosion of generative AI feels similar to the hype around previous fads, like 3D TVs or the metaverse. AI isn't a fad, though. It is already transforming every single part of the tech industry, and it's about to start renovating the rest of the world, too. Right at the forefront of the AI revolution is NVIDIA, of course.

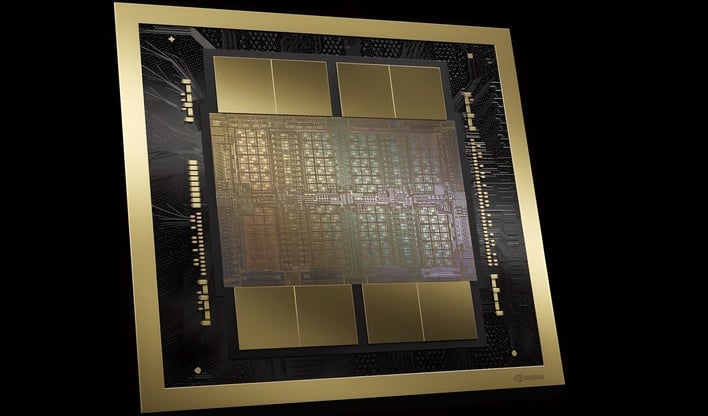

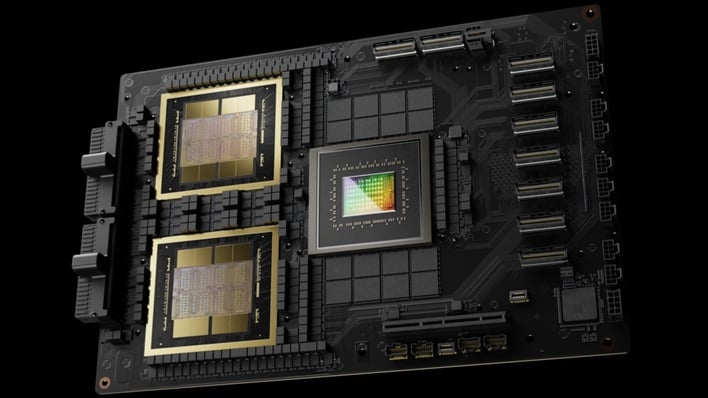

At GTC tonight, NVIDIA CEO Jensen Huang got on stage in his trademark leather jacket to make a multitide of announcements. Arguably the most important of them were the reveal of the Blackwell GPU architecture as well as NVIDIA's first-party systems that it will power. The image at the top is of the Blackwell-based B200 Tensor GPU, while the "GB200" moniker applies primarily to the GB200 Superchip, pictured here:

NVIDIA's Blackwell GPU Architecture Revealed

The GB200 includes a pair of Blackwell GPUs mated both to each other and to a 72-core "Grace" CPU. The Blackwell GPUs have their own HBM3e memory, up to 192 GB apiece, while the Grace CPU can have up to 480GB of LPDDR5X memory attached. The whole thing is connected with the new 5th-generation NVLink, but we'll talk more about that in a bit. First, let's look a little deeper at the Blackwell GPU itself.NVIDIA has complained for a few generations about being limited to the size of the reticle of TSMC's lithography machines. How did they work around this? As it turns out, the answer is to link two chips together with a new interface. The full-power Blackwell GPU is indeed two "reticle-sized dies" attached with the proprietary "NV-HBI" high-bandwidth interface. NVIDIA says that the connection between the two pieces of silicon operates at up to 10 TB/second and offers "full performance with no compromises."

The house that GeForce built makes many bold claims about Blackwell's capabilities, including that it can train new AIs at four times the speed of Hopper, that it can perform AI inference at thirty times the speed of the previous-generation parts, and that it can do this while improving energy efficiency by 2500%. That's a 25x improvement, if you do the math.

Of course, if you're doing something 30 times faster and your energy efficiency went up by 25 times, then you're actually using more power, and that's indeed the case here. Blackwell can draw up to 1,200 watts per chip, which is what happens when you increase the transistor count by 2.5x over the previous generation without a die shrink. Blackwell is built on what NVIDIA calls "4NP", a customized TSMC process tailored specifically for NVIDIA's parts. While it's likely to be improved over the "4N" process used for Hopper, it doesn't seem to be a compelte overhaul, so what NVIDIA has achieved is impressive to be sure.

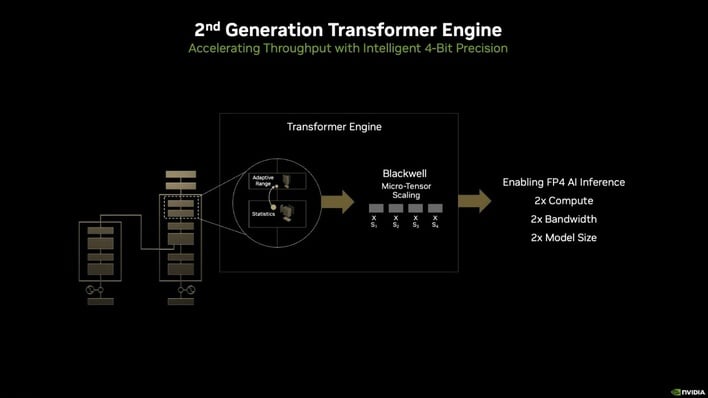

NVIDIA says that there are six primary breakthroughs that have enabled Blackwell to become "a new class of AI superchip." These breakthroughs are the extraordinarily high transistor count, the second-generation transformer engine, the 5th-generation NVLink, the new Reliability, Availability, and Serviceability (RAS) Engine, additional capabilities for "Confidential Computing" including TEE-I/O capability, and a dedicated decompression engine capable of unpacking data at 800 GB/second.

Interestingly, at this early stage, NVIDIA isn't talking the usual GPU specs. Things like CUDA core count, memory interface size and speed, clock rates, and so on—weren't part of the initial unveil. Usually when a company declines to share specifications like that, it's because they're unimpressive or misleading, but we doubt that's the case here. It seems more like NVIDIA simply wanted to emphasize other details.

We do know that the basis of Blackwell's massive AI performance is a heavily-revised tensor core and "transformer engine." The new second-generation transformer engine allows NVIDIA to support the FP4 data type on its GPUs, which, on AI models so-optimized, could offer double the throughput compared to models quantized to 8-bit precision. Not every model can be quantized to 4-bit, but for those that can, it theoretically offers double the performance in half the memory usage.

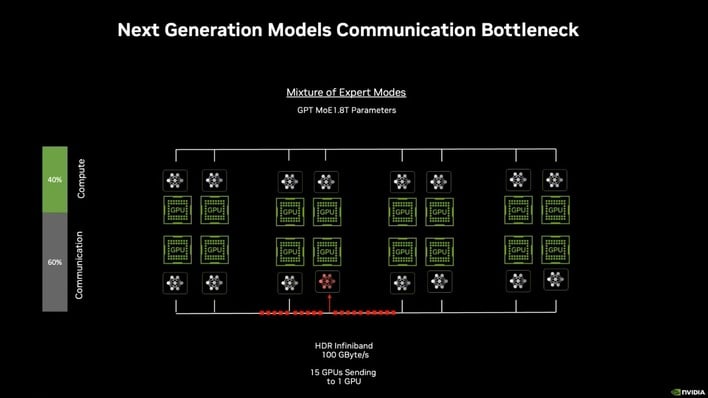

One of the interesting things that NVIDIA noted is that for the latest AI models, which use a "mixture of experts" topography, where multiple smaller models collaborate on a problem, as much as 60% of your time can be spent on communications instead of compute that solves the necessary work. In an effort to resolve this problem, NVIDIA is introducing new connection types—both NVLink 5 as well as new 800 Gb/s networking hardware.

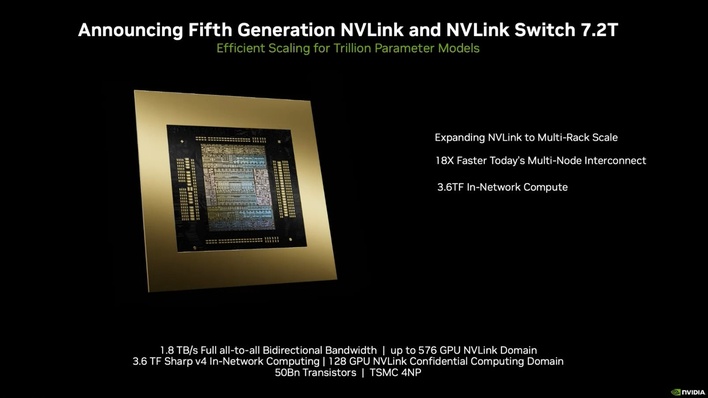

First up: NVLink 5 and the new NVLink Switch 7.2T. NVIDIA says that NVLink 5 doubles the performance of the previous generation, but that Blackwell also has double the number of connections compared to Hopper. That gives it a total of 1.8 TB/second of total bandwidth, or 900 GB/second unidirectional. It can connect up to 72 GPUs together into a single NVLink domain, meaning that those 72 chips can be utilized as a single GPU. A configuration set up in such a way is already offered by NVIDIA, and it's known as GB200 NVL72.

The NVIDIA GB200 NVL72 And New Blackwell-Based DGX SuperPOD

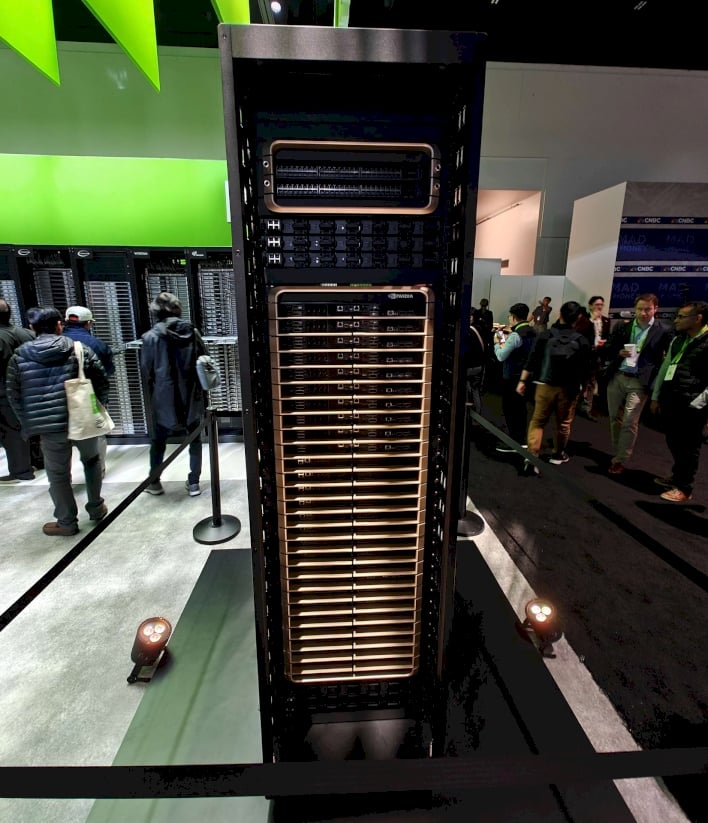

The NVIDIA GB200 NVL72 is an entire 19" rack that behaves as a single GPU. The GB200 NVL72 comprises 36 Grace CPUs and 72 Blackwell GPUs, with a total of 13.8 TB of HBM3e memory and peak AI inference performance of 1.44 EFLOPs. That's "E" or "exa-", or 1,000 petaflops; one million teraflops. As Jensen noted on stage, there are only a handful of computers in the entire world that can approach the exaflop range, and here's a single rack server offering that kind of performance. It will be fascinating to see if Blackwell holds up to NVIDIA's claims.

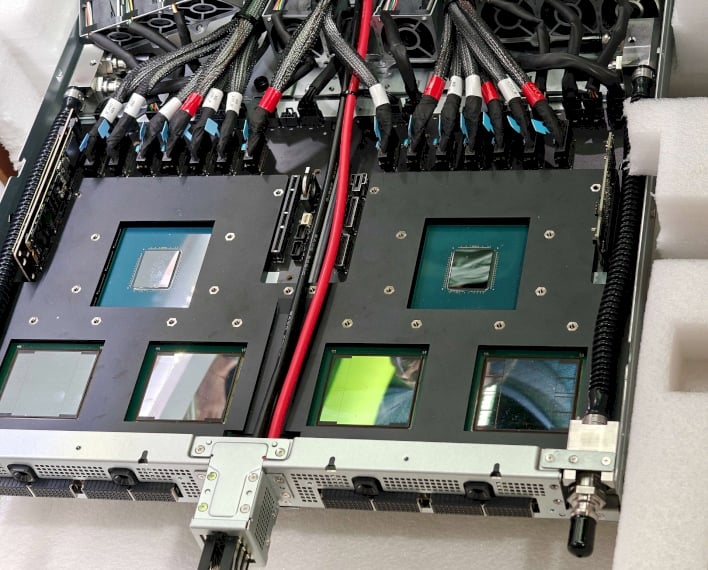

This fascinating rear view of the GB200 NVL72 offers a glimpse of what allows it to be so fast: bundled cables carrying the 130 TB/second of NVLink bandwidth that connects the machines. To handle this amount of throughput, NVIDIA had to create a specialized NVLink Switch processor (with its own 50 billion transistors). That chip has its own 3.6 TFLOPS of built-in compute just to handle the work of routing traffic across its 72 dual 200 Gb/sec SerDes ports.

One of the biggest changes from Blackwell to Hopper is that Blackwell is intended to be used with liquid cooling. This has a lot of advantages and it's a big part of why NVIDIA says Blackwell is so much cheaper to operate. Liquid cooling means that the datacenter itself doesn't require massive air conditioning, and it also allows the racks to be denser. Incredibly, the GB200 Superchip Compute Tray and the NVLink Switch Trays both use the 1U form factor, as you can see above. The full GB200 NVL72 rack has 18 Superchip Compute trays and 9 switch trays.

Of course, the GB200 Superchip and the GB200 NVL72 aren't the only ways to get access to Blackwell. While NVIDIA didn't talk about it at its presentation, NVIDIA will also be offering x86-based "HGX" servers that are optimized for rapid deployment or even, in the case of the HGX B100, drop-in replacement compatibility with existing HGX H100 systems. There aren't many details about the hardware yet, but these systems will come with x86-compatible CPU of some form mated to a baseboard with eight B100 GPUs at either a 1-kW (HGX B20) or 700-watt (HGX B100) power level.

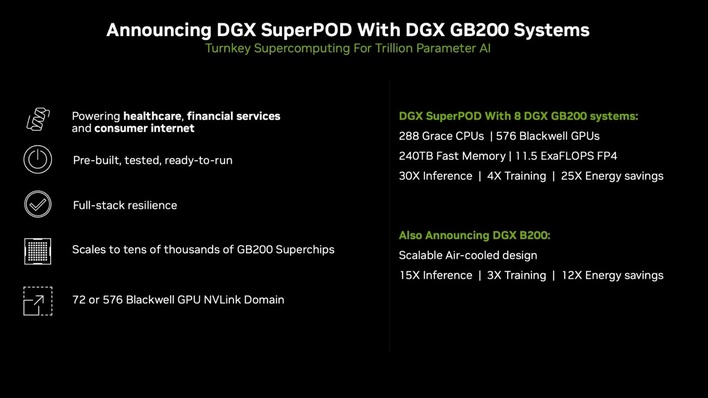

There's also the DGX SuperPOD if you happen to be a multi-billion dollar corporation or government looking for absolutely massive HPC performance. The DGX SuperPOD GB200 comes in a pre-configured "turnkey" form with eight DGX GB200 machines giving you a total of 576 Blackwell GPUs, 240TB of RAM, and the ability to scale up with additional SuperPOD units if need be. There's also an air-cooled version called "DGX B200" (missing the "G") if you prefer.

NVIDIA GTC 2024 Is About More Than Blackwell

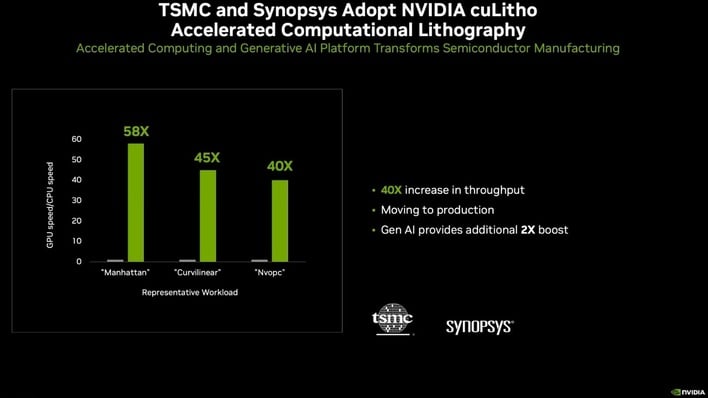

Beyond Blackwell, NVIDIA had many more announcements at the show. We're not going to go over all of them here for the sake of brevity, but we'll note some of the more interesting ones. Jensen noted that the company's cuLitho product for accelerated computational lithography has entered service with TSMC and Synopsys, offering a 40x increase in production throughput for that stage of the manufacturing process. As we reported previously, cuLitho uses GPUs (and now, AI) to accelerate the layout process, which is apparently compute-intensive.

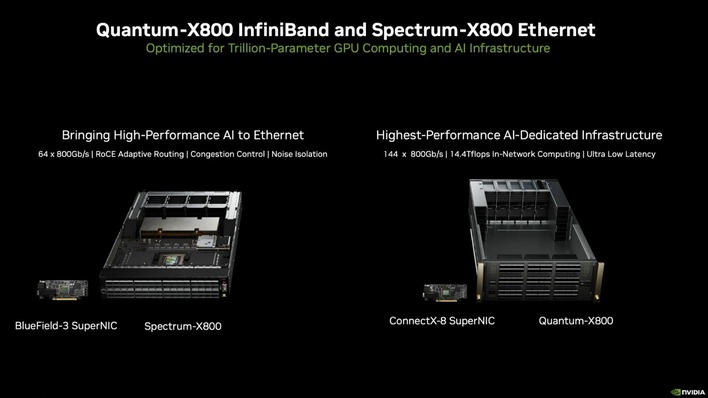

As we noted before, NVIDIA also announced that it's bringing out new hardware to support 800-Gbps transfer rates for both Ethernet and Infiniband. The BlueField-3 SuperNIC and Spectrum-X800 offer up to sixty-four 800-Gbps Ethernet connections, while the ConnectX-8 SuperNIC and Quantum-X800 can handle 144 of the same using Infiniband. NVIDIA says that the Quantum-X800 has 14.4 TFLOPS of in-network compute, which is pretty crazy.

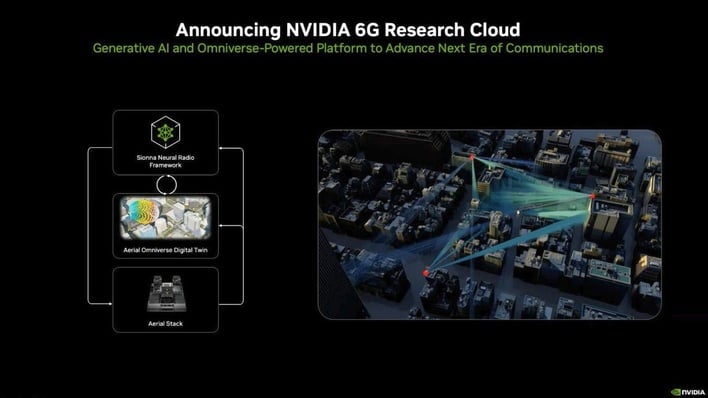

As usual, NVIDIA talked quite a bit about Omniverse and the use of "digital twins" at the presentation. If you're not familiar with the concept of a "digital twin", it's essentially a detailed physical simulation of a real-world environment used to simulate workflows or new construction within NVIDIA's Omniverse. One such digital twin that the company showed off was the NVIDIA 6G Research Cloud that is apparently being used to plan the deployment of next-generation wireless communications.

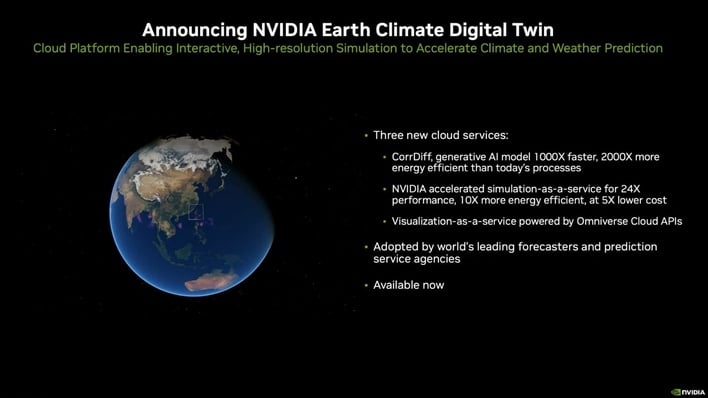

Another digital twin that is in wide use already is NVIDIA's Earth Climate Digital Twin. This model simulates the entire planet's weather systems down to a resolution of 2 kilometers, according to Jensen Huang, and should allow weather agencies to predict the weather both more accurately and also with greater precision. It's quite a project, and the company says that it is already in use by the "world's leading forecasters."

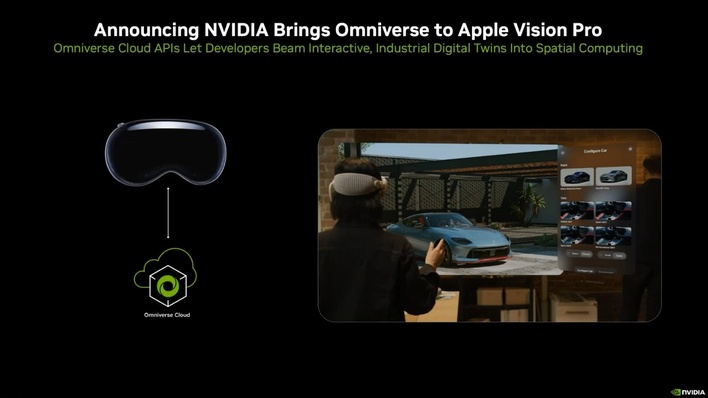

Also on the topic of Omniverse, the company quickly announced that its networked simulator technology would be adding support for Apple's Vision Pro headset and its so-called "spatial computing." We jest, but the idea of interacting with digital twins in real space using augmented reality could be incredibly powerful, and the Vision Pro is probably the best product on the market for doing exactly that, so it's really a match made in heaven.

Finally, NVIDIA talked about its Jetson Thor application processors for automotive and robotics uses. The company has apparently trained a new AI model for humanoid robots known as GR00T, in a cute nod to the fan-favorite tree man from Marvel's Guardians of the Galaxy. GR00T is a multi-modal foundational AI model for "embodied AI", which is basically a way to describe AI that controls physical devices (including robots) as opposed to AI that simply runs inside a computer.

NVIDIA says that, thanks to the Thor SoC (which includes an 800-TFLOP Blackwell GPU), GR00T is capable of things like understanding natural language and "emulating movements by observing human actions," learning skills from human trainers to navigate and interact with the real world. The on-stage demo with a couple of small robots wasn't particularly convincing, but it was pretty cute, at least.

NVIDIA had loads more announcements at GTC and we may be covering more of them in detail. If you're interested in hearing about (or reading an explainer on) any of NVIDIA's specific announcements, let us known in the comments below.