NVIDIA GeForce 8800 GTX and 8800 GTS: Unified Powerhouses

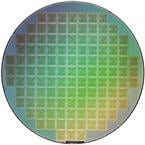

A couple of weeks ago, at an Editors' Day event at its headquarters in Santa Clara, California, NVIDIA proclaimed that it planned to "redefine reality" with its self-branded ultimate gaming platform. The products in the spotlight throughout the event were the new nForce 680i SLI chipset, the upcoming nForce 680a SLI, and a new family of graphics cards built around the company's forthcoming DX10-capable G80 GPU.

The G80 at the heart of what became known as the GeForce 8800 GTX and 8800 GTS represents a complete shift in NVIDIA's GPU architecture. NVIDIA's Tony Tomasi even went so far as to say, "every transistor in the chip is new". Of course, NVIDIA leveraged some technology from previous generations of products, but the DX10-compliant, unified architecture of the G80 is a major departure from the G70 GPU and the derivatives that power the GeForce 7 series of products.

After hearing what NVIDIA had to say about the G80 and the new nForce chipsets over the course of the event, the idea that the company had designed and built the ultimate gaming platform seemed like a distinct possibility, even to staunch PC enthusiast critics like us. Of course, we wouldn't pass judgment until we had tested the products for ourselves. Fortunately, the nForce 680i SLI and the GeForce 8800 GTX and GTS were ready for testing almost immediately, and today we can tell you all about them.

We've talked at great length about the new nForce 600 series of chipsets, and more specifically about the nForce 680i SLI, in a separate article. In this showcase and evaluation, we'll focus on NVIDIA's flagship GeForce 8800 GTX and 8800 GTS. Strap in, folks. It's going to be a wild ride.

NVIDIA GeForce 8800 Series: Features & Specifications

- NVIDIA Unified Architecture: Fully unified shader core dynamically allocates processing power to geometry, vertex, physics, or pixel shading operations, delivering up to 2x the gaming performance of prior-generation GPUs.
- Full Microsoft DirectX 10 Support
- NVIDIA SLI Technology
- NVIDIA Lumenex Engine
- 128-bit Floating Point High Dynamic Range (HDR)
- NVIDIA Quantum Effects Technology
- NVIDIA ForceWare Unified Driver Architecture (UDA)
- OpenGL 2.0 Optimizations and Support
- NVIDIA nView Multi-Display Technology
- PCI Express Support
- Dual 400MHz RAMDACs
- Dual Dual-Link DVI Support
- Built for Microsoft Windows Vista
- NVIDIA PureVideo HD Technology
  - Discrete, Programmable Video Processor
  - Hardware Decode Acceleration
  - HDCP Capable
  - Spatial-Temporal De-Interlacing
  - High-Quality Scaling
  - Inverse Telecine (3:2 & 2:2 Pulldown Correction)
  - Bad Edit Correction
  - Video Color Correction
  - Integrated SD and HD TV Output
  - Noise Reduction
  - Edge Enhancement

The GeForce 8800 GTX and GTS are based on a totally new unified GPU architecture, so they have little in common with the older GeForce 7 series of products. Even so, it would be a good idea to familiarize yourself with NVIDIA's previous product offerings and its platform as a whole. For a comprehensive look at the main features of the GeForce 7 series, and for more details regarding NVIDIA's multi-GPU SLI platform, we recommend taking a look at a few of our recent articles:

- NVIDIA nForce 680i SLI

- NVIDIA GeForce 7950 GX2 & Forceware Rel. 90

- NVIDIA GeForce 7900 GTX / GT & 7600 GT

- NVIDIA GeForce 7800 GTX (G70) Launch

- NVIDIA's PureVideo Technology

- NVIDIA's Multi-GPU Strategy: SLI

We know that's a lot of reading, but the information and performance data in the articles listed above will give you much of the background and architectural detail necessary to better understand the new products being announced today. If anything on the following pages is unclear, refer back to these articles for related details.