XFX GeForce 6200TC

It is usually far more interesting to read about the latest $600 flagship graphics card than about a budget card's performance. Unfortunately, the vast majority of us don't have the luxury of being able to afford those high-end cards and must instead turn our attention to more realistic alternatives. In the past, a mainstream-level card meant lackluster performance at best in most games, with little advantage over even the despised integrated graphics solutions.

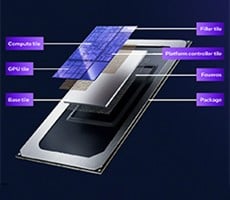

With the launch of the GeForce 6200 back in December 2004, NVIDIA brought key new features such as Shader Model 3.0 and TurboCache to the entry-level gamer. Because the TurboCache architecture makes efficient use of system memory, less memory needs to be installed on the card itself. This dramatically lowers the cost of producing the card, which brings prices down and leaves more headroom for the higher-end features mentioned above. As seen in our initial review of the GeForce 6200, the card had impressive performance considering its sub-$100 price. Months later, we're taking a look at XFX's implementation of the GeForce 6200 GPU and seeing how the TurboCache architecture fares in the latest crop of games.

| Specification | Value |
|---|---|
| GPU | GeForce 6200 |
| Graphics bus | PCI Express |
| Memory size | Supporting 128MB* |
| GPU/Memory clock | 350 / 700 MHz |
| Memory interface | 128-bit |
| Effective memory bandwidth (GB/sec) | 13.6 |
| Fill rate (billion texels/sec) | 1.4 |
| Vertices/sec (million) | 263 |
| Pipeline | 4 |
| RAMDACs (MHz) | 400 |
| Process | 0.11 micron |
| Output | S-Video, DVI, VGA |

\* System memory must be 512MB or higher.

| Feature | Description |
|---|---|
| NVIDIA TurboCache technology | Shares the capacity and bandwidth of dedicated video memory and dynamically available system memory for turbo charged performance and larger total graphics memory. |
| PCI Express support | Designed to run perfectly with the next-generation PCI Express bus architecture. This new bus doubles the bandwidth of AGP 8x delivering over 4GB/s in both upstream and downstream data transfers. |
| Microsoft DirectX 9.0 Shader Model 3.0 support | Ensures top-notch compatibility and performance for all DirectX 9 applications, including Shader Model 3.0 titles. |
| NVIDIA CineFX 3.0 engine | Powers the next generation of cinematic realism. Full support for Microsoft DirectX 9.0 Shader Model 3.0 enables stunning and complex special effects. Next-generation shader architecture delivers faster and smoother game play. |
| NVIDIA UltraShadow II technology | Enhances the performance of bleeding-edge games, like id Software's Doom III, that feature complex scenes with multiple light sources and objects. Second-generation technology delivers more than 4x the shadow processing power over the previous generation. |
| NVIDIA Intellisample 3.0 technology | The industry's fastest antialiasing delivers ultra-realistic visuals, with no jagged edges, at lightning-fast speeds. Visual quality is taken to new heights through a new rotated grid sampling pattern. |
| NVIDIA PureVideo technology | The combination of the GeForce 6 Series GPU's high-definition video processor and NVIDIA video decode software delivers unprecedented picture clarity, smooth video, accurate color, and precise image scaling for all video content to turn your PC into a high-end home theater. |
| NVIDIA ForceWare Unified Driver Architecture (UDA) | Delivers rock-solid forward and backward compatibility with software drivers. Simplifies upgrading to a new NVIDIA product or driver because all NVIDIA products work with the same driver software. |
| NVIDIA nView multi-display technology | Advanced technology provides the ultimate in viewing flexibility and control for multiple monitors. |
| NVIDIA Digital Vibrance Control 3.0 technology | Allows the user to adjust color controls digitally to compensate for the lighting conditions of their workspace, in order to achieve accurate, bright colors in all conditions. |
| OpenGL 1.5 optimizations and support | Ensures top-notch compatibility and performance for all OpenGL applications. |
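As a quick sanity check on the spec sheet, the listed fill rate appears to follow directly from the core clock and the pipeline count. The short sketch below assumes one texel per pipeline per clock, which is our simplification rather than anything XFX states, and the function name is purely illustrative. The 13.6 GB/sec bandwidth figure is an "effective" number that, per the TurboCache description, includes system memory as well as on-card memory, so we do not attempt to reproduce it here.

```python
def fill_rate_gtexels(core_clock_mhz: float, pipelines: int) -> float:
    """Peak texel fill rate in billions of texels per second.

    Assumption (ours, for illustration): each pipeline outputs
    one texel per core clock cycle.
    """
    texels_per_second = core_clock_mhz * 1e6 * pipelines
    return texels_per_second / 1e9


# Values taken from the specification table: 350 MHz core, 4 pipelines.
print(f"{fill_rate_gtexels(350, 4):.1f} billion texels/sec")
```

With the 350 MHz core clock and four pipelines, this lands on the same 1.4 billion texels/sec quoted in the specifications.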

Given the GeForce 6200's sub-$100 price tag, it's no surprise to see that the card comes with rather minimalist packaging. Opening the box, one finds the bare essentials ranging from a driver CD and user manual to an S-Video cable and the card itself.