OCZ IBIS HSDL Solid State Drive Preview

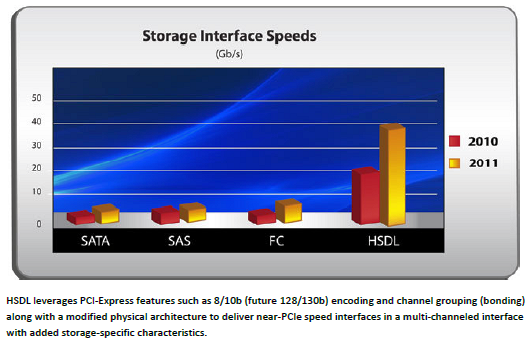

The chart above highlights the new interface's bandwidth advantage over existing technologies such as 6Gbps SATA, Serial Attached SCSI and Fibre Channel. There's no question that 10Gbps is a boatload of bandwidth, and OCZ claims it will be able to double that in 2011.
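To put the quoted figures in perspective, here is a quick back-of-the-envelope comparison of raw link rates. It is only a sketch: it assumes the 10Gbps HSDL figure and the 6Gbps SATA figure are directly comparable raw line rates, and the constant and function names are hypothetical, chosen for illustration.

```python
# Hypothetical comparison of the raw link rates quoted in the text (Gbps).
# Assumption: both figures are raw line rates and can be compared directly.
HSDL_2010_GBPS = 10.0                # single HSDL link, as reported
SATA_6G_GBPS = 6.0                   # 6Gbps SATA
HSDL_2011_GBPS = HSDL_2010_GBPS * 2  # OCZ's claimed doubling for 2011


def speedup(a_gbps: float, b_gbps: float) -> float:
    """Return how many times faster link a is than link b, by raw rate."""
    return a_gbps / b_gbps


if __name__ == "__main__":
    # prints "HSDL vs SATA 6Gbps: 1.67x"
    print(f"HSDL vs SATA 6Gbps: {speedup(HSDL_2010_GBPS, SATA_6G_GBPS):.2f}x")
    # prints "2011 HSDL vs SATA 6Gbps: 3.33x"
    print(f"2011 HSDL vs SATA 6Gbps: {speedup(HSDL_2011_GBPS, SATA_6G_GBPS):.2f}x")
```

Raw rates ignore encoding and protocol overhead, so real-world throughput will be lower for every interface in the comparison.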

The IBIS drive we're testing here uses a single HSDL connection to a single-port HSDL X4 PCI Express adapter card. We'll look at the hardware-level technologies employed shortly, but at a high level, the HSDL interface consists of four LVDS (Low Voltage Differential Signaling) pairs (eight conductors in total) bonded together into a single channel. High-speed LVDS pairs are used in a myriad of serial interconnect technologies, from HyperTransport to FireWire, SCSI, SATA, RapidIO and, of course, good ol' PCI Express. LVDS is simply the physical signaling method used to transmit a low-voltage signal over copper. OCZ reports that the HSDL interface uses an 8b/10b encoding scheme, much like PCI Express, to transmit its data, and that it also uses a PCI Express logic layer, so it's compatible with existing PCI Express architectures.
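The sketch below illustrates two ideas from the paragraph above: bonding four lanes into one channel, and the cost of 8b/10b encoding. It assumes HSDL stripes bytes round-robin across its lanes the way PCI Express does, and that the 10Gbps figure is the aggregate raw line rate; OCZ hasn't confirmed either detail here. The names `stripe`, `unstripe` and `usable_mb_per_s` are hypothetical.

```python
from typing import List

NUM_LANES = 4                 # four LVDS pairs bonded into one channel (per the text)
LINK_RATE_GBPS = 10.0         # assumed aggregate raw line rate of one HSDL link
ENCODING_EFFICIENCY = 8 / 10  # 8b/10b: every 8 data bits travel as 10 line bits


def stripe(payload: bytes, lanes: int = NUM_LANES) -> List[bytes]:
    """Distribute bytes round-robin across lanes (byte i goes to lane i % lanes)."""
    return [payload[lane::lanes] for lane in range(lanes)]


def unstripe(lane_data: List[bytes]) -> bytes:
    """Reassemble round-robin striped lanes into the original byte order."""
    out = bytearray()
    longest = max((len(d) for d in lane_data), default=0)
    for i in range(longest):
        for d in lane_data:
            if i < len(d):  # trailing lanes may be one byte shorter
                out.append(d[i])
    return bytes(out)


def usable_mb_per_s(raw_gbps: float, efficiency: float = ENCODING_EFFICIENCY) -> float:
    """Payload bandwidth in decimal MB/s after line-encoding overhead."""
    return raw_gbps * efficiency * 1000 / 8


if __name__ == "__main__":
    data = bytes(range(10))
    lanes = stripe(data)
    print(lanes)                  # per-lane byte streams
    assert unstripe(lanes) == data
    print(LINK_RATE_GBPS / NUM_LANES, "Gbps raw per lane")
    print(usable_mb_per_s(LINK_RATE_GBPS), "MB/s usable after 8b/10b")
```

Under these assumptions, 8b/10b spends 20% of the line rate on encoding, so a 10Gbps link carries roughly 1,000MB/s of payload before any protocol overhead.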