MIT Unveils Gen AI Tool That Generates High Res Images 30 Times Faster

AI image generators typically work through a process known as "diffusion". Basically, the model that's generating the image starts with what is essentially random noise, and successive sampling steps refine it until the image is sharp and realistic, or at least as realistic as the model can manage for what you've prompted. Diffusion is usually a time-consuming process requiring many steps, but MIT researchers have found a faster way.
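To make the step-by-step refinement concrete, here is a toy sketch in Python. It is not a real diffusion model: the "denoiser" below simply nudges random values toward a known target, whereas a real model predicts each correction with a neural network. The function names and the `strength` value are made up for illustration.

```python
import random

def toy_denoise_step(x, target, strength):
    """Move each value a fraction of the way toward the target.
    (Stand-in for the learned correction in a real diffusion model.)"""
    return [xi + strength * (ti - xi) for xi, ti in zip(x, target)]

def sample(target, num_steps, seed=0):
    """Start from random noise and apply num_steps refinement steps."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in target]  # pure noise
    for _ in range(num_steps):
        x = toy_denoise_step(x, target, strength=0.2)
    return x

def mean_abs_error(x, target):
    """Average distance between the sample and the target 'image'."""
    return sum(abs(a - b) for a, b in zip(x, target)) / len(target)

target = [0.1, 0.5, 0.9, 0.3]  # hypothetical four-pixel "image"
for steps in (1, 10, 50):
    err = mean_abs_error(sample(target, steps), target)
    print(f"{steps:>2} steps -> mean abs error {err:.6f}")
```

In this toy, more steps always means a closer result, which is the same reason conventional samplers run dozens of iterations per image.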

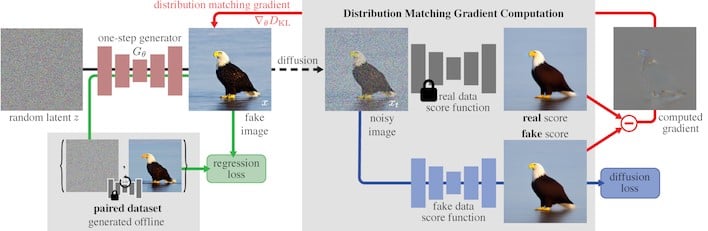

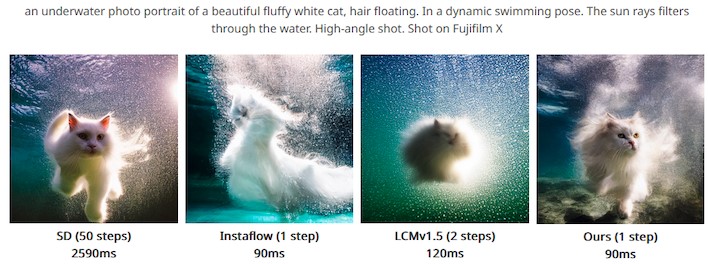

Using a new approach called "distribution matching distillation", researchers from MIT's Computer Science and Artificial Intelligence Laboratory have managed to reduce the diffusion process down to a single sampling step. Obviously, this is much faster than having to iterate on the image thirty to fifty times as you usually do with typical image diffusion processes. The results produced aren't bad, either; take a look:

There's a video, too, which vividly showcases the performance improvement. While Stable Diffusion 1.5 takes around a second and a half to generate an image on modern hardware, MIT's new DMD-based model takes around five hundredths of a second. The video allows a few images from SD 1.5 to fill in before rapidly spitting out a whole stream of pictures from the new model.
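As a quick sanity check on those timings, the arithmetic can be worked out in a couple of lines of Python. The two durations are the approximate figures quoted above, not measurements, and the variable names are just for illustration.

```python
# Approximate per-image timings quoted in the article (not measured here).
sd15_seconds = 1.5   # Stable Diffusion 1.5: "around a second and a half"
dmd_seconds = 0.05   # DMD-based model: "around five hundredths of a second"

speedup = sd15_seconds / dmd_seconds
print(f"speedup: about {speedup:.0f}x")
print(f"images per second: SD 1.5 ~{1 / sd15_seconds:.2f}, DMD ~{1 / dmd_seconds:.0f}")
```

That ratio works out to roughly 30, which lines up with the "30 times faster" figure in the headline.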

This isn't the first time people have tried to use diffusion distillation to accelerate image generation; models like InstaFlow and LCM have tried the same thing as MIT's DMD, with at-times questionable results. As with most things in computing, it's a trade-off between quality and performance. MIT's new method seems to offer the best of both worlds, with a balance between speed and resolved image detail.

This also isn't the first time that people have figured out how to do diffusion in far fewer steps; notably, Stability AI itself created a model called Stable Diffusion Turbo that can generate 512×512 images in a single diffusion step, although the results tend to be better with a few extra steps. Stable Diffusion Turbo works in a broadly similar manner to MIT's "DMD" approach; it is a distilled model, trained with the full Stable Diffusion XL model acting as a teacher that "leads" the Turbo model toward what full SDXL would produce.
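The general teacher-and-student idea behind distillation can be sketched with a toy example in Python. To be clear about the assumptions: this is not DMD's training objective or Stability AI's, both of which are considerably more elaborate. The "teacher" here is a made-up 50-step refinement loop on a single number, and the "student" is a straight line fitted to the teacher's outputs so that it can reproduce them in one step. All names and constants are hypothetical.

```python
import random

def teacher(z, target=0.7, num_steps=50, strength=0.05):
    """Slow multi-step sampler: refine noise z toward target step by step."""
    x = z
    for _ in range(num_steps):
        x = x + strength * (target - x)
    return x

def fit_student(noises, outputs, lr=0.1, epochs=200):
    """Fit a one-step student x = a*z + b to the teacher's outputs
    using gradient descent on mean squared error."""
    a, b = 0.0, 0.0
    n = len(noises)
    for _ in range(epochs):
        grad_a = sum(2 * (a * z + b - y) * z for z, y in zip(noises, outputs)) / n
        grad_b = sum(2 * (a * z + b - y) for z, y in zip(noises, outputs)) / n
        a -= lr * grad_a
        b -= lr * grad_b
    return a, b

rng = random.Random(0)
noises = [rng.gauss(0.0, 1.0) for _ in range(500)]
outputs = [teacher(z) for z in noises]   # 50 teacher steps per sample
a, b = fit_student(noises, outputs)

z_new = 1.234
print("teacher (50 steps):", teacher(z_new))
print("student (1 step):  ", a * z_new + b)
```

The toy student matches its teacher almost exactly because the teacher here is a simple linear function of the noise; real image models are not, which is why one-step distillation usually involves some trade-off in quality.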

We have to salute the efforts of developers working on AI technologies who release their work publicly. While image generators like DALL-E and Meta AI's Imagine can produce extremely impressive results, these groups are highly protective of their technology and jealously guard it from curious public eyes. Meanwhile, you can go read about MIT's findings at this link.