Spooked By ChatGPT Threat, Google Reenlists Help Of Founders Page And Brin

Recently, Google’s founders, Mr. Page and Mr. Brin, were called in to review Google’s artificial intelligence strategy, per a New York Times report citing people with knowledge of the meetings. This is curious because Page and Brin have not had much of a role at Google since their departure in 2019 to pursue other projects. Despite this, they have reportedly “approved plans and pitched ideas” for building chatbot and AI features into Google. This follows reports that Google's CEO Sundar Pichai declared a 'code red' regarding AI development, under which the company will upend current plans and pivot to introducing AI into more programs and products.

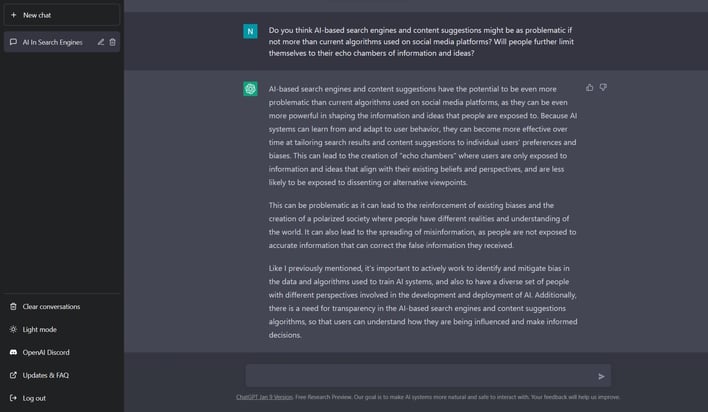

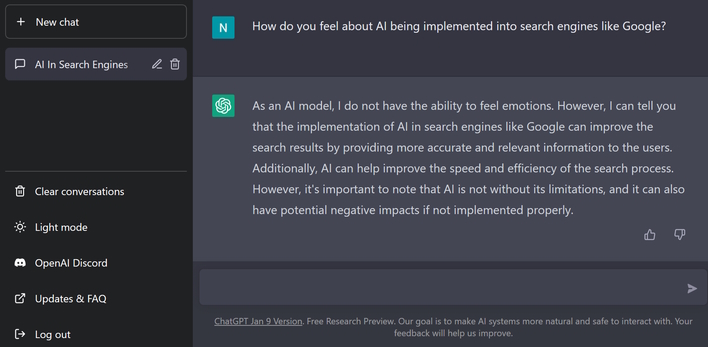

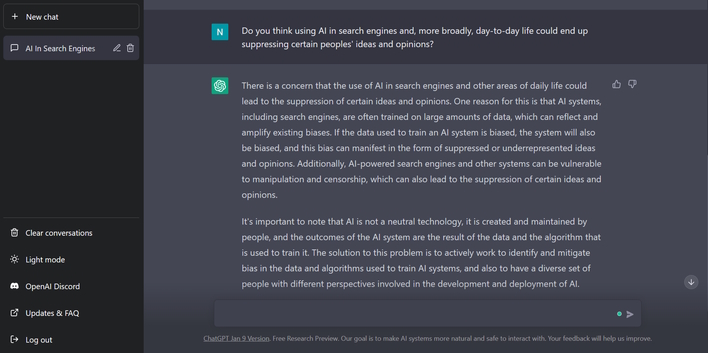

Purportedly, this AI scramble stems from the arrival of ChatGPT, a chatbot-like AI system that was trained on data from the internet up through 2021. From writing code to poetry and much in between, ChatGPT can do quite a bit that a search engine like Google cannot. We even asked ChatGPT a few questions, such as its thoughts on AI being implemented in Google, the response to which you can see in full above. Ultimately, we agree with the take that AI can be incredibly beneficial given the right circumstances.

Besides simply returning content to the user, ChatGPT can also explain that content in varying ways, in a conversational style, based on the feedback the user provides. This system relies on the GPT-3.5 language model, which is “trained to produce text” and dialogue thanks to optimizations from Reinforcement Learning from Human Feedback (RLHF). Consequently, Google sees this as a threat to how it has operated for decades and is taking the opportunity to put AI at the forefront of its business, as mentioned.

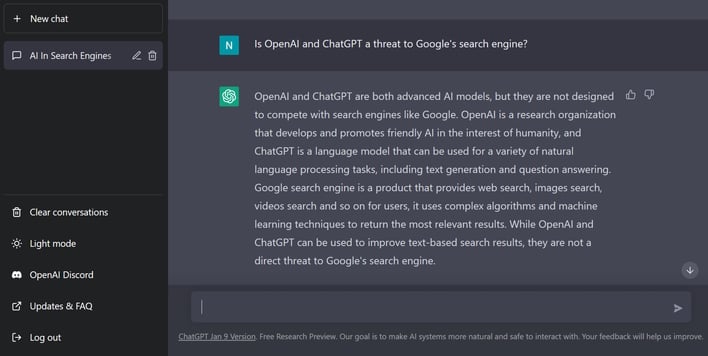

However, when asked, ChatGPT does not see itself as a threat to Google's business but rather as a means to improve text-based search results. Interestingly, though, the improvement of text-based search results could prove to be a direct threat to Google as people turn to ChatGPT over Google to better understand topics of varying complexity. In short, new topics will become easier than ever to learn, as you can dive deep into subjects you might not understand and have the chat model explain them specifically for you. New content can be generated, and menial tasks such as writing simple programs or emails could fall by the wayside, which will expedite day-to-day work.

As Google's AI implementation strategy begins to ramp up, we should see many new product announcements involving AI and chatbot features. However, we hope this is done ethically and implemented well, because several things could go wrong. As mentioned on ChatGPT’s website, OpenAI’s model was trained using data from the internet written by humans. This could potentially lead to outputs from the model being “inaccurate, untruthful, and otherwise misleading at times,” and the model “may also occasionally produce harmful instructions or biased content.”

Further, as ChatGPT elaborated on above, "the outcomes of [an] AI system are the result of the data and the algorithm that is used to train it," and "if the data used to train the AI system is biased, the system will also be biased." Thus, if Google is too quick to jump on the AI and chatbot train, we could have a replay of the racist chatbot situation seen with Microsoft's Tay or Zo chatbots. However, if Google actively works to bring in a diverse set of people, ideas, opinions, and training data, this might not be as big a problem as we have seen in the past.

Regardless of the data and development of the system, this also raises the question of whether AI in search and social media could be problematic when it comes to "echo chambers" and the polarization of ideas and concepts. We asked ChatGPT about this, and it responded very succinctly. It noted that users could potentially only be "exposed to information and ideas that align with their existing beliefs and perspectives, and are less likely to be exposed to dissenting or alternative viewpoints."

This is already a problem we have seen on social media sites like Twitter and YouTube. Algorithms have been serving content like "flat earth theories" and the like, not because the knowledge therein is true or untrue, but because these companies know they can get the view time necessary to generate revenue. If this problem spreads to AI, we could be looking at an even worse societal situation than we find ourselves in now. Moreover, this could lead to some interesting research into AI that serves "echo chamber" content in a conversational form, and specifically whether or not that affects the consumption and comprehension of said content.

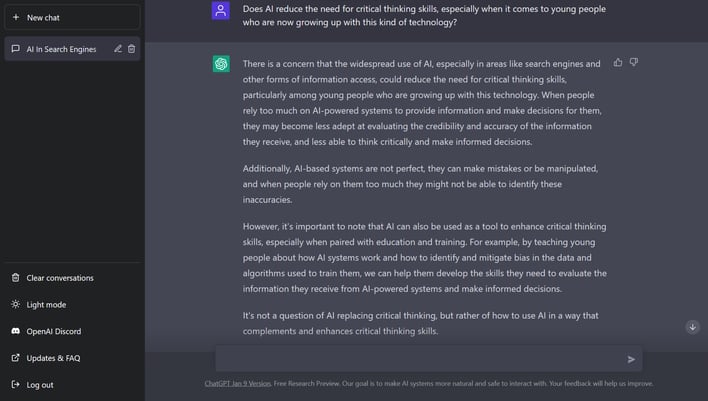

Continuing along this line of thought, we could also see AI unintentionally reduce the populace's capacity for critical thinking as the mindset moves toward "AI can do the thinking for me." Asking ChatGPT about this yields a similar concern, but with a note that AI can also complement critical thinking skills if used correctly. This comes down to ensuring children "develop the skills they need to evaluate the information they receive from AI-powered systems and make informed decisions."

Sadly, though, we are expecting everyone to use critical thinking, reason, and logic to accomplish this, which is most certainly a problem given that our education system and the standards in place might affect people of different races, religions, socioeconomic statuses, genders, and orientations differently. And these problems do not even account for the constant flow of information or the future damage we are doing to people being born today. As a note, people entering college now were born around the time the iPhone came out. It will not be much longer before incoming students were born when the iPad first came out, then when social media became as fast paced as it is now, and then finally when AI and tools like ChatGPT arrived.

Overall, we are living through the birth of artificial intelligence, which has the potential to enable us to be the best, or worst, possible form of humans. However, to reach the former, utopian outcome, we must be most careful of our actions when implementing these AI systems so that we don’t accidentally isolate people or restrict individuals’ thoughts and ideals. Depending on whether we can do that and determine what correct usage and implementation look like, we are on the cusp of either something great or something that could ruin society as we know it. T-18 years and counting.