NVIDIA Unleashes Quadro 6000 and 5000 Series GPUs

We'd like to cover a few final data points before bringing this article to a close. Throughout all of our benchmarking and testing, we monitored how much power our test system was consuming using a power meter. Our goal was to give you an idea of how much power each configuration used while idling and under a heavy workload. Please keep in mind that we were measuring total system power consumption at the outlet here, not just the power being drawn by the graphics cards alone.


Power Consumption and Operating Temperatures

How low can you go?

Given the power-hungry reputation of NVIDIA's Fermi architecture, it comes as no surprise to see the Quadro 6000 pulling significantly more juice from the wall socket. Although it was relatively tame at idle, the test system required 446W under load with the 6000 installed, 12% more than with the FirePro V8800. The Quadro 5000 proved greener, requiring only 385W under load, or about 14% less power than the 6000.
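The percentages above can be cross-checked with a little arithmetic. The sketch below is in Python (our choice; the article itself contains no code) and uses only the load wattages quoted in this section. Note that the V8800 system figure it derives is an estimate back-calculated from the rounded 12% number, not a measured value; because of that rounding, the true figure could fall anywhere from roughly 396W to 400W.

```python
# Load power figures reported above (total system draw at the wall, in watts).
QUADRO_6000_LOAD_W = 446
QUADRO_5000_LOAD_W = 385
QUADRO_6000_OVER_V8800 = 0.12  # "12% more than the FirePro V8800" (rounded)


def percent_change(new: float, baseline: float) -> float:
    """Percentage change from baseline to new (negative means lower)."""
    return (new - baseline) / baseline * 100


# Estimated V8800 system load, back-calculated from the rounded 12% figure.
# This is an approximation, not a measurement from the article.
v8800_load_w = QUADRO_6000_LOAD_W / (1 + QUADRO_6000_OVER_V8800)

# Quadro 5000 system load relative to the Quadro 6000 system load.
q5000_vs_q6000 = percent_change(QUADRO_5000_LOAD_W, QUADRO_6000_LOAD_W)

print(f"Estimated V8800 load: ~{v8800_load_w:.0f} W")
print(f"Quadro 5000 vs 6000: {q5000_vs_q6000:.1f}%")
```

Running it prints an estimated V8800 system load of about 398W and a Quadro 5000 figure of -13.7% relative to the 6000, which rounds to the "about 14% less" quoted in the text.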

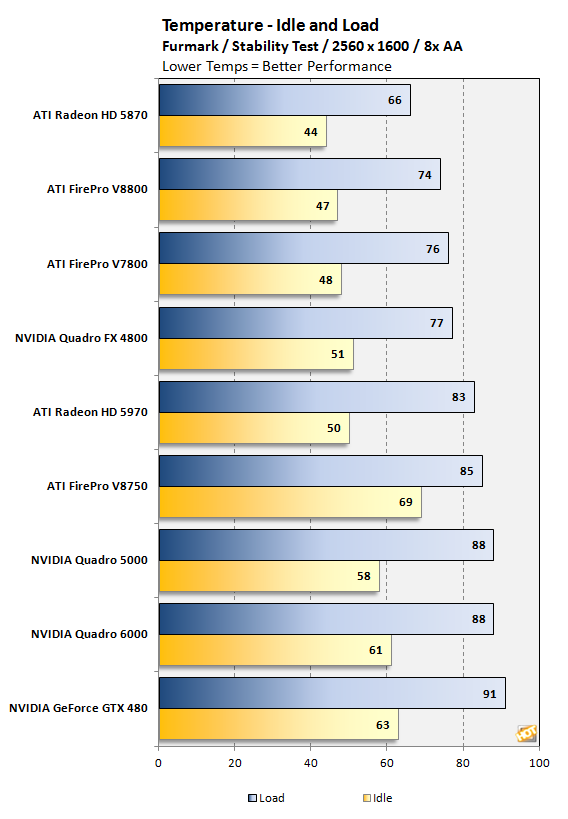

Much like their gaming cousin, the GTX 480, our Quadro workstation videocards run hot. Fully loaded, both cards max out at 88 degrees Celsius. That's just three degrees shy of the GTX 480's load temperature. In comparison, the V8800 operated 14 degrees cooler at full load.

The Quadro graphics cards feature dual-slot cooling solutions that provide a peaceful working environment under normal conditions. Fortunately, we did not experience any irritating fan noise from the cards throughout most of the benchmarks. Fan noise increased slightly for a few minutes during SPEC testing, but stayed well within our comfort range. The Quadro 6000 became noisy only during temperature testing at full load. If you plan on running your videocard at full load for long periods of time, consider the Quadro 5000, which remained relatively quiet under all conditions.