NVIDIA Unleashes Quadro 6000 and 5000 Series GPUs

Not long ago, we reviewed ATI's entire FirePro workstation graphics card lineup. Our testing showed that the V8800 delivered considerable performance gains over the previous-generation V8750 at a lower price point. That is a combination any buyer can appreciate, especially those looking to upgrade sooner rather than later. At the time, however, the market was not yet settled, as we were still awaiting NVIDIA's answer to ATI's FirePro products. The wait is now over: NVIDIA has just launched a new series of professional graphics cards based on its Fermi architecture.

Three new models arrive today to bolster the Quadro lineup and affirm NVIDIA's commitment to the professional workstation crowd. As mentioned, the new cards are based on NVIDIA's Fermi architecture, which brings a new set of cutting-edge features to the table. First, note the change in naming convention: these cards no longer carry the FX designation and are simply branded Quadro, followed by the model number.

NVIDIA Quadro 6000 and 5000 Workstation Graphics Cards

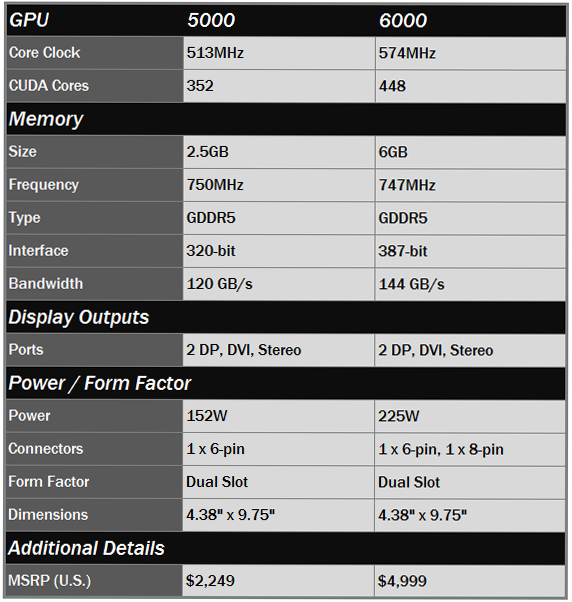

Today, we'll be looking at NVIDIA's two ultra-high-end models, the Quadro 6000 and Quadro 5000. Both offer an impressive list of specifications and features, but they carry price tags to match. Before we get into those details, we'd like to mention the third card launching today, which we aren't reviewing in this article. The Quadro 4000 pairs 256 CUDA cores with 2GB of GDDR5 memory and delivers 89.6GB/s of memory bandwidth over a 256-bit interface. At $1,199, the Quadro 4000 replaces the FX 3800 in NVIDIA's lineup while offering considerably more features, and it is the most affordable of the three new Fermi-based cards. For the highlights of the Quadro 6000 and 5000, check out the chart below.
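As a quick sanity check on the Quadro 4000's quoted memory bandwidth, here is a minimal sketch assuming the usual peak-bandwidth relation for GDDR5: bus width in bytes multiplied by the effective per-pin data rate. Only the 256-bit width and the 89.6GB/s figure come from NVIDIA's specs; the per-pin data rate is back-calculated from them, and the function names are purely illustrative.

```python
# Sketch: peak memory bandwidth from bus width and effective per-pin data rate.
# Assumption: peak bandwidth (GB/s) = (bus width in bits / 8) * data rate (Gbps).
# The 256-bit width and 89.6GB/s figure are the Quadro 4000 specs; the data
# rate is derived from them, not taken from a spec sheet.

def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Return peak memory bandwidth in GB/s."""
    bytes_per_transfer = bus_width_bits / 8  # convert bus width from bits to bytes
    return bytes_per_transfer * data_rate_gbps


def implied_data_rate_gbps(bus_width_bits: int, bandwidth_gbs: float) -> float:
    """Invert the relation: per-pin data rate needed to reach a quoted bandwidth."""
    return bandwidth_gbs / (bus_width_bits / 8)


if __name__ == "__main__":
    rate = implied_data_rate_gbps(256, 89.6)  # Quadro 4000 figures
    print(f"Implied effective data rate: {rate:.1f} Gbps per pin")
    print(f"Check: {peak_bandwidth_gbs(256, rate):.1f} GB/s")
```

Running it shows that a 256-bit bus moves 32 bytes per transfer, so 89.6GB/s works out to an effective rate of 2.8 Gbps per pin.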


One thing is certain from their specs: these cards mean business. They were designed specifically for professional designers, engineers, and scientists, with a strong emphasis on visual computing in certified applications such as Maya, 3D Studio Max, and SolidWorks. Compared with mainstream GF100-based gaming cards, their core and memory clock speeds are much lower. We suspect this was done to ensure stability, extend the longevity of the hardware components, and provide quieter operation. At the same time, NVIDIA has dramatically increased memory capacity. So how does this combination of lower clocks and more memory translate to the world of professional graphics? Before we get to the performance results, let's take a closer look at the cards themselves and find out what sets them apart from the previous generation of Quadro graphics cards.