Turning still 2D photos into 3D mesh models is neither a quick nor a particularly easy task. Quite the opposite: NVIDIA's vice president of graphics research, David Luebke, says achieving it "has long been considered the holy grail unifying computer vision and computer graphics." Perhaps not for much longer.

NVIDIA is getting ready to present its 3D MoMa inverse rendering pipeline at the Conference on Computer Vision and Pattern Recognition in New Orleans. The promise of this AI-powered tool is that it could let architects, designers, concept artists, game developers, and other professionals make quick work of importing an object into a graphics engine. Once the object is inside, they could modify its scale, change its material, and experiment with different lighting effects, NVIDIA says.

"By formulating every piece of the inverse rendering problem as a GPU-accelerated differentiable component, the NVIDIA 3D MoMa rendering pipeline uses the machinery of modern AI and the raw computational horsepower of NVIDIA GPUs to quickly produce 3D objects that creators can import, edit and extend without limitation in existing tools," Luebke explains.

From NVIDIA's vantage point, life is a whole lot easier for artists and engineers when a 3D object is in a form that can be dropped into popular editing tools, such as game engines. That form is a triangle mesh with textured materials.

For the most part, creators and developers build 3D objects using complex techniques that are both time-consuming and labor-intensive. That's where advances in neural radiance fields can be a boon. In this case, NVIDIA claims a single Tensor Core GPU running its 3D MoMa tool can generate triangle mesh models in only an hour. The result can then be imported into popular game engines and modeling tools already in use for further manipulation.

"The pipeline’s reconstruction includes three features: a 3D mesh model, materials and lighting. The mesh is like a papier-mâché model of a 3D shape built from triangles. With it, developers can modify an object to fit their creative vision. Materials are 2D textures overlaid on the 3D meshes like a skin. And NVIDIA 3D MoMa’s estimate of how the scene is lit allows creators to later modify the lighting on the objects," NVIDIA explains.
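The idea of recovering editable materials by treating rendering as a differentiable step can be illustrated with a deliberately tiny example. The Python below is a hypothetical sketch, not NVIDIA's 3D MoMa code: it assumes the geometry (one unit normal per triangle) and a single directional light are already known, models shading with a simple Lambertian term, and recovers each triangle's unknown albedo by gradient descent on the squared difference between rendered and observed brightness. The function names `shade` and `fit_albedo` are illustrative only.

```python
# Hypothetical toy sketch of inverse rendering; NOT NVIDIA's 3D MoMa code.
# Assumptions: geometry (one unit normal per triangle) and a single
# directional light are known; only the per-triangle albedo is unknown.

def shade(albedo, normal, light_dir, intensity):
    """Lambertian shading: albedo * intensity * max(0, n . l)."""
    ndotl = sum(n * l for n, l in zip(normal, light_dir))
    return albedo * intensity * max(0.0, ndotl)


def fit_albedo(normals, observed, light_dir, intensity, steps=200, lr=0.5):
    """Recover per-triangle albedo by gradient descent on squared error."""
    albedo = [0.5] * len(normals)  # initial guess for every triangle
    for _ in range(steps):
        for i, (normal, target) in enumerate(zip(normals, observed)):
            # d(shade)/d(albedo) is the albedo-independent factor I * max(0, n . l)
            k = shade(1.0, normal, light_dir, intensity)
            pred = albedo[i] * k
            grad = 2.0 * (pred - target) * k  # derivative of (pred - target)**2
            albedo[i] -= lr * grad
    return albedo


# Usage: "photograph" three triangles with known albedo, then invert.
normals = [(0.0, 0.0, 1.0), (0.0, 0.6, 0.8), (0.6, 0.0, 0.8)]
light = (0.0, 0.0, 1.0)
true_albedo = [0.2, 0.7, 0.9]
observed = [shade(a, n, light, 1.0) for a, n in zip(true_albedo, normals)]

recovered = fit_albedo(normals, observed, light, 1.0)

# With material and lighting separated, relight under a new, brighter light.
new_light = (0.0, 0.6, 0.8)
relit = [shade(a, n, new_light, 2.0) for a, n in zip(recovered, normals)]
print([round(a, 3) for a in recovered])  # prints [0.2, 0.7, 0.9]
```

Because the recovered material is stored separately from the light, the same triangles can be re-shaded under a different light direction and intensity, which is the kind of lighting edit the quote describes. The actual pipeline reconstructs the mesh, materials, and lighting together on GPUs; this toy fixes the geometry and light to show only the principle.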

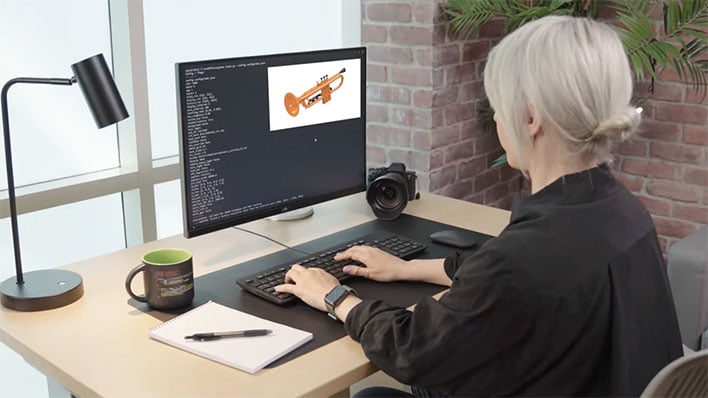

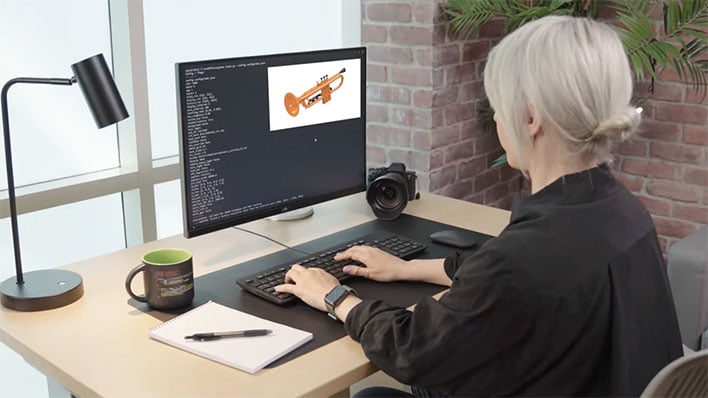

NVIDIA decided to showcase its 3D MoMa tool through a jazz demonstration, because "jazz is all about improvisation." The team collected around 500 images of five jazz instruments (100 each) from different angles, and then used 3D MoMa to reconstruct them into 3D mesh models.

As you can see in the video above, the instruments react to light as they would in the real world. For a deeper dive into the technology at play, you can check out the paper NVIDIA published, which it is presenting today.