NVIDIA Editor's Day: The Re-Introduction of Tesla

When you visit NVIDIA’s Web site and hit the Products drop-down menu, a long list of the company’s offerings scrolls down in front of you. That’s impressive for an organization that originally made its name designing the fastest desktop display adapters.

Graphics processors for the desktop, workstation, and server space still dominate NVIDIA’s portfolio. But it’s also involved in notebooks, handhelds, software development, and more recently, high-performance computing.

For the uninitiated, high-performance computing (or HPC) has historically meant using large clusters to crunch applications or algorithms that demand massive horsepower. Physics calculations, weather forecasting, manufacturing, medical imaging, cancer research, and financial analysis are some of the fields with problems so complex that they require the cooperative efforts of HPC configurations.

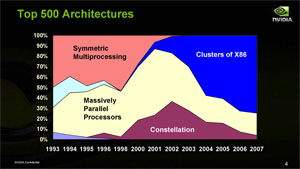

The top 500 architectures, broken down by classification

Notice that the fastest supercomputers employ many-core designs

The composition of the fastest 500 systems, maintained at top500.org, has changed fairly dramatically since the list began in 1993. Back then, symmetric multiprocessing and massively parallel processors dominated the scene. And while some of the most powerful supercomputers today still leverage the strengths of MPP architecture, a majority of the top 500 now employ clusters of commodity x86 processors. That’s not a bad way to fill up a few racks’ worth of space while sucking down hundreds of kilowatts of power.

Rise of the GPU

The thing about multi-core CPUs is that they’re most effective when the problem isn’t known in advance, thanks to tight execution pipelines and large caches. When the problem being addressed is known, as is often the case in HPC applications, the many-core engines driving the fastest supercomputers are much more potent.

NVIDIA's CUDA programming environment, catching on with a number of enterprises

The Tesla T10 processor sports double-precision floating-point, a first for NVIDIA

Care for an example of a many-core processor in action on your desktop? Just look to the GeForce 8-series or Radeon HD 3000-series cards. Each centers on an architecture designed for the extremely parallel problem of graphics rendering. However, with the help of a software programming environment called CUDA, NVIDIA is showing the HPC market how to take advantage of its GPUs’ massive floating-point horsepower for more general-purpose computing. One specialized application at a time, developers seem to be catching on.
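To give a rough idea of what general-purpose code on a GPU looks like, here is a minimal sketch of a classic data-parallel routine (SAXPY, y = a*x + y) written in CUDA. It is our own illustration, not something NVIDIA showed us, and the names `saxpy_kernel` and `saxpy_gpu` are hypothetical. The block size of 256 threads is an arbitrary assumption, and error handling is reduced to a single pass/fail status.

```cuda
#include <cuda_runtime.h>
#include <stddef.h>

// __global__ marks a function that executes on the GPU but is launched
// from ordinary CPU code.
__global__ void saxpy_kernel(int n, float a, const float *x, float *y)
{
    // Instead of looping over the array, every thread works out which
    // element it owns from its block and thread coordinates.
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n)                      // the last block may have spare threads
        y[i] = a * x[i] + y[i];
}

// Hypothetical host-side wrapper (illustrative only).
// Returns 0 on success and -1 if any CUDA call reports an error.
int saxpy_gpu(int n, float a, const float *x, float *y)
{
    if (n <= 0)
        return 0;                   // nothing to compute

    float *d_x = NULL;
    float *d_y = NULL;
    size_t bytes = (size_t)n * sizeof(float);

    // Allocate GPU memory and copy both input arrays across.
    bool ok = cudaMalloc((void **)&d_x, bytes) == cudaSuccess
           && cudaMalloc((void **)&d_y, bytes) == cudaSuccess
           && cudaMemcpy(d_x, x, bytes, cudaMemcpyHostToDevice) == cudaSuccess
           && cudaMemcpy(d_y, y, bytes, cudaMemcpyHostToDevice) == cudaSuccess;

    if (ok) {
        int threads = 256;                          // assumed block size
        int blocks = (n + threads - 1) / threads;   // round up to cover n

        // The <<<blocks, threads>>> launch syntax is a CUDA extension to C.
        saxpy_kernel<<<blocks, threads>>>(n, a, d_x, d_y);

        // The copy back waits for the kernel to finish before it runs.
        ok = cudaGetLastError() == cudaSuccess
          && cudaMemcpy(y, d_y, bytes, cudaMemcpyDeviceToHost) == cudaSuccess;
    }

    cudaFree(d_x);                  // freeing a NULL pointer is harmless
    cudaFree(d_y);
    return ok ? 0 : -1;
}
```

Built with `nvcc`, a call such as `saxpy_gpu(n, 2.0f, x, y)` from `main` would update `y` in place. Apart from the `__global__` qualifier, the built-in thread indices, and the launch syntax, everything here is ordinary C, which is a large part of CUDA's pitch to developers.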

The latest example of NVIDIA’s many-core processors taking the place of computing clusters comes from the University of Antwerp, where researchers studying tomography (imaging by sections, as in CT scans) built a desktop machine with four GeForce 9800 GX2 graphics cards. According to Dr. K. J. Batenburg, a researcher on the project, just one of the eight GPUs in his system (named FASTRA) is up to 40 times more powerful than a PC in the small cluster he previously had running tomographic reconstructions.

CUDA is very similar to standard C programming with a couple of extensions

A projection of cost, power, and space savings with GPU computing

We recently had the opportunity to visit NVIDIA’s Santa Clara campus for more information on the company’s HPC efforts, and learned from Dan Vivoli, executive vice president of marketing at NVIDIA, that FASTRA delivers the equivalent of about eight tons of rack-mount equipment drawing 230 kW of power, all in a package that would cost roughly $6,000 to build. During the course of our day we heard from five of NVIDIA’s partners who are now using the company’s graphics technology to accelerate complex problems that were either not possible to solve affordably before, or simply took much longer to address than they do now.