Fusion-io vs Intel X25-M SSD RAID, Grudge Match Review

Competitors: Fusion-io's 160GB ioDrive

As we mentioned, a disruptive technology is something that not only sets a new standard of performance, features, or both, but often does so in a way that drives a complete resurfacing of the competitive landscape. Clearly, that's what NAND Flash storage technology is affording us, and there are some rather bright minds out there looking to take advantage of the opportunity. Fusion-io currently offers an enterprise-class PCI Express SSD card that boasts ridiculously high read and write throughput numbers, but let's consider the why and how for a bit before taking a closer look at the technology.

First, let's look at what exists in current desktop storage architectures (just to keep it simple) and how things are hooked up. Currently, whether you're plugging in an SSD or a standard spinning hard drive, you're working with two interfaces to get the data off the drive to the host processor. The SATA (Serial ATA) interface needs to be accommodated so the drive can be accessed via the legacy ATA command set. Over the years, though ATA has migrated from PATA (Parallel ATA) to a higher speed serial interface, the low-level command set hasn't changed, in order to maintain backwards compatibility. Traditionally, the Southbridge controller on the motherboard (or perhaps a discrete SATA controller) offers a number of SATA ports to attach your hard drives to. But how does the SATA controller bolt up to the rest of the system architecture?

It's called bridging. The SATA controller, whether it resides on a discrete card or in the Southbridge chipset, has to be bridged to PCI Express or another native interface so the host CPU can access the data. It's not rocket science, obviously; that's why they call it a SouthBRIDGE. However, what does bridging do for us, besides affording the host processor the ability to talk to an otherwise foreign or "not native" interface (SATA) over a native one (PCIe, etc.)? In short, nothing. It just adds latency and slows things down. Even when bridging two high speed serial interfaces together, like SATA and PCI Express, you're adding latency going from one domain to the other. It's that simple. Again, this is a necessary evil, because we're not going to just rip out generations of ATA command set compatibility that easily. And yes, an even faster 6Gb/sec third generation SATA interface is coming.

However, with solid state technology, like NAND Flash, at our disposal, it becomes much easier to just bolt up to the native PCI Express interface and eliminate the latencies of bridging to SATA, as well as the current bandwidth limitations of 3Gb/sec SATA, which SSDs are already very close to saturating. It is with this disruptive approach that Fusion-io has entered the market.
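To put a rough number on that SATA ceiling, here is a minimal sketch of the arithmetic. It assumes the usual 8b/10b line encoding (8 payload bits for every 10 bits on the wire) and ignores all protocol and command overhead, so real-world figures will land somewhat lower. The function name is ours, purely for illustration.

```python
# Assumption: SATA uses 8b/10b line encoding, so only 8 of every
# 10 bits on the wire carry payload. Protocol overhead is ignored.
ENCODING_EFFICIENCY = 8 / 10


def payload_mb_per_s(line_rate_gbps: float) -> float:
    """Convert a raw serial line rate in Gb/s to a payload ceiling in MB/s."""
    payload_bits_per_s = line_rate_gbps * 1e9 * ENCODING_EFFICIENCY
    return payload_bits_per_s / 8 / 1e6  # bits -> bytes -> megabytes


sata_3g = payload_mb_per_s(3.0)  # current second-generation SATA
sata_6g = payload_mb_per_s(6.0)  # upcoming third-generation SATA

print(f"SATA 3Gb/sec payload ceiling: {sata_3g:.0f} MB/sec")
print(f"SATA 6Gb/sec payload ceiling: {sata_6g:.0f} MB/sec")
```

With a ceiling of roughly 300MB/sec on today's 3Gb/sec link, it's easy to see why fast SSDs are already bumping up against the interface.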

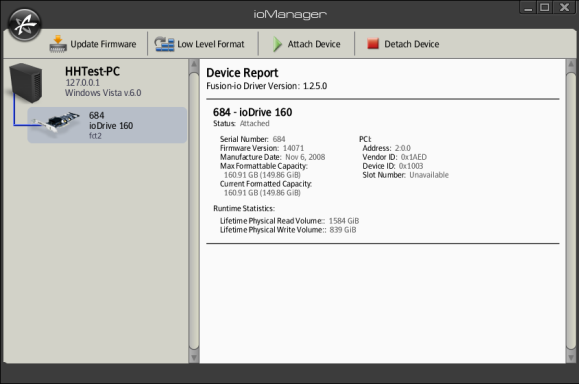


| Specification | Details |
| --- | --- |
| Capacity | 160GB (80GB and 320GB MLC available) |
| NAND Flash Components | Single-Level Cell (SLC) NAND Flash Memory |
| Bandwidth | Up to 750MB/s read, up to 650MB/s write |
| Read Latency | 50 microseconds |
| Interface | PCI-Express X4 |
| Form Factor | Half-Height PCIe Card |
| Life Expectancy | 48 years at 5TB write-erase/day |
| Power Consumption | Meets PCI Express x4 power spec 1.1 |
| Operating Temperature | -40°C to +70°C |
| RoHS Compliance | Meets the requirements of EU RoHS Compliance Directive |
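As a rough sanity check on the life expectancy figure, the sketch below works out what 48 years at 5TB per day adds up to. It assumes "5TB write-erase/day" means total data written per day, 365-day years, and decimal units (1TB = 1000GB); none of that is spelled out in the spec sheet, so treat the result as back-of-the-envelope only.

```python
# Figures from the spec table above.
RATED_YEARS = 48
WRITES_TB_PER_DAY = 5
CAPACITY_GB = 160

# Assumptions (not stated in the spec): 365-day years, decimal units.
DAYS_PER_YEAR = 365
GB_PER_TB = 1000

# Total data written over the rated lifetime.
total_written_tb = RATED_YEARS * DAYS_PER_YEAR * WRITES_TB_PER_DAY

# How many times that would overwrite the full 160GB capacity.
full_drive_writes = total_written_tb * GB_PER_TB / CAPACITY_GB

print(f"Total data written: {total_written_tb:,} TB")
print(f"Equivalent full-drive writes: {full_drive_writes:,.0f}")
```

Under those assumptions, the rating works out to 87,600TB written in total, or the full capacity of the card rewritten several hundred thousand times.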

Looking at the card itself, you'll note its pure simplicity and elegance. There are but a few passive components, along with a bunch of Samsung SLC NAND Flash and Fusion-io's proprietary ioDrive controller. The 160GB card we tested above is a half-height PCI Express X4 implementation. From a silicon content standpoint it's a model of efficiency, though the PCB itself is a 16-layer board, which is definitely a complex quagmire of routing for all those NAND chips.

In totality, the solution, along with its Samsung flash memory, is specified as offering up to 750MB/sec of read throughput and 650MB/sec for writes. You'll also note that the ioDrive is rated for 50 microseconds of read latency, which is pretty much standard for SLC flash-based SSDs these days. If you consider that the average standard hard drive is specified for 8 - 15 millisecond access times, it's obvious SSD technology is orders of magnitude faster for random access requests.
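To make that latency gap concrete, here is a minimal sketch comparing the ioDrive's rated 50 microsecond read latency against the 8 - 15 millisecond hard drive range quoted above. It assumes requests are issued strictly one at a time, with no queuing or parallelism and no transfer time, so the numbers are an idealized bound rather than a benchmark result. The function names are ours.

```python
IODRIVE_READ_LATENCY_US = 50   # rated read latency from the spec sheet
HDD_ACCESS_TIME_MS = (8, 15)   # typical hard drive range quoted above


def serial_requests_per_second(latency_us: float) -> float:
    """Requests per second if each one must finish before the next starts."""
    return 1_000_000 / latency_us


def speedup_over_hdd(hdd_access_ms: float) -> float:
    """How many times lower the ioDrive's latency is than a hard drive's."""
    return (hdd_access_ms * 1000) / IODRIVE_READ_LATENCY_US


print(f"ioDrive: {serial_requests_per_second(IODRIVE_READ_LATENCY_US):,.0f} requests/sec")
for ms in HDD_ACCESS_TIME_MS:
    rate = serial_requests_per_second(ms * 1000)
    print(f"HDD at {ms}ms: {rate:,.0f} requests/sec "
          f"({speedup_over_hdd(ms):.0f}x slower than ioDrive)")
```

On rated figures alone, that's a gap of roughly 160x to 300x, which is a bit over two orders of magnitude.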

ioDrive controller block diagram

Dropping down to the block diagram of the ioDrive's controller, we see the PCIe Gen1 X4 interface on the card offers 10Gbps of bandwidth in each direction (20Gbps total), which obviously offers more than enough headroom for data throughput as NAND Flash technology continues to scale. The picture above is an over-simplification, however, and the real magic of Fusion-io's proprietary technology resides in the "Flash Block Manager" block of this diagram. The design implements a 25 parallel channel (X8 banks) memory architecture, with one channel dedicated to error detection and correction, as well as self-healing (data recovery and remapping) capabilities for the flash memory at the cell level. By way of comparison, Intel's X25-M SSD implements a 10 channel design.
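For a feel of how much headroom that link leaves, the sketch below runs the numbers. It assumes PCIe Gen1's 2.5Gb/sec raw rate per lane with 8b/10b encoding and ignores packet overhead. The per-channel figure further assumes reads are striped evenly across the 24 channels left after one is set aside for error correction, which is our simplification and not something Fusion-io has detailed.

```python
# PCIe Gen1 link, per direction. Integer megabits keep the math exact.
LANES = 4
RAW_MBPS_PER_LANE = 2500          # 2.5Gb/sec raw per Gen1 lane
raw_mbps = LANES * RAW_MBPS_PER_LANE          # 10Gbps, as in the diagram
payload_mbps = raw_mbps * 8 // 10             # assumption: 8b/10b encoding
link_mb_per_s = payload_mbps / 8              # megabits -> megabytes

# Rated figures from the spec sheet.
RATED_READ_MB_S = 750
TOTAL_CHANNELS = 25
ECC_CHANNELS = 1

link_utilization = RATED_READ_MB_S / link_mb_per_s

# Assumption: reads are spread evenly over the non-ECC channels.
data_channels = TOTAL_CHANNELS - ECC_CHANNELS
per_channel_mb_s = RATED_READ_MB_S / data_channels

print(f"Link payload ceiling: {link_mb_per_s:.0f} MB/sec per direction")
print(f"Rated reads use {link_utilization:.0%} of the link")
print(f"Average per data channel: {per_channel_mb_s:.2f} MB/sec")
```

In other words, even at its rated peak, the card would need only about three quarters of what the x4 link can carry in one direction, and each flash channel only has to sustain a modest share of the load.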

Fusion-io's ioManager Control Panel

Low-level formats performed before each benchmark run...

Above is a screenshot of Fusion-io's rather simplistic ioManager software tool for managing the ioDrive volume in our Windows Vista 64-bit installation. We should note that there are three options for configuring the drive: 1) Max Capacity, 2) Improved Write Performance (at the cost of approximately 50% capacity), and 3) Maximum Write Performance (at the cost of approximately 70% capacity). We low-level formatted the ioDrive with option 1 before each new benchmark test, since we felt this would likely be the most common usage model.
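To see what those trade-offs mean on our 160GB card, here is a quick sketch of the usable capacity under each format option. The percentages are the approximate costs quoted above, so the results are ballpark figures, not what ioManager will necessarily report.

```python
CAPACITY_GB = 160  # the card as tested

# Approximate capacity cost of each format option, in percent.
FORMAT_OPTIONS = {
    "Max Capacity": 0,
    "Improved Write Performance": 50,
    "Maximum Write Performance": 70,
}


def usable_capacity_gb(capacity_gb: int, cost_percent: int) -> int:
    """Capacity left after giving up cost_percent of the drive."""
    return capacity_gb * (100 - cost_percent) // 100


for name, cost in FORMAT_OPTIONS.items():
    print(f"{name}: ~{usable_capacity_gb(CAPACITY_GB, cost)}GB usable")
```

That puts the two write-optimized modes at roughly 80GB and 48GB respectively, which is a steep price on a card of this size.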