Intel Xeon Processor E5 v4 Family Debut: Dual E5-2697 v4 With 72 Threads Tested

Xeon E5 v4 Processor Family Introduction

Intel is officially launching a brand-new series of Xeon processors today: the Xeon Processor E5 v4 family. Mainstream desktop products feature Intel's latest core technologies, like the Skylake-based Core i7-6700K. Mission-critical, big-iron parts like the Xeon E5 v4 series, by contrast, are more complex and go through far more qualification, so they tend to leverage core technologies that have long been proven in the consumer space. The Xeon Processor E5 v4 family, for example, is based on Broadwell, or more specifically Broadwell-EP. We've already published plenty of Broadwell coverage here at HH, so we won't go into detail again, but if you'd like a refresher, check out our desktop and mobile Broadwell launch articles.

A large assortment of Xeon E5 v4 series processors is en route, targeting a wide array of market segments, from high-performance professional workstations to multi-socket servers for big data analytics. The Xeon E5 v4 series supplants last year's E5 v3 series, which was based on Haswell-EP. The v3 and v4 families share many similarities, however.

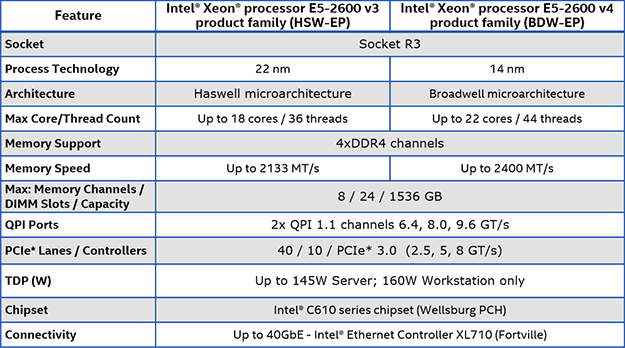

The Xeon E5 v4 series is socket-compatible with the v3 series, but it brings a number of updates and enhancements. The Broadwell-EP-based Xeon E5 v4, for example, is built on Intel's more advanced 14nm process, and the largest chips feature up to 22 processor cores (44 threads). The E5 v4 series still supports quad-channel DDR4 memory, but the maximum supported speed now tops out at 2400MT/s, up from 2133MT/s. The rest of the platform, from TDPs to PCI Express connectivity, remains mostly unchanged.

Most of the same information is conveyed in the chart above, but a few additional details are worth pointing out. Thanks to its additional cores, the E5-2600 v4 series now offers up to 55MB of last-level cache. Support for 3D die-stacked (3DS) LRDIMMs has been added, along with DDR4 write CRC and, of course, the higher memory speeds. With three 3DS LRDIMMs per channel, however, the maximum supported speed drops to 1600MT/s.

Like Haswell-EP, Broadwell-EP uses a two-ring bus structure, with QPI and I/O at the top of the die and the memory controller at the bottom. With Broadwell-EP, however, the rings are symmetric, whereas Haswell-EP had some asymmetric configurations. A big 22-core Broadwell-EP chip, for example, has 11 cores per ring (with 2.5MB of cache per core). We should mention that there are actually 24 cores on the die, though at this time no E5 v4 Xeon SKU has all 24 cores enabled. Intel also notes that hopping from one ring to the other costs about 5 cycles, but effectively the chip's entire 55MB cache (or whatever amount of cache is enabled on a particular model) is accessible by any core on either ring.
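The cache and thread figures follow from simple per-core arithmetic. The sketch below assumes each enabled core contributes its full 2.5MB cache slice and that Hyper-Threading is on; since some models enable a different amount of cache, treat it as a back-of-the-envelope check rather than a spec lookup. The `XeonConfig` class is a hypothetical helper, and the 18-cores-per-socket figure for the E5-2697 v4 is derived from the 72 threads of our dual-socket test system.

```python
from dataclasses import dataclass

LLC_MB_PER_CORE = 2.5  # last-level cache slice per core on Broadwell-EP


@dataclass(frozen=True)
class XeonConfig:
    """Hypothetical description of a Xeon E5 v4 system configuration."""
    name: str
    cores_per_socket: int
    sockets: int = 1
    threads_per_core: int = 2  # assumes Hyper-Threading is enabled

    @property
    def llc_mb_per_socket(self) -> float:
        """LLC per socket, assuming every enabled core brings its full slice."""
        return self.cores_per_socket * LLC_MB_PER_CORE

    @property
    def total_threads(self) -> int:
        """Hardware threads across all sockets."""
        return self.cores_per_socket * self.threads_per_core * self.sockets


top_bin = XeonConfig("22-core Broadwell-EP", cores_per_socket=22)
test_rig = XeonConfig("Dual E5-2697 v4", cores_per_socket=18, sockets=2)

for cfg in (top_bin, test_rig):
    print(f"{cfg.name}: {cfg.llc_mb_per_socket:.1f} MB LLC per socket, "
          f"{cfg.total_threads} threads")
# 22-core Broadwell-EP: 55.0 MB LLC per socket, 44 threads
# Dual E5-2697 v4: 45.0 MB LLC per socket, 72 threads
```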

The changes to the Xeon E5 v4 family's memory configuration result in lower latency and higher bandwidth. Intel's numbers show up to a 15% increase in bandwidth, with latency reductions across the board. We've got some in-house numbers on the pages ahead that put the new platform's improved memory performance to the test.
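For context, the memory speed bump alone raises theoretical peak bandwidth by a predictable amount: channels × transfer rate × bytes per transfer. This sketch assumes nominal DDR4-2133 and DDR4-2400 transfer rates and a 64-bit (8-byte) data path per channel with ECC bits excluded; the function name is our own, and these are peak figures only, so measured bandwidth will always come in lower.

```python
# Theoretical peak DRAM bandwidth for a quad-channel DDR4 memory controller.
CHANNELS = 4
BYTES_PER_TRANSFER = 8  # 64-bit data bus per channel, ECC bits excluded


def peak_bandwidth_gbs(transfer_rate_mts: int, channels: int = CHANNELS) -> float:
    """Return theoretical peak bandwidth in GB/s (10**9 bytes per second)."""
    return transfer_rate_mts * 1e6 * BYTES_PER_TRANSFER * channels / 1e9


v3 = peak_bandwidth_gbs(2133)  # Xeon E5 v3 maximum supported DDR4 speed
v4 = peak_bandwidth_gbs(2400)  # Xeon E5 v4 maximum supported DDR4 speed

print(f"E5 v3 (DDR4-2133): {v3:.1f} GB/s per socket")  # 68.3 GB/s
print(f"E5 v4 (DDR4-2400): {v4:.1f} GB/s per socket")  # 76.8 GB/s
print(f"Peak uplift: {(v4 / v3 - 1) * 100:.1f}%")       # 12.5%
```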

In addition to these high-level updates, the Xeon E5 v4 series brings new virtualization- and security-related features, along with further performance and efficiency enhancements. One of the new features is support for Posted Interrupts. Posted interrupts work with APIC Virtualization to reduce latency: interrupts destined for a virtual CPU are delivered directly to the running guest instead of being routed through the hypervisor. Among other things, this cuts down on costly VM entries and exits.
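To illustrate the idea, here is a deliberately simplified Python model of the posted-interrupt descriptor described in Intel's VT-x documentation: a 256-bit posted-interrupt request (PIR) bitmap plus an "outstanding notification" (ON) flag. The class and method names are hypothetical, atomic updates and the physical notification vector are left out, and this is a conceptual sketch rather than how any real hypervisor or the hardware implements it.

```python
# Simplified model of VT-x posted-interrupt delivery (illustrative only).


class PostedInterruptDescriptor:
    """Hypothetical stand-in for the in-memory posted-interrupt descriptor."""

    def __init__(self) -> None:
        self.pir = 0      # posted-interrupt requests: one bit per vector (0..255)
        self.on = False   # "outstanding notification" flag

    def post(self, vector: int) -> bool:
        """Sender side: record a vector; return True if a notification is needed."""
        if not 0 <= vector < 256:
            raise ValueError("interrupt vector must be in 0..255")
        self.pir |= 1 << vector
        if self.on:
            return False  # a notification is already pending; this bit rides along
        self.on = True
        return True


class VirtualCpu:
    """Hypothetical vCPU with a virtual interrupt request register (vIRR)."""

    def __init__(self) -> None:
        self.descriptor = PostedInterruptDescriptor()
        self.virr = 0
        self.vm_exits = 0

    def handle_notification(self) -> None:
        """Posted path: move pending vectors into vIRR while the guest keeps running."""
        if self.descriptor.on:
            self.descriptor.on = False
            self.virr |= self.descriptor.pir
            self.descriptor.pir = 0

    def inject_with_exit(self, vector: int) -> None:
        """Legacy path: count a VM exit, then set the vector as the hypervisor would."""
        self.vm_exits += 1
        self.virr |= 1 << vector


posted = VirtualCpu()
notify = [posted.descriptor.post(v) for v in (0x31, 0x41)]  # [True, False]
posted.handle_notification()  # one notification covers both vectors

legacy = VirtualCpu()
for v in (0x31, 0x41):
    legacy.inject_with_exit(v)

print(posted.virr == legacy.virr, posted.vm_exits, legacy.vm_exits)  # True 0 2
```

Both paths end with the same pending vectors, but the legacy path pays one VM exit per interrupt while the posted path pays none, which is where the latency savings come from.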

Finally, Broadwell-EP-based Xeon E5 v4 series processors also introduce Resource Director Technology, or RDT. With RDT, operating systems and virtual machine managers can monitor and manage shared platform resources in fine-grained detail, right down to memory bandwidth usage and last-level cache allocation. RDT allows resources to be partitioned on a per-application or per-VM basis, and works by assigning a Resource Monitoring ID (RMID) to a particular thread, which can then be monitored and managed with RDT.
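As one concrete example of how cache allocation can be driven from software, Linux exposes RDT through the resctrl filesystem: each resource group directory under /sys/fs/resctrl has a `schemata` file that takes lines such as `L3:0=fffff;1=fffff` (one capacity bitmask, or CBM, per L3 cache domain) and a `tasks` file listing the thread IDs in the group. The Python helper below only builds and validates such an L3 line. The function names are hypothetical, and the 20-bit default CBM width is an assumption; the real width is reported in /sys/fs/resctrl/info/L3/cbm_mask.

```python
def is_contiguous_cbm(mask: int) -> bool:
    """Intel CAT requires a capacity bitmask to be one run of consecutive 1 bits."""
    if mask <= 0:
        return False
    lowest_set = mask & -mask               # isolate the lowest 1 bit
    mask >>= lowest_set.bit_length() - 1    # drop trailing zeros
    return (mask & (mask + 1)) == 0         # a run of 1s plus 1 is a power of two


def l3_schemata_line(masks: dict[int, int], cbm_bits: int = 20) -> str:
    """Build an 'L3:<domain>=<hex cbm>;...' line for a resctrl schemata file."""
    for domain, mask in masks.items():
        if mask >> cbm_bits or not is_contiguous_cbm(mask):
            raise ValueError(f"invalid CBM {mask:#x} for L3 domain {domain}")
    return "L3:" + ";".join(f"{d}={m:x}" for d, m in sorted(masks.items()))


# Hypothetical split on a two-socket system: one VM's group gets the low 4 CBM
# bits of each socket's L3, and the remaining 16 bits go to everything else.
vm_line = l3_schemata_line({0: 0x0000F, 1: 0x0000F})
rest_line = l3_schemata_line({0: 0xFFFF0, 1: 0xFFFF0})
print(vm_line)    # L3:0=f;1=f
print(rest_line)  # L3:0=ffff0;1=ffff0
```

To apply a line like this, an administrator would (as root, with resctrl mounted) create a group such as `mkdir /sys/fs/resctrl/vm1`, write the line into that group's `schemata` file, and add thread IDs to its `tasks` file; `vm1` is a hypothetical group name.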