IBM Researchers Create Software for Programming Chips that Mimic Human Brain Function

Forget about artificial intelligence: researchers at IBM are working on a software ecosystem for programming silicon chips that could mimic human brain functions such as perception, action, and cognition. IBM said its solution is "dramatically different" from previous approaches, noting that it's tailored for a new class of distributed, highly interconnected, asynchronous, parallel, large-scale cognitive computing architectures.

"Architectures and programs are closely intertwined and a new architecture necessitates a new programming paradigm," said Dr. Dharmendra S. Modha, Principal Investigator and Senior Manager, IBM Research. "We are working to create a FORTRAN for synaptic computing chips. While complementing today’s computers, this will bring forth a fundamentally new technological capability in terms of programming and applying emerging learning systems."

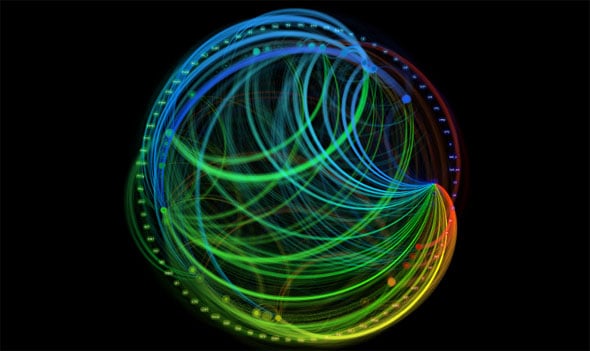

Visualization of a simulated network of neurosynaptic chips. Image Source: IBM

Wading through the geek-speak, IBM's path to so-called SyNAPSE chips involves a retooling of fundamental computing. Today's systems are excellent number crunchers capable of delivering fast and precise results, but they're also constrained by power and size, and are less effective when dealing with real-time processing of "noisy, analog, voluminous, Big Data produced by the world around us," IBM says.

The human brain, meanwhile, operates far more slowly by comparison and at low precision. It excels at tasks such as recognizing, interpreting, and acting upon patterns, and it does so while consuming about as much power as a 20-watt light bulb and occupying the volume of a two-liter bottle.

IBM's chip architecture would mimic the brain by giving each neurosynaptic core its own memory ("synapses"), processors ("neurons"), and communication ("axons") in close proximity, all working together in an event-driven fashion, IBM says. The chips would provide a platform for emulating and extending the brain's ability to respond to biological sensors and to analyze hordes of data from multiple sources at the same time.
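To make the synapse/neuron/axon split a little more concrete, here is a minimal toy sketch in Python. It is not IBM's programming model: the class name `NeurosynapticCore`, the true/false connection matrix, the single integer weight per neuron, and the integrate-and-fire threshold rule are all illustrative assumptions. It only shows the general idea of keeping memory, computation, and communication together in one core and doing work only when spike events arrive.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class NeurosynapticCore:
    """Hypothetical toy core: local synapses, neurons, and axons kept together."""

    # synapses[a][n] is True if input axon a connects to neuron n (the core's local "memory").
    synapses: list[list[bool]]
    # One integer weight per neuron: a simplifying assumption for this sketch.
    weights: list[int]
    # Firing threshold: an illustrative value, not taken from IBM's design.
    threshold: int = 2
    # Membrane potential of each neuron (the "processors"), filled in after init.
    potentials: list[int] = field(default_factory=list)

    def __post_init__(self) -> None:
        # Every neuron starts at rest with a potential of 0.
        self.potentials = [0] * len(self.weights)

    def step(self, active_axons: set[int]) -> list[int]:
        """Process one tick of incoming spike events and return the neurons that fire."""
        # Event-driven: only axons that actually carried a spike this tick are touched.
        for a in active_axons:
            for n, connected in enumerate(self.synapses[a]):
                if connected:
                    self.potentials[n] += self.weights[n]
        # Neurons at or above threshold fire; their spikes could be routed to other cores' axons.
        fired = [n for n, v in enumerate(self.potentials) if v >= self.threshold]
        for n in fired:
            self.potentials[n] = 0  # reset a neuron after it fires
        return fired


if __name__ == "__main__":
    core = NeurosynapticCore(synapses=[[True, False], [True, True]], weights=[1, 2])
    print(core.step({0}))   # axon 0 only reaches neuron 0, which stays below threshold
    print(core.step({1}))   # axon 1 pushes both neurons to threshold, so both fire
    print(core.step(set())) # no incoming events, so no work is done
```

In this toy, a spike on axon 0 only nudges neuron 0, while a later spike on axon 1 pushes both neurons over threshold; a tick with no events does no work at all, which is the intuition behind an event-driven, low-power design.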

"Architectures and programs are closely intertwined and a new architecture necessitates a new programming paradigm," said Dr. Dharmendra S. Modha, Principal Investigator and Senior Manager, IBM Research. "We are working to create a FORTRAN for synaptic computing chips. While complementing today’s computers, this will bring forth a fundamentally new technological capability in terms of programming and applying emerging learning systems."

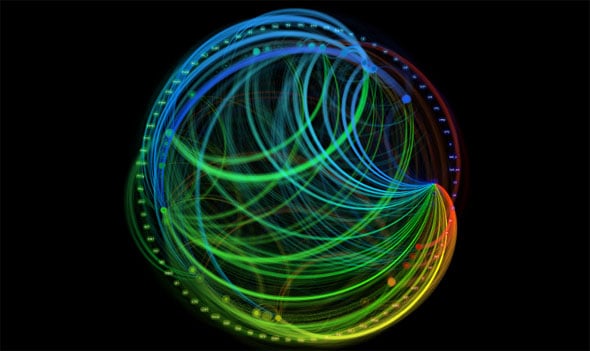

Visualization of a simulated network of neurosynaptic chips. Image Source: IBM

Wading through the geek-speak, IBM's path to so-called SyNAPSE chips involves a retooling of fundamental computing. Today's systems are excellent number crunchers capable of delivering fast and precise results, but they're also constrained by power and size, and are less effective when dealing with real-time processing of "noisy, analog, voluminous, Big Data produced by the world around us," IBM says.

The human brain, meanwhile, operates much slower (comparatively) and at low precision. It excels at tasks such as recognizing, interpreting, and acting upon patterns, and it does it while consuming the same amount of power as a 20 watt light bulb and occupying the volume of a two-liter bottle.

IBM's chip architecture would mimic the brain by giving each neurosynaptic core its own memory ("synapses"), processors ("neurons"), and communication ("axons") in close proximity, all working together in an event-driven fashion, IBM says. The chips would create a platform for emulation and extending the brain's ability to respond to biological sensors and analyzing hordes of data from multiple sources at the same time.