NVIDIA Unveils Tegra X1-Powered Drive CX and PX Automotive Computing Platforms

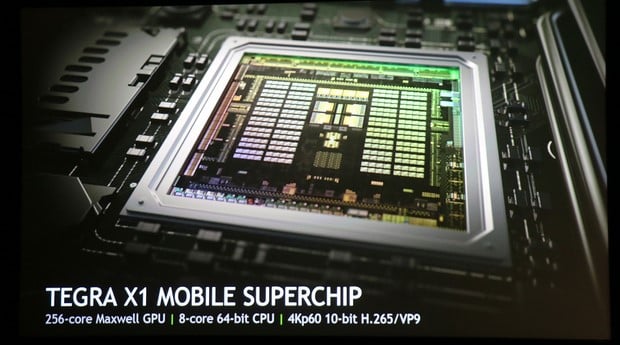

NVIDIA CEO Jen Hsun Huang hosted a press conference at the Four Seasons Hotel in Las Vegas this evening to officially kick off the company’s Consumer Electronics Show activities. Jen Hsun began with a bit of backstory on the Tegra K1, noting that it took NVIDIA approximately two years to get Kepler-class graphics into Tegra, but only a few months to cram a Maxwell-derived GPU into the just-announced Tegra X1. We’ve got more details on the Tegra X1 in this post, complete with pictures of the chip and reference platform, game demos, benchmarks, and video of the Tegra X1 handling a variety of workloads, including 4K 60 FPS video playback.

Over and above what we covered in our hands-on with the Tegra X1, Jen Hsun showed a handful of demos powered by the chip. In one, NVIDIA showed the Tegra X1, operating in a roughly 10-watt power envelope, running the Unreal Engine 4 Elemental demo. The SoC’s Maxwell-based GPU has not only the horsepower to run such a complex graphics demo but also the features and API support to render some of its more complex effects. Jen Hsun’s main point was that this same demo was used to showcase the Xbox One last year, yet the Xbox One consumes roughly 10x the power. Note that a 10-watt Tegra X1 would likely be clocked much higher than the versions of the chip that will find their way into tablets.

Jen Hsun also disclosed that the Tegra X1 supports FP16 and is capable of just over 1 TFLOPS of FP16 compute performance. He said that kind of performance isn’t necessary for smartphones at this point, but went on to discuss a number of automotive applications and rich in-car displays that could leverage the Tegra X1’s capabilities. NVIDIA’s CEO then unveiled the NVIDIA Drive CX Digital Cockpit Computer, which is built around the Tegra X1. The Drive CX can drive up to 16.6 Mpixels in total, equivalent to roughly two 4K displays. Keep in mind that those pixels don’t all have to reside on a single display: multiple displays can be used to add touch screens to different areas of the car or to power back-seat entertainment systems with individual screens.
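To put that 16.6-Mpixel figure in perspective, here’s a quick back-of-the-envelope sketch. It assumes “4K” means 3840x2160 UHD, and the cockpit display mix is purely hypothetical; NVIDIA didn’t say how the pixel budget is actually divided or enforced across outputs.

```python
# Pixel-budget arithmetic for the Drive CX's quoted 16.6-Mpixel maximum.
# Assumptions: "4K" means 3840x2160 UHD; the cockpit layout below is hypothetical.

UHD_4K = (3840, 2160)
DRIVE_CX_MAX_PIXELS = 16.6e6  # figure quoted at the press conference


def pixels(width: int, height: int) -> int:
    """Return the pixel count of a width x height display."""
    return width * height


def fits_budget(displays, budget=DRIVE_CX_MAX_PIXELS) -> bool:
    """True if the combined pixel count of all displays stays within the budget."""
    return sum(pixels(w, h) for w, h in displays) <= budget


two_4k = pixels(*UHD_4K) * 2
print(f"Two 4K panels: {two_4k / 1e6:.2f} Mpixels")

# Hypothetical cockpit: 4K center screen, 1920x720 cluster, two 1080p rear screens.
cockpit = [UHD_4K, (1920, 720), (1920, 1080), (1920, 1080)]
print("Hypothetical cockpit fits:", fits_budget(cockpit))
```

Two UHD panels come to about 16.59 Mpixels, which is why the 16.6-Mpixel ceiling maps so neatly onto “two 4K displays”; a mixed cockpit of smaller screens can fit under the same total.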

The NVIDIA Drive CX is complemented by new software dubbed NVIDIA Drive Studio, a design suite for developing in-car infotainment systems. Drive Studio encompasses everything from multimedia playback, navigation, and text-to-speech to climate control and other automotive functions. In a demo of the Drive CX and Drive Studio in action, Jen Hsun showed a basic media player on-screen alongside a fully 3D, Tron-themed navigation system, complete with accurate lighting, ambient occlusion, GPU-rendered vectors, and other advanced effects. The demo also included full Android running “in the car,” a surround-view camera system, and a customizable high-resolution digital instrument cluster using physically based rendering. The graphics fidelity of the Drive CX system was excellent, and clearly superior to anything we’ve seen from other in-car infotainment systems.

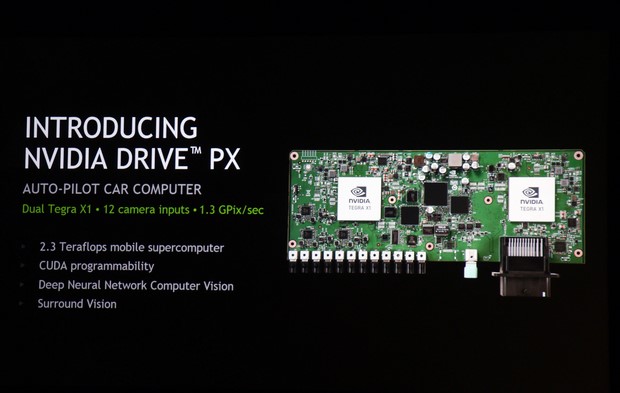

The automotive talk then turned to autonomous driving cars, environmental and situational awareness, path-finding, and learning. Jen Hsun then unveiled the NVIDIA Drive PX Auto-Pilot platform, which is powered by not one but two Tegra X1 chips. The two Tegra X1s, which can run in tandem or in a redundant configuration, can connect to up to 12 high-definition cameras and process up to 1.3 Gpixels/s. Together they offer up to 2.3 TFLOPS of compute performance, can record dual 4K streams at 30Hz, and leverage a technology NVIDIA is calling Deep Neural Network Computer Vision.
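NVIDIA didn’t specify the resolution or frame rate of those “high-definition” cameras, so the sketch below simply assumes 1920x1080 at 30 fps to show how the quoted 1.3 Gpixels/s might be divided up. Treat the camera format as an illustrative assumption, not a Drive PX spec.

```python
# Rough pixel-throughput budget for the Drive PX figures quoted on stage.
# Assumption: each "high-definition" camera is 1920x1080 at 30 fps; NVIDIA did
# not state camera resolution or frame rate, so these numbers are illustrative.

DRIVE_PX_PIXELS_PER_SEC = 1.3e9  # quoted processing rate
MAX_CAMERAS = 12                 # quoted camera count


def stream_rate(width: int, height: int, fps: int) -> int:
    """Pixels per second produced by one video stream."""
    return width * height * fps


per_camera_budget = DRIVE_PX_PIXELS_PER_SEC / MAX_CAMERAS
hd30 = stream_rate(1920, 1080, 30)            # one assumed 1080p30 camera
twelve_hd30 = MAX_CAMERAS * hd30              # all twelve cameras at once
dual_4k30 = 2 * stream_rate(3840, 2160, 30)   # the dual 4K, 30Hz recording figure

print(f"Budget per camera: {per_camera_budget / 1e6:.1f} Mpix/s")
print(f"One 1080p30 camera: {hd30 / 1e6:.1f} Mpix/s")
print(f"Twelve 1080p30 cameras: {twelve_hd30 / 1e9:.2f} Gpix/s")
print(f"Dual 4K30 recording: {dual_4k30 / 1e6:.1f} Mpix/s")
```

Under that assumption, a dozen 1080p30 cameras would use a little under 60 percent of the quoted 1.3 Gpixels/s, leaving headroom for higher frame rates or resolutions.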

At a high level, the NVIDIA Drive PX works like this: camera data enters the Drive PX through a crossbar and is then routed to the appropriate block inside the platform for whatever workload is assigned. The Drive PX then uses GPU-accelerated “deep learning” to identify objects (i.e., computer vision) and assess situations and environments. Bits of data reside in what amount to “neurons,” which are all linked by “synapses,” and the network is trained to compare and combine those bits of data to learn what they represent. For example, a network may hold features such as headlights, wheels, and geometric shapes, which in combination tell it that it’s seeing a car. Likewise, features such as arms, legs, and a torso can be combined to detect humans.
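To make the “neurons linked by synapses” analogy a little more concrete, here’s a toy sketch of a single artificial neuron that combines weighted feature scores into one “looks like a car” score. The feature names, weights, and bias are hypothetical hand-picked values; a real deep network stacks many layers of such neurons and learns millions of weights from training data rather than having them set by hand.

```python
# Toy illustration of one artificial neuron: weighted inputs ("synapses") from
# lower-level feature detectors are summed and squashed into a single score.
# Feature names, weights, and bias are hypothetical, hand-picked values.
import math


def sigmoid(x: float) -> float:
    """Squash a raw activation into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def car_neuron(features: dict) -> float:
    """Return a 0-1 'looks like a car' score from lower-level feature scores."""
    weights = {"headlights": 2.0, "wheels": 3.0, "rectangular_body": 1.5}  # synapse strengths
    bias = -3.0  # requires several features to agree before the neuron "fires"
    activation = bias + sum(w * features.get(name, 0.0) for name, w in weights.items())
    return sigmoid(activation)


car_like = {"headlights": 0.9, "wheels": 0.8, "rectangular_body": 0.7}
person_like = {"headlights": 0.0, "wheels": 0.1, "rectangular_body": 0.2}
print(round(car_neuron(car_like), 2), round(car_neuron(person_like), 2))
```

When headlights, wheels, and a boxy shape all score highly, the neuron’s output rises toward 1; with those features largely absent, it stays near 0.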

NVIDIA then demonstrated the Drive PX platform in action after only a few weeks of training. The demo showed the setup detecting crosswalk signs to identify areas with heavy pedestrian traffic, as well as detecting speed limit signs and pedestrians. NVIDIA also showed the Drive PX handling more difficult cases, such as occluded pedestrians (say, someone walking between cars) and signs in poorly lit, nighttime environments. The Drive PX was precise enough to detect and alert the driver to upcoming traffic cameras, brake lights, and congestion. These demos weren’t limited to detecting one thing at a time, either: the platform detected many objects simultaneously and could alert drivers to upcoming traffic and to police cars (or other emergency vehicles) approaching from behind. It is even smart enough to distinguish vehicle types and situations to make specific driving recommendations. For example, if a work truck is detected ahead on the side of the road, the driver could be alerted to move over.
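As a rough illustration of how simultaneous detections might be turned into driver recommendations like the ones in the demo, here’s a hypothetical rule-table sketch. The labels, positions, confidence threshold, and `Detection` class are all assumptions made up for this example; they are not NVIDIA’s software or API, and a production system would be far more involved.

```python
# Hypothetical sketch: map simultaneous detections to driver alerts.
# Labels, positions, threshold, and the Detection class are illustrative
# assumptions, not NVIDIA's Drive PX software or API.
from dataclasses import dataclass


@dataclass
class Detection:
    label: str         # e.g. "pedestrian", "work_truck"
    position: str      # e.g. "ahead", "behind", "roadside_ahead"
    confidence: float  # detector confidence in [0, 1]


# Exact (label, position) lookup table of recommendations.
RULES = {
    ("pedestrian", "ahead"): "Pedestrian ahead: slow down",
    ("brake_lights", "ahead"): "Traffic braking ahead",
    ("emergency_vehicle", "behind"): "Emergency vehicle approaching from behind",
    ("work_truck", "roadside_ahead"): "Work truck on shoulder: move over",
}


def alerts(detections, min_confidence=0.5):
    """Return one alert per sufficiently confident detection that matches a rule."""
    return [
        RULES[(d.label, d.position)]
        for d in detections
        if d.confidence >= min_confidence and (d.label, d.position) in RULES
    ]


frame = [
    Detection("work_truck", "roadside_ahead", 0.92),
    Detection("emergency_vehicle", "behind", 0.81),
    Detection("pedestrian", "ahead", 0.30),  # below threshold, so no alert
]
for message in alerts(frame):
    print(message)
```

A lookup table keeps the mapping from “what was seen, and where” to “what to tell the driver” easy to audit, while the hard part, reliable detection, stays in the neural network.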

To quantify the Tegra X1’s performance in the context of neural networks and computer vision, Jen Hsun also talked about the AlexNet test, named for the deep convolutional neural network described in the paper “ImageNet Classification with Deep Convolutional Neural Networks.” The network uses 60 million parameters and 650,000 neurons to classify images into 1,000 different categories. When running the test, the Tegra X1 is able to classify 30 images per second. For comparison, the Tegra K1 could only manage about 12 images per second.
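For a sanity check on those numbers, the sketch below counts AlexNet’s weights and biases using the layer shapes from the original paper, in which conv2, conv4, and conv5 are split across two GPU groups (hence their halved input depth). It then works out the X1-versus-K1 speedup from the throughput figures quoted on stage. This is our own arithmetic, not NVIDIA’s benchmark methodology.

```python
# Count AlexNet parameters from the layer shapes in the original paper, then
# compare the quoted Tegra X1 and Tegra K1 throughput figures.

# (number of filters, kernel height, kernel width, input depth per filter)
CONV_LAYERS = [
    (96, 11, 11, 3),   # conv1
    (256, 5, 5, 48),   # conv2 (split across two groups)
    (384, 3, 3, 256),  # conv3
    (384, 3, 3, 192),  # conv4 (split across two groups)
    (256, 3, 3, 192),  # conv5 (split across two groups)
]
# (inputs, outputs) for the fully connected layers; fc6 sees a 6x6x256 feature map
FC_LAYERS = [(6 * 6 * 256, 4096), (4096, 4096), (4096, 1000)]


def count_parameters() -> int:
    """Sum weights plus one bias per filter/output across all layers."""
    conv = sum(n * kh * kw * d + n for n, kh, kw, d in CONV_LAYERS)
    fc = sum(i * o + o for i, o in FC_LAYERS)
    return conv + fc


params = count_parameters()
print(f"AlexNet parameters: {params:,}")

# Throughput figures quoted at the press conference (images per second).
tegra_x1, tegra_k1 = 30, 12
print(f"Speedup: {tegra_x1 / tegra_k1:.1f}x")
print(f"Time per image: X1 {1000 / tegra_x1:.1f} ms, K1 {1000 / tegra_k1:.1f} ms")
```

The count lands at roughly 61 million, consistent with the “60 million parameters” figure, and the quoted throughput works out to a 2.5x improvement, or about 33 ms per image on the X1 versus about 83 ms on the K1.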

There was no GeForce news from NVIDIA just yet, but CES hasn’t officially started. Stay tuned to HotHardware for more from the show in the days ahead.