AMD Details High Bandwidth Memory (HBM) DRAM Tech, Pushes Over 100GB/s Per Stack

Over the past few months, a number of details regarding AMD’s next-generation Radeon 300-series graphics cards have trickled out, even though the cards aren’t due to launch for quite some time. While official details of the actual GPUs that will power the cards are still scarce (though rumors abound), AMD has publicly disclosed details regarding the revolutionary High Bandwidth Memory (HBM) interface that will be used on some Radeon 300-series products, and potentially future APUs as well.

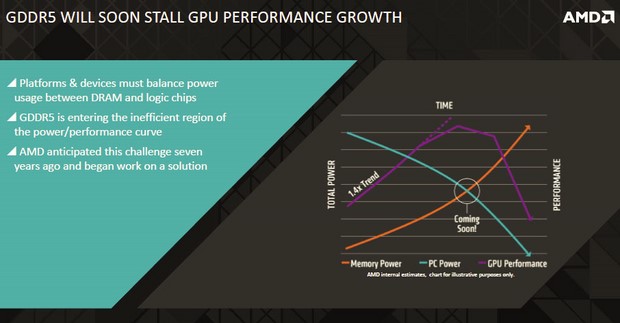

High Bandwidth Memory is designed to address a number of limitations of current GDDR5 memory implementations. With many of today’s graphics cards, a relatively large number of GDDR5 chips are necessary to offer sufficient capacity and bandwidth. That means a significant amount of PCB real estate is consumed by the memory and its associated voltage regulators. In addition, routing the traces required for a wide memory interface on a high-end graphics card significantly increases the complexity of the design. The power requirements of an off-chip interface are also typically higher than those of an integrated solution.

Historically, shrinking and integrating functions onto the same piece of silicon has been used to address some of these issues. However, on-chip integration is not ideal for DRAM because it is neither size- nor cost-effective on a logic-optimized manufacturing process, like those used to manufacture GPUs, CPUs, or SoCs.

In an effort to increase bandwidth, reduce real estate and power requirements, and bring the DRAM as close as possible to the logic die (i.e., the GPU), AMD partnered with a number of companies to help define a new specification and design a new type of memory chip with low power consumption and an ultra-wide bus. AMD originally worked with Hynix to develop the HBM standard and memory, which was eventually adopted by JEDEC (document number JESD235) in October 2013. AMD also worked with ASE, Amkor, and UMC to develop a key component of the technology, called the interposer. The interposer allows DRAM to be brought into close proximity with the GPU and simplifies communication and clocking, even at very wide bus widths.

HBM DRAM chips are stacked vertically, and “through-silicon vias” (TSVs) and “μbumps” are used to connect one DRAM chip to the next, and then to a logic die, and ultimately the interposer. TSVs and μbumps are also used to connect the SoC/GPU to the interposer and the entire assembly is connected onto the same package substrate. The end result is a single package on which the GPU/SoC and High Bandwidth Memory both reside.

Having everything on a single package has obvious real-estate benefits. 1GB of GDDR5 memory (composed of four 256MB chips) requires roughly 672mm². HBM is not only smaller than typical GDDR5 memory, but because it’s vertically stacked, that same 1GB requires only about 35mm². According to AMD, 9900mm² of PCB footprint is required for an AMD Radeon R9 290X GPU and its associated memory, whereas <4900mm² is required for an upcoming HBM-based ASIC.
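
To put those footprint figures in perspective, here is a quick back-of-the-envelope calculation. It uses only the numbers AMD quoted above; the variable and function names are our own, and because AMD gives the HBM-based ASIC footprint as an upper bound ("<4900mm²"), the second result should be read as a minimum saving rather than an exact one.

```python
# Areas in mm^2, taken from the figures AMD quoted in the article.
GDDR5_AREA_PER_GB = 672.0    # four 256MB GDDR5 chips
HBM_AREA_PER_GB = 35.0       # one vertically stacked 1GB HBM package
R9_290X_FOOTPRINT = 9900.0   # Radeon R9 290X GPU plus its memory on the PCB
HBM_ASIC_FOOTPRINT = 4900.0  # upper bound: AMD quotes "<4900mm2"


def reduction(before, after):
    """Return (shrink factor, percent of area saved) going from before to after."""
    return before / after, 100.0 * (before - after) / before


memory_factor, memory_saved = reduction(GDDR5_AREA_PER_GB, HBM_AREA_PER_GB)
board_factor, board_saved = reduction(R9_290X_FOOTPRINT, HBM_ASIC_FOOTPRINT)

print(f"1GB of memory: {memory_factor:.1f}x smaller ({memory_saved:.1f}% saved)")
print(f"GPU + memory: at least {board_factor:.2f}x smaller ({board_saved:.1f}% saved)")
```

In other words, the memory itself shrinks by roughly 19x, while the overall GPU-plus-memory footprint is cut by at least half.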

To summarize, High Bandwidth Memory will offer performance well beyond current GDDR5 (and DDR4), with increased power efficiency (roughly 3x the performance per watt of GDDR5) and in much smaller form factors. How much faster, smaller, and more power efficient HBM is in a real-world product implementation remains to be seen, but it won’t be much longer before we can tell you all about it. All signs point to June for AMD’s next-gen GPU release, so stay tuned if you’re interested in more details.